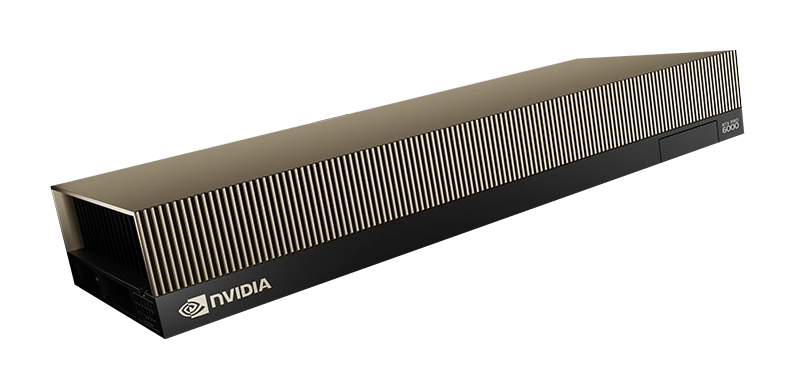

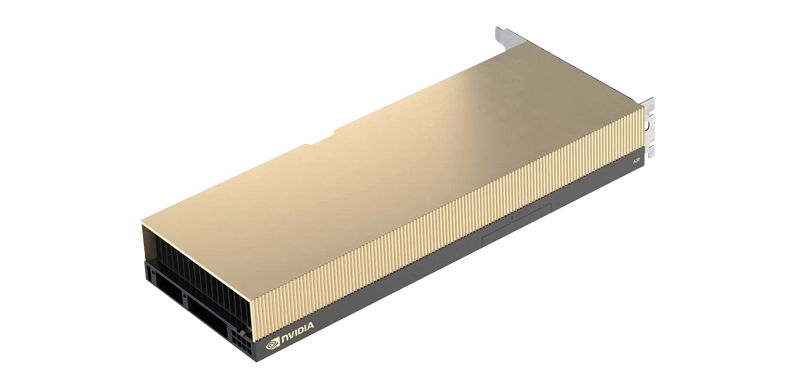

L40S

| Metric | Performance (TFLOPS) |
|---|---|
| Ray tracing | 209 |
| Double precision (FP64) | 1.4 |
| Single precision (FP32) | 366 |
| Half precision (FP16) | 733 |

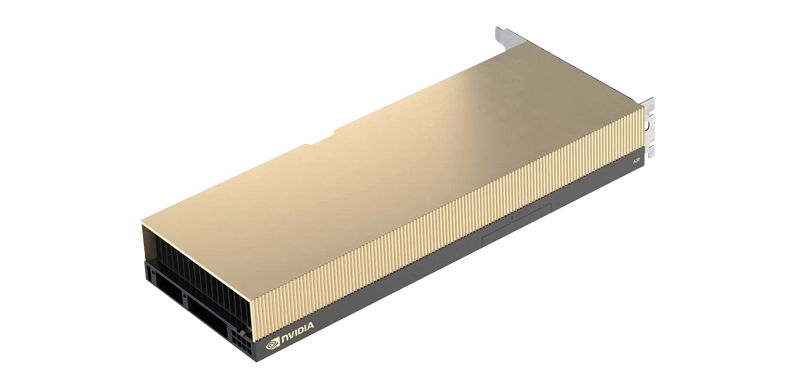

The L40S is the flagship datacentre GPU based on the Ada Lovelace architecture and is designed primarily for high-end graphics and AI workloads. It shares the overall configuration of the L40: 18,176 CUDA cores, 568 fourth-generation Tensor cores, 142 third-generation RT cores and 48GB of ultra-reliable GDDR6 ECC memory. However, the L40S features improved Tensor cores that deliver double the performance of the L40 at TF32 and FP16, making it a far superior card for AI training and inference.
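Core counts on Ada Lovelace GPUs scale with the number of streaming multiprocessors (SMs). As a quick arithmetic check, the sketch below assumes 142 enabled SMs, each containing 128 CUDA cores, 4 Tensor cores and 1 RT core; these per-SM figures and the SM count are assumptions not stated on this page, and `unit_counts` is a hypothetical helper.

```python
# Assumed per-SM resources of an Ada Lovelace streaming multiprocessor (SM).
CUDA_CORES_PER_SM = 128
TENSOR_CORES_PER_SM = 4
RT_CORES_PER_SM = 1

# Assumed number of enabled SMs on the L40/L40S.
ENABLED_SMS = 142


def unit_counts(sms: int) -> dict:
    """Return total CUDA, Tensor and RT core counts for a GPU with `sms` enabled SMs."""
    return {
        "cuda_cores": sms * CUDA_CORES_PER_SM,
        "tensor_cores": sms * TENSOR_CORES_PER_SM,
        "rt_cores": sms * RT_CORES_PER_SM,
    }


counts = unit_counts(ENABLED_SMS)
print(counts)  # {'cuda_cores': 18176, 'tensor_cores': 568, 'rt_cores': 142}
```

Under these assumptions the CUDA and RT core totals reproduce the 18,176 and 142 quoted for the card.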

CUDA

CUDA cores are the workhorse of Ada Lovelace GPUs: the architecture packs in a large number of them and delivers up to 1.5x the FP32 performance of the previous Ampere generation.

RAY TRACING

Ada Lovelace GPUs feature third-generation RT cores that deliver up to double the real-time photorealistic ray-tracing performance of previous-generation GPUs.

DATA SCIENCE & AI

Fourth-generation Tensor cores boost scientific computing and AI development with up to 3x the performance of Ampere GPUs, and support mixed-precision floating-point acceleration.
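To make "mixed precision" concrete, here is a CPU-side Python sketch of the numerical idea only, not of how Tensor cores are actually programmed: inputs are stored in FP16 while the running sum is kept in FP32. The helper names (`round_fp16`, `round_fp32`, `dot`) are hypothetical, and IEEE 754 rounding is emulated with the standard `struct` module.

```python
import struct


def round_fp16(x: float) -> float:
    """Round a Python float to the nearest IEEE 754 half-precision value."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


def round_fp32(x: float) -> float:
    """Round a Python float to the nearest IEEE 754 single-precision value."""
    return struct.unpack("<f", struct.pack("<f", x))[0]


def dot(a, b, accumulate):
    """Dot product with FP16 inputs; `accumulate` rounds each running sum to the accumulator format."""
    acc = 0.0
    for x, y in zip(a, b):
        # Inputs are stored in FP16; the product of two FP16 values is exact in a Python float.
        p = round_fp16(x) * round_fp16(y)
        acc = accumulate(acc + p)
    return acc


a = [0.1] * 4096
b = [1.0] * 4096

fp16_acc = dot(a, b, round_fp16)   # FP16 inputs, FP16 accumulator
mixed_acc = dot(a, b, round_fp32)  # FP16 inputs, FP32 accumulator (mixed precision)

print(fp16_acc, mixed_acc)  # 256.0 409.5
```

With a pure FP16 accumulator the sum stalls at 256, because beyond that point each increment of roughly 0.1 is smaller than half the FP16 spacing and rounds away; the FP32 accumulator returns the exact sum of the rounded inputs, 409.5. Keeping a wider accumulator while using narrow inputs is the general motivation for mixed-precision arithmetic.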
