SCAN AI: AI PROJECT PLANNING PART 7 OF 7 - GOVERNANCE

The previous part of this seven-part guide dealt with the model integration required when AI algorithms scale to GPU clusters, hybrid clouds and beyond, including data integrity and security concerns.

This final part addresses the compliance and regulatory requirements that are becoming increasingly common in the AI industry, helping you understand how they may affect your model development and deployment.

The EU AI Act

The AI Act is a regulation establishing a common compliance and legal framework for AI within the European Union (EU). It came into force on 1 August 2024, with its provisions coming into operation gradually over the following six to 36 months. It covers all types of AI across a broad range of sectors, with exceptions for military, national security, research and non-professional purposes. The Act classifies non-exempt AI applications by their risk of causing harm into four levels – unacceptable, high, limited, minimal – plus an additional category for general-purpose AI, as follows:

Unacceptable risk

AI applications in this category are banned unless a specific exemption applies. They include applications that manipulate human behaviour, those that use real-time remote biometric identification (such as facial recognition) in public spaces, and those used for social scoring, that is, ranking individuals based on their personal characteristics, socio-economic status or behaviour.

High-risk

AI applications that are expected to pose significant threats to health, safety or the fundamental rights of persons, notably AI systems used in health, education, recruitment, critical infrastructure management, law enforcement or justice. They are subject to quality, transparency, human oversight and safety obligations, and in some cases require a Fundamental Rights Impact Assessment before deployment. They must be evaluated both before they are placed on the market and throughout their life cycle.

Limited risk

AI applications in this category have transparency obligations, ensuring users are informed that they are interacting with an AI system and allowing them to make informed choices. This category includes AI applications that make it possible to generate or manipulate images, sound, or videos, such as deepfakes.

Minimal risk

AI applications that fall into this category are not regulated. It includes AI systems used in video games and spam filters.

General-purpose AI

This category covers foundation models, such as those that power ChatGPT. They are subject to transparency requirements and, potentially, an evaluation process. If the weights and model architecture are released under a free and open-source licence, only a training data summary and a copyright compliance policy are required.

Early in your AI project, it is wise to establish which of the above categories it falls into, so that you understand the regulatory burden and degree of transparency you can expect.
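As a rough illustration of that first-pass triage, the Python sketch below encodes the categories summarised above. The keyword sets, the `triage` function and its parameters are hypothetical, built only from the examples in this guide; the Act defines each category in far more detail, so treat the output as a prompt for proper legal review rather than a classification. Because a system can attract more than one set of obligations (for instance, a foundation model deployed as a chatbot), it returns a set of tiers rather than a single one.

```python
from enum import Enum
from typing import Set


class RiskTier(Enum):
    """EU AI Act categories as summarised in this guide."""
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"
    GENERAL_PURPOSE = "general-purpose"


# Hypothetical keyword sets drawn only from the examples in this guide,
# not from the Act's own (much longer) definitions.
PROHIBITED_USES = {
    "behavioural manipulation",
    "real-time remote biometric identification",
    "social scoring",
}
HIGH_RISK_DOMAINS = {
    "health", "education", "recruitment",
    "critical infrastructure", "law enforcement", "justice",
}
TRANSPARENCY_USES = {
    "chatbot", "image generation", "audio generation", "video generation", "deepfake",
}


def triage(use_case: str, domain: str, is_foundation_model: bool = False) -> Set[RiskTier]:
    """Return the tiers a project may fall into (hypothetical first-pass helper)."""
    if use_case in PROHIBITED_USES:
        # Banned outright unless a specific exemption applies.
        return {RiskTier.UNACCEPTABLE}
    tiers = set()
    if is_foundation_model:
        tiers.add(RiskTier.GENERAL_PURPOSE)
    if domain in HIGH_RISK_DOMAINS:
        tiers.add(RiskTier.HIGH)
    if use_case in TRANSPARENCY_USES:
        tiers.add(RiskTier.LIMITED)
    # Nothing matched: treat as minimal risk.
    return tiers or {RiskTier.MINIMAL}


if __name__ == "__main__":
    print(triage("cv screening", "recruitment"))
    print(triage("chatbot", "retail", is_foundation_model=True))
```

Checking prohibited uses first mirrors the order of the guide: if a use is banned, the other categories no longer matter.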

82% of companies have already adopted or are exploring artificial intelligence (AI) solutions.

IBM AI Global Adoption Report, 2024

ISO 42001

The rapid growth of AI has raised many ethical, privacy and security concerns. In response, the International Organization for Standardization (ISO) and the International Electrotechnical Commission (IEC) have developed a new standard, ISO/IEC 42001:2023 (or simply ISO 42001), defined as “an international standard that specifies requirements for establishing, implementing, maintaining, and continually improving an artificial intelligence management system (AIMS) within organisations.” Achieving certification demonstrates that a business or organisation has trustworthy AI practices, as summarised below:

Transparency

Any decisions made using an AI system must be fully transparent and free from bias or negative societal or environmental implications.

Accountability

To build user trust, organisations must hold themselves accountable by explaining the reasoning behind AI-related decisions.

Fairness

Any use of AI systems for automated decision-making should be assessed to make sure it is not unfair to specific people or groups.
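One common way to begin such an assessment is to compare how often the system makes a favourable decision for each group. The sketch below is a minimal, hypothetical example: `selection_rates` computes the share of positive decisions per group and `disparate_impact_ratio` divides the lowest rate by the highest. The 0.8 threshold is an assumption borrowed from the US "four-fifths" rule of thumb, not something ISO 42001 specifies, and a single metric like this is a starting point for review rather than proof of fairness.

```python
from collections import defaultdict


def selection_rates(groups, decisions):
    """Share of positive automated decisions per group.

    groups    -- group label for each person (hypothetical, e.g. an age band)
    decisions -- 1 if the system selected/approved the person, else 0
    """
    if len(groups) != len(decisions):
        raise ValueError("groups and decisions must be the same length")
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, decision in zip(groups, decisions):
        totals[group] += 1
        positives[group] += decision
    return {g: positives[g] / totals[g] for g in totals}


def disparate_impact_ratio(rates):
    """Lowest group selection rate divided by the highest (1.0 means parity)."""
    highest = max(rates.values())
    return min(rates.values()) / highest if highest > 0 else 1.0


# Assumed review threshold (US "four-fifths" rule of thumb).
THRESHOLD = 0.8

if __name__ == "__main__":
    groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
    decisions = [1, 1, 1, 0, 1, 0, 0, 0]
    rates = selection_rates(groups, decisions)
    ratio = disparate_impact_ratio(rates)
    # Prints: {'A': 0.75, 'B': 0.25} 0.33 review
    print(rates, round(ratio, 2), "review" if ratio < THRESHOLD else "ok")
```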

Explainability

Explanations of the important factors influencing an AI system's results should be provided to interested parties in a way people can understand.
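Model-agnostic techniques such as permutation importance are one way to surface those factors: shuffle one input at a time and measure how much the system's accuracy drops. In the sketch below, `toy_model` and its features (income, age, postcode) are purely hypothetical, chosen so that postcode has no influence and should score exactly zero; in practice you would substitute your own model and held-out data, or use an established library implementation.

```python
import random


def toy_model(row):
    """Hypothetical scoring model: income matters most, age a little, postcode not at all."""
    income, age, _postcode = row
    return 1 if 0.8 * income + 0.2 * age > 0.5 else 0


def accuracy(model, rows, labels):
    """Fraction of rows where the model's prediction matches the label."""
    return sum(model(r) == y for r, y in zip(rows, labels)) / len(labels)


def permutation_importance(model, rows, labels, n_features, seed=0):
    """Drop in accuracy when each feature column is shuffled (bigger = more influential)."""
    rng = random.Random(seed)
    baseline = accuracy(model, rows, labels)
    importances = []
    for j in range(n_features):
        column = [r[j] for r in rows]
        rng.shuffle(column)
        # Rebuild each row with only feature j replaced by its shuffled value.
        shuffled = [r[:j] + (v,) + r[j + 1:] for r, v in zip(rows, column)]
        importances.append(baseline - accuracy(model, shuffled, labels))
    return importances


if __name__ == "__main__":
    data_rng = random.Random(1)
    rows = [(data_rng.random(), data_rng.random(), data_rng.random()) for _ in range(200)]
    labels = [toy_model(r) for r in rows]  # labels match the model, so baseline accuracy is 1.0
    scores = permutation_importance(toy_model, rows, labels, 3)
    for name, score in zip(["income", "age", "postcode"], scores):
        print(f"{name}: {score:.3f}")
```

The resulting scores can be reported alongside a decision as a plain-language ranking of which inputs mattered most.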

Data Privacy

A comprehensive data management and security system is crucial for protecting user privacy in an AI ecosystem.

Reliability

AI systems must demonstrate a high degree of safety and reliability in all domains.

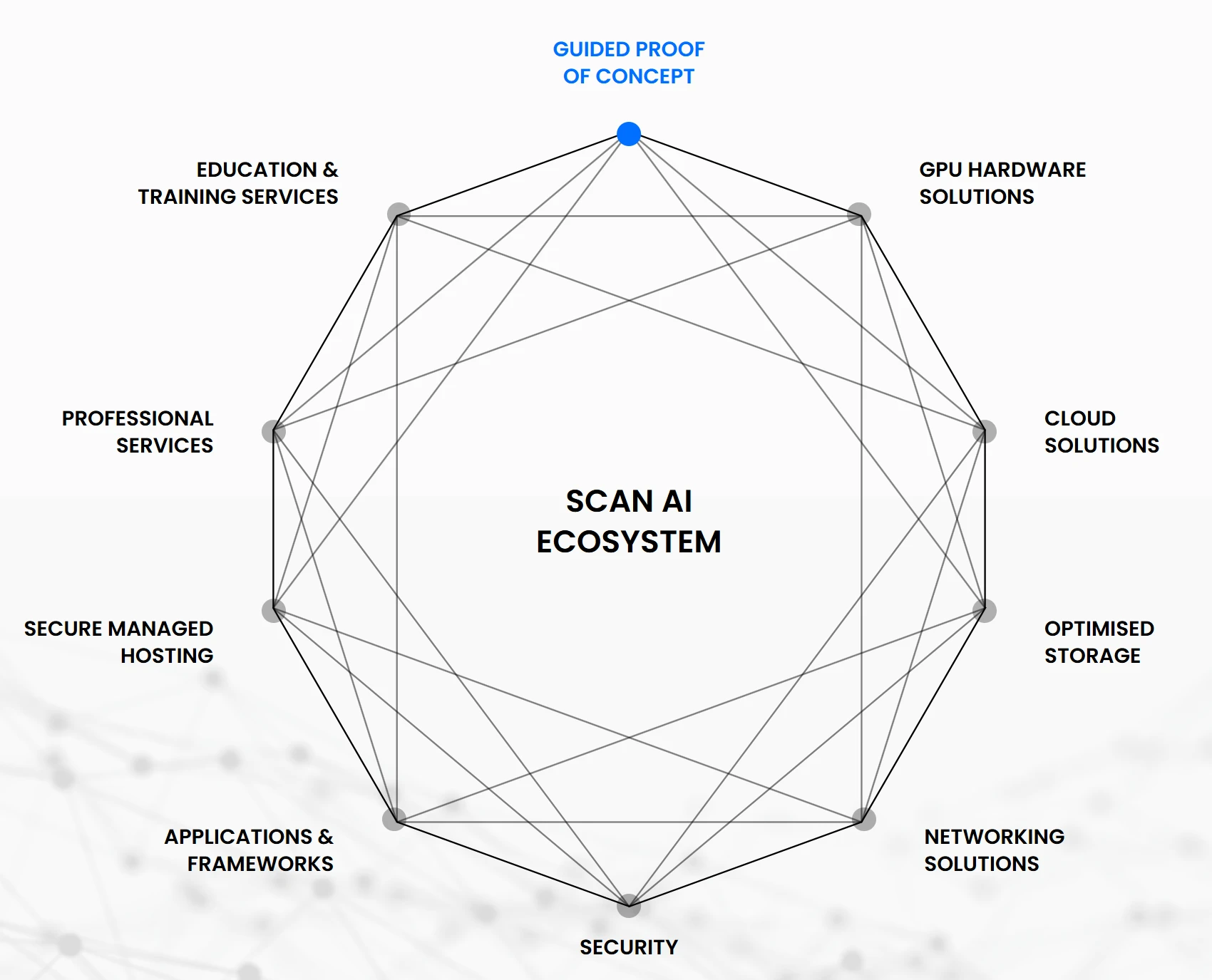

The Scan AI Ecosystem

We hope this guide has demonstrated that any AI project constantly evolves and is made up of numerous interlinking stages and processes that, individually and collectively, determine its success. To address this complex landscape, Scan has developed a comprehensive ecosystem of hardware, software and services to help you get the most from every aspect of your AI project.

We support your organisation every step of the way - from mindshare, first principles and proof of concept; to hardware audits, new system design and data science consultancy; through to infrastructure optimisation, cloud deployment, installation, configuration and post-deployment support services. We trust this guide has provided useful guidance and a suggested structure for your AI projects.

Read our 7-part AI Project Planning Guide

- Part 1 - Where do I start?

- Part 2 - Setting Expectations

- Part 3 - Data Preparation

- Part 4 - Model Development

- Part 5 - Model Training

- Part 6 - Model Integration

- Part 7 - Governance