FDL Europe is a public-private partnership between the European Space Agency (ESA), the University of Oxford, Trillium Technologies and leaders in commercial AI, supported by Google Cloud, NVIDIA and Scan AI. FDL Europe applies AI technologies to space science to push the frontiers of research and to develop new tools that help solve some of the biggest challenges humanity faces. These range from the effects of climate change to predicting space weather, and from improving disaster response to identifying meteorites that could hold the key to the history of our universe.

FDL Europe 2023 was an eight-week research sprint hosted by the European Space Agency Phi-Lab. The aim was to promote rapid learning and research outcomes in a collaborative atmosphere, pairing machine learning expertise with space science. Interdisciplinary teams addressed tightly defined ‘grand challenge’ problems, and the format encouraged rapid iteration and prototyping to create meaningful outputs for the good of all humanity. This approach involves combining data from various sources, leveraging expertise from multiple fields and using advanced technologies to tackle complex challenges.

Foundational Model Adaptors for Disasters

Project Background

Large Language Models (LLMs) have proven adept at summarising knowledge, and they also exhibit emergent properties, where simple instructions can significantly change their behaviour. They can be adapted to unseen tasks by carefully constructing written-language ‘prompts’, a process known as ‘zero-shot’ or ‘one-shot’ learning depending on whether the prompt includes a worked example. Research into LLMs and foundation models is uncovering new capabilities daily, increasing their ability to automate complex tasks. The FDL Europe 2023 research team took on the challenge of using LLMs to improve the curation, extraction, interpretation and dispatching of key insights about natural disasters, focusing on one of the most impactful disaster types in the world: flooding.
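
To make the zero-shot versus single-shot (one-shot) distinction concrete, here is a minimal Python sketch of the two prompt styles. The function names and the example task are hypothetical and are not taken from FloodBrain; the only difference between the two prompts is whether a single solved example precedes the new input.

```python
def zero_shot_prompt(instruction: str, text: str) -> str:
    """Zero-shot: the task is described in words only, with no solved example."""
    return f"{instruction}\n\nText: {text}\nAnswer:"


def one_shot_prompt(instruction: str, example_text: str,
                    example_answer: str, text: str) -> str:
    """One-shot: a single solved example precedes the new input."""
    return (f"{instruction}\n\n"
            f"Text: {example_text}\nAnswer: {example_answer}\n\n"
            f"Text: {text}\nAnswer:")


if __name__ == "__main__":
    # Hypothetical task used only to show the two prompt layouts.
    task = "Name the country affected by the flood described in the text."
    print(zero_shot_prompt(task, "Heavy rain flooded parts of Asuncion."))
    print()
    print(one_shot_prompt(task, "Rivers burst their banks in Bavaria.", "Germany",
                          "Heavy rain flooded parts of Asuncion."))
```

In both cases the model's weights are unchanged; only the text it is conditioned on differs.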

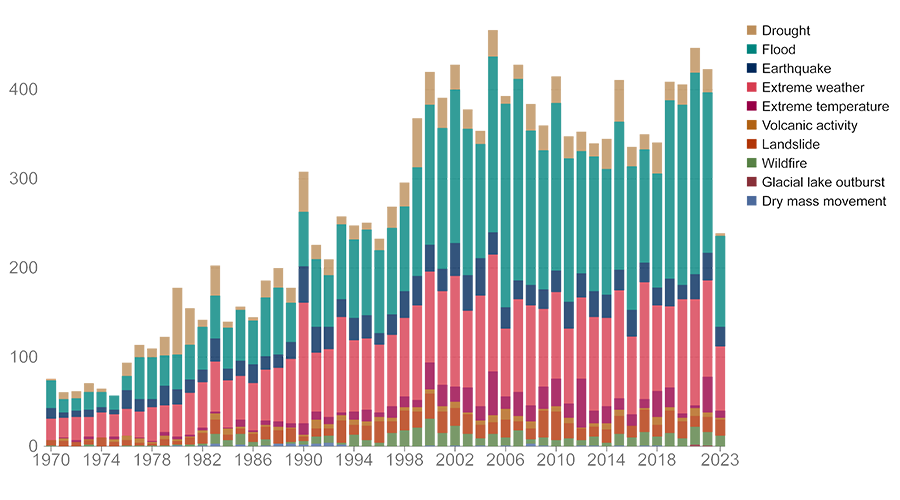

Floods are among the most devastating disasters around the globe. Between 2000 and 2019, over 1.65 billion people were affected by floods, 41% of all people affected by weather-related disasters. Climate change increases the likelihood of flooding by driving more extreme weather patterns. The number of major flood events recorded in the last twenty years more than doubled compared with the previous twenty, from 1,389 to 3,254, and 24% of the world’s population is exposed to floods. Exposure is especially high in low- and middle-income countries, which are home to 89% of the world’s flood-exposed people. As global warming continues to escalate, the probability of severe weather events extends beyond today’s high-risk areas. The ability to respond well to a growing number of disasters will therefore be critical.

Data source: EM-DAT - Centre for Research on the Epidemiology of Disasters (CRED) / UCLouvain (2023)

When a disaster strikes, humanitarian agencies quickly mobilise to provide relief using funds they already have at their disposal. In most cases, however, they must appeal to broader partners, such as the United Nations or local governments, to raise awareness of the situation and secure additional aid. A fundamental ingredient of these appeals is a situation report that describes the cause, impact and context of the disaster and identifies the work needed for recovery. Producing these flood reports quickly is essential for dispatching sufficient aid as soon as possible, but it is a labour-intensive, technical task that can be limited by several factors, such as the time available to the report writer, their skill level and their access to the right data sources. To complicate matters further, disaster relief agencies around the world use a variety of report structures and conventions and, given the specialised nature of these reports, only a fraction of events are analysed, limited by the staff available in these organisations.

Project Approach

The research team investigated how useful and trustworthy LLMs are as the basis of a reporting solution that eases the cognitive load of writing these flood reports. Because pre-trained LLMs have a knowledge cut-off date and hold no information about current events, simply asking questions via prompts will not produce satisfactory reports. Instead, the proposed interface needs to augment its input with trustworthy articles from the Internet. The team decided to collect data from three major disaster response services: the Copernicus Emergency Management Service (EMSR), the Global Disaster Alert and Coordination System (GDACS) and ReliefWeb, the humanitarian information portal of the United Nations Office for the Coordination of Humanitarian Affairs (OCHA). Each service uses its own criteria for classifying flooding disasters, so together these sources provide good coverage of floods distributed globally and over time.
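
Because each service reports floods under its own criteria, the same event can appear in more than one feed. The sketch below shows one hypothetical way to merge such records into unique key phrases of the form used by the pipeline ("Flooding Paraguay March 2023"). The record fields (`source`, `country`, `date`), the one-phrase-per-country-per-month rule and the sample dates are assumptions for illustration, not FloodBrain's actual catalogue logic.

```python
import calendar
from datetime import date


def key_phrases(events: list[dict]) -> list[str]:
    """Collapse flood records from several services into unique search key phrases.

    Hypothetical rule: records for the same country in the same calendar month
    are treated as one event, whichever service reported them.
    """
    unique = {(e["country"], e["date"].year, e["date"].month) for e in events}
    # Sort by country, then chronologically within each country.
    return [f"Flooding {country} {calendar.month_name[month]} {year}"
            for country, year, month in sorted(unique)]


if __name__ == "__main__":
    # Made-up records for illustration; real feeds would be parsed from each service.
    records = [
        {"source": "EMSR", "country": "Paraguay", "date": date(2023, 3, 5)},
        {"source": "GDACS", "country": "Paraguay", "date": date(2023, 3, 9)},
        {"source": "ReliefWeb", "country": "Paraguay", "date": date(2023, 4, 2)},
    ]
    print(key_phrases(records))
```

A month-level rule is deliberately coarse: it merges duplicates cheaply but would also merge two genuinely separate floods in the same country and month.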

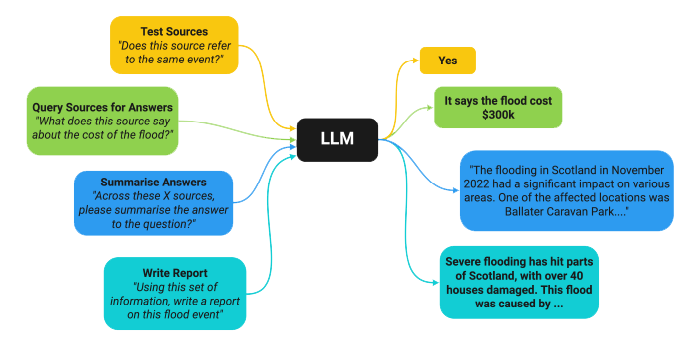

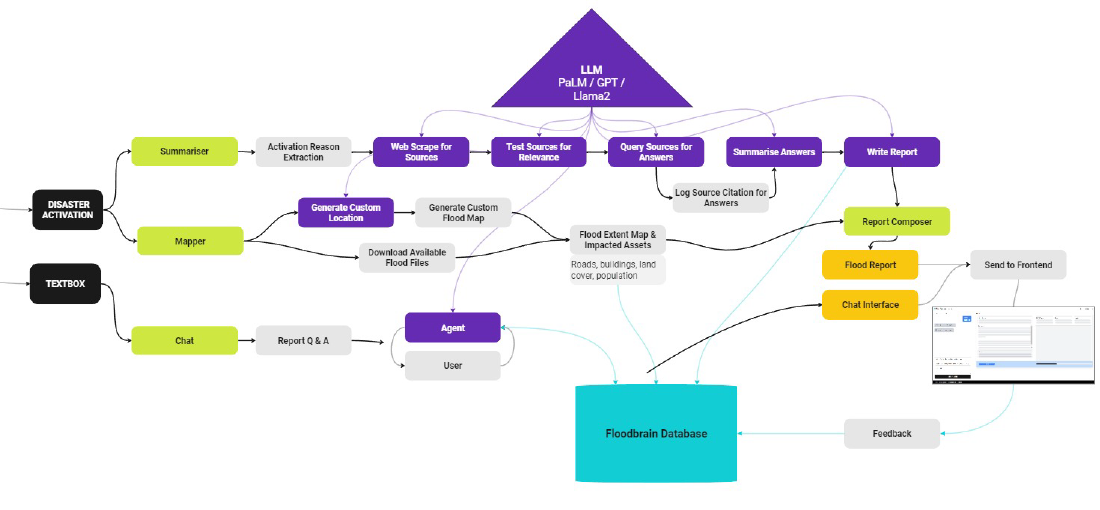

The diagram above summarises the process as a flowchart, with purple boxes marking the steps that can be assisted by LLMs. Report generation starts from a key phrase that includes the date and location of a flooding disaster, for example "Flooding Paraguay March 2023". This key phrase is used to perform a web search that gathers relevant web pages from the EMSR, GDACS and OCHA sources, plus the wider Internet. Text is extracted from each page and stored as a source for the pipeline. To gather more relevant sources, the team then prompts an LLM (GPT-4 proved the most effective) to expand the original query into other relevant search queries, which retrieve further information about the flood event. Each source is then passed as context to another LLM tasked with answering a set of questions that capture the key themes required of a flood report, as shown below.
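
The retrieval and question-answering steps can be sketched in Python as follows. This is a minimal illustration under stated assumptions: `llm` and `search` are hypothetical stand-ins for a hosted model such as GPT-4 and a web search client, and the prompt wording is invented. It shows the shape of the loop (expand the key phrase into extra queries, deduplicate the retrieved pages by URL, then ask every report question against every source separately), not the team's actual code.

```python
from typing import Callable

# Hypothetical interfaces: a model that maps a prompt to a completion, and a
# search client that maps a query to pages of the form {"url": ..., "text": ...}.
LLM = Callable[[str], str]
Search = Callable[[str], list[dict]]


def expand_queries(llm: LLM, key_phrase: str, n: int = 3) -> list[str]:
    """Keep the original key phrase and add up to n LLM-suggested queries."""
    prompt = (f"Suggest {n} web search queries that would find more information "
              f"about this flood event, one per line:\n{key_phrase}")
    suggestions = [line.strip() for line in llm(prompt).splitlines()]
    return [key_phrase] + [q for q in suggestions if q][:n]


def gather_sources(search: Search, queries: list[str]) -> list[dict]:
    """Run every query and keep each URL only once, in first-seen order."""
    sources, seen = [], set()
    for query in queries:
        for page in search(query):
            if page["url"] not in seen:
                seen.add(page["url"])
                sources.append(page)
    return sources


def answer_questions(llm: LLM, sources: list[dict],
                     questions: list[str]) -> dict[str, list[tuple[str, str]]]:
    """Ask every report question against every source on its own."""
    answers: dict[str, list[tuple[str, str]]] = {q: [] for q in questions}
    for src in sources:
        for q in questions:
            prompt = (f"Context:\n{src['text']}\n\n"
                      f"Using only the context above, answer: {q}\n"
                      "Reply 'not stated' if the context does not say.")
            answers[q].append((src["url"], llm(prompt)))
    return answers


if __name__ == "__main__":
    # Stand-ins so the sketch runs offline; swap in a real model and search client.
    def fake_llm(prompt: str) -> str:
        if prompt.startswith("Suggest"):
            return "Paraguay river levels March 2023\nAsuncion evacuations 2023"
        return "not stated"

    def fake_search(query: str) -> list[dict]:
        return [{"url": "https://example.org/" + query.replace(" ", "-"), "text": query}]

    queries = expand_queries(fake_llm, "Flooding Paraguay March 2023", n=2)
    sources = gather_sources(fake_search, queries)
    # Hypothetical report question, for illustration only.
    print(answer_questions(fake_llm, sources, ["How many people were displaced?"]))
```

Asking each question against each source separately, rather than pasting all sources into one prompt, keeps every answer tied to a single document, which is what makes per-source citation possible.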

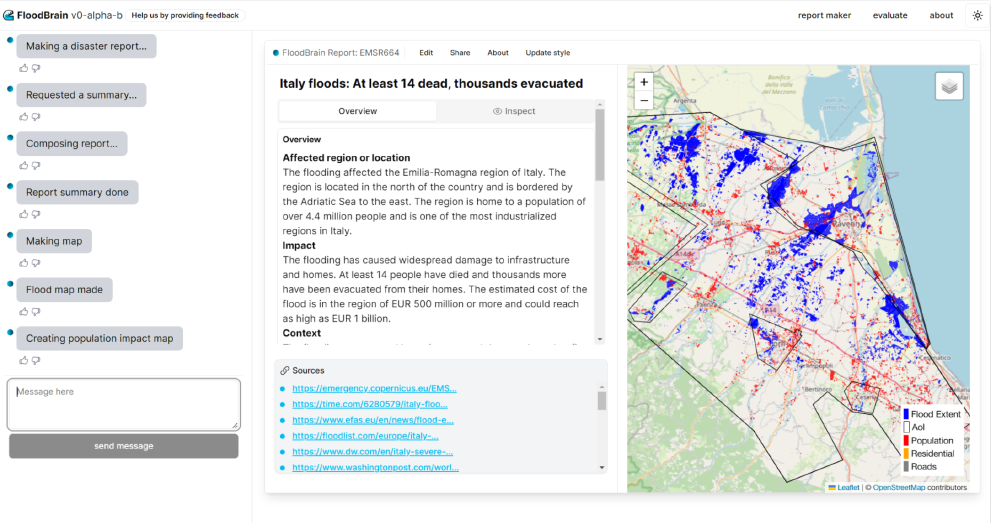

Trustworthiness is crucial in flood reporting, so the reports must be produced transparently if they are to be used effectively. LLMs are known to ‘hallucinate’, producing outputs that diverge from the facts, which undermines their reliability and applicability in real-world scenarios. To reduce hallucinated output and uphold the integrity of the information, the pipeline incorporates citation tracking, which lets users verify the data sources underpinning the generated report. For every source-question pair, a text snippet from the source that answers the question is logged in the pipeline database. These snippets are displayed, organised by question, in the ‘inspect’ tab of the report interface.
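
A minimal sketch of citation logging, using Python's built-in `sqlite3` module with an in-memory database. The table layout and function names are hypothetical. The `verified` flag, which checks that the logged snippet really occurs in the source text after whitespace and case normalisation, is an extra safeguard added here for illustration; the source does not say whether FloodBrain performs such a check.

```python
import sqlite3


def _normalise(text: str) -> str:
    """Collapse whitespace and lower-case so line breaks do not break matching."""
    return " ".join(text.split()).lower()


def open_citation_db() -> sqlite3.Connection:
    db = sqlite3.connect(":memory:")
    db.execute("""CREATE TABLE citations (
                      question   TEXT NOT NULL,
                      source_url TEXT NOT NULL,
                      snippet    TEXT NOT NULL,
                      verified   INTEGER NOT NULL)""")
    return db


def log_citation(db: sqlite3.Connection, question: str, source_url: str,
                 source_text: str, snippet: str) -> bool:
    """Store the snippet for one source-question pair; return whether it is verbatim."""
    verified = _normalise(snippet) in _normalise(source_text)
    db.execute("INSERT INTO citations VALUES (?, ?, ?, ?)",
               (question, source_url, snippet, int(verified)))
    return verified


def inspect_view(db: sqlite3.Connection) -> dict[str, list[tuple[str, str, bool]]]:
    """Group logged snippets by question, the way an 'inspect' tab might list them."""
    view: dict[str, list[tuple[str, str, bool]]] = {}
    rows = db.execute("SELECT question, source_url, snippet, verified "
                      "FROM citations ORDER BY question, source_url")
    for question, url, snippet, verified in rows:
        view.setdefault(question, []).append((url, snippet, bool(verified)))
    return view


if __name__ == "__main__":
    db = open_citation_db()
    text = "Heavy rain caused the river\nto overflow near the city."
    log_citation(db, "What caused the flood?", "https://example.org/a", text,
                 "Heavy rain caused the river to overflow")
    log_citation(db, "What caused the flood?", "https://example.org/b", text,
                 "A dam failed upstream")  # not in the source text, so flagged
    for question, cites in inspect_view(db).items():
        print(question)
        for url, snippet, ok in cites:
            print(f"  [{'ok' if ok else 'UNVERIFIED'}] {url}: {snippet}")
```

An exact-substring check is strict by design: it cannot confirm that a snippet actually answers the question, but it does catch snippets the model invented outright.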

The team also set out to augment the long-form text reports with a map, aiming to enrich the contextual understanding of each flood report by showing the flooded area alongside other relevant geospatial data. Several disaster response agencies offer mapping data in conjunction with their disaster charter activations. Whenever it was available, the team used flood extent maps from these resources, in particular from Copernicus EMSR. For flood events without mapping data, they generated custom flood extent maps from satellite data. To do this, the flood locations named in the report are geocoded so that RGB satellite imagery can be downloaded from Google Earth Engine, selecting the first image after the flood date with less than 30% cloud cover. A pre-existing flood segmentation algorithm (ml4floods) is then applied to this image to delineate the flood extent. The flood map is displayed alongside data from open sources such as OpenStreetMap and WorldPop, giving a more comprehensive picture of the disaster’s impact, including affected population density, points of interest and infrastructure locations. This extra information supports a more nuanced analysis and response.
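
Two of the mapping steps lend themselves to a short sketch: choosing the first sufficiently clear scene after the flood date, and overlaying a flood mask on a population grid to estimate how many people live in the flooded area. Both functions are hypothetical. Scene metadata is modelled as plain dictionaries rather than Google Earth Engine objects, the flood mask stands in for the output of the segmentation step (ml4floods in the original pipeline), and the two grids are assumed to be already co-registered at the same resolution, which in practice requires reprojection and resampling.

```python
from datetime import date
from typing import Optional


def first_clear_scene(scenes: list[dict], flood_date: date,
                      max_cloud_pct: float = 30.0) -> Optional[dict]:
    """Earliest scene acquired on or after flood_date with cloud cover below max_cloud_pct.

    Including same-day scenes is an assumption; each scene is a hypothetical
    metadata dict with "acquired" (date) and "cloud_pct" (0-100) keys.
    """
    clear = [s for s in scenes
             if s["acquired"] >= flood_date and s["cloud_pct"] < max_cloud_pct]
    return min(clear, key=lambda s: s["acquired"], default=None)


def affected_population(flood_mask: list[list[int]],
                        population: list[list[float]]) -> float:
    """Sum people per pixel over pixels the mask marks as flooded (non-zero).

    Assumes both grids are co-registered: same shape, same pixel footprint.
    """
    if len(flood_mask) != len(population) or any(
            len(m) != len(p) for m, p in zip(flood_mask, population)):
        raise ValueError("flood mask and population grid must have the same shape")
    return sum(people
               for mask_row, pop_row in zip(flood_mask, population)
               for flooded, people in zip(mask_row, pop_row)
               if flooded)


if __name__ == "__main__":
    # Made-up scene metadata and grids, for illustration only.
    scenes = [
        {"id": "A", "acquired": date(2023, 3, 8), "cloud_pct": 5.0},    # before the flood
        {"id": "B", "acquired": date(2023, 3, 12), "cloud_pct": 60.0},  # too cloudy
        {"id": "C", "acquired": date(2023, 3, 15), "cloud_pct": 20.0},
    ]
    print(first_clear_scene(scenes, date(2023, 3, 10))["id"])
    print(affected_population([[1, 0], [0, 1]], [[10.0, 20.0], [30.0, 40.0]]))
```

Returning `None` when no scene qualifies leaves the caller to decide whether to widen the date window or relax the cloud threshold.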

In addition to the report and accompanying maps, the team developed a custom chat interface for interrogating the collected knowledge. Built on a ReAct reasoning system, the tool is given the data sources collected while generating the report, so users can ask questions about the report through a text interface. This complete pipeline, called FloodBrain, is illustrated in the diagram above.
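
The ReAct pattern has the model alternate between a written Thought, an Action (here, a search over the collected sources) and an Observation returned by the tool, until it produces a final answer. The sketch below is a minimal, hypothetical illustration of that loop: the `Action: search[...]` syntax, the word-overlap retrieval and the scripted stand-in model are assumptions, not FloodBrain's implementation.

```python
import re
from typing import Callable

# Hypothetical action syntax the model is prompted to use, e.g. "Action: search[rainfall]".
ACTION = re.compile(r"Action:\s*search\[(.*?)\]")


def search_sources(sources: dict[str, str], query: str) -> str:
    """Toy retrieval: return every source that shares a word with the query."""
    words = set(query.lower().split())
    hits = [f"{name}: {text}" for name, text in sources.items()
            if words & set(text.lower().split())]
    return "\n".join(hits) or "No matching source."


def react_answer(llm: Callable[[str], str], sources: dict[str, str],
                 question: str, max_steps: int = 5) -> str:
    """Alternate Thought/Action (from the model) and Observation (from the tool)."""
    transcript = f"Question: {question}\n"
    for _ in range(max_steps):
        step = llm(transcript)  # model writes a Thought plus an Action or a Final Answer
        transcript += step + "\n"
        if "Final Answer:" in step:
            return step.split("Final Answer:", 1)[1].strip()
        match = ACTION.search(step)
        if match:  # run the tool and feed its result back as an Observation
            transcript += f"Observation: {search_sources(sources, match.group(1))}\n"
    return "No answer within the step limit."


if __name__ == "__main__":
    def scripted_llm(transcript: str) -> str:
        # Stand-in model: searches once, then answers from the observation.
        if "Observation:" not in transcript:
            return "Thought: I should check the sources.\nAction: search[rainfall]"
        return "Thought: source-a mentions the cause.\nFinal Answer: heavy rainfall"

    docs = {"source-a": "Heavy rainfall caused the river to rise",
            "source-b": "Several roads were closed"}
    print(react_answer(scripted_llm, docs, "What caused the flood?"))
```

Restricting the tool to the sources gathered for the report keeps the chat grounded in the same material the user can inspect, and the step limit stops a model that never commits to an answer.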

Project Results

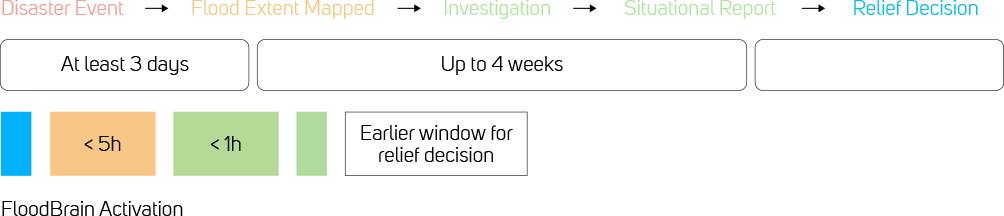

Faster access to vital flood reporting data and maps could be key to reducing response times as flood events become more commonplace. Not only does this LLM-based approach provide rapid insight, it also draws together a wider range of data sources, offering better visibility of potential geospatial impacts. Combined, this information and system could significantly reduce the time needed for emergency and agency relief action, as illustrated below.

The FloodBrain pipeline and database developed by the FDL Europe research team has produced a tool for fast and accurate flood reporting, ideal for humanitarian organisations seeking information about major flooding events around the globe. A screenshot/video of the user interface, available at floodbrain.com, is shown below, with the written report and visual mapping side by side.

Next Steps

FloodBrain is currently limited to reporting on flooding events defined as disasters by external agencies. Given the lack of publicly shared methodology for what warrants an investigative report, there is a risk of overlooking flooding events in areas of the world less attended to by these agencies. The FDL Europe team hopes to extend FloodBrain beyond these previously defined floods to improve access to flood reporting for these underreported communities.

You can learn more about this case study by visiting the FDL EUROPE 2023 RESULTS PAGE, where a summary, poster and full technical memorandum can also be viewed and downloaded.

The Scan Partnership

Scan is a major supporter of FDL Europe, building on its participation in the previous three years’ events. As an NVIDIA Elite Solution Provider, Scan contributes multiple DGX supercomputers to support much of the machine learning and deep learning development and training required during the research sprint.

Project Wins

Fast and accurate flood reporting through the FloodBrain online tool, providing rapid information for response teams

Time savings during the eight-week research sprint thanks to access to GPU-accelerated DGX systems

Cormac Purcell

Chief Scientist, Trillium Technologies

"Our partners Scan AI have been essential to solving the grand challenges in FDL Europe, like creating a world leading SAR processing pipeline. Such cutting-edge research can only be done with the fast and scalable GPU computing power and rapid access storage – technology mastered by Scan AI. We are very grateful for Scan AI’s excellent support and look forward to seeing what we can achieve in the future."

Dan Parkinson

Director of Collaboration, Scan

"We are proud to work with NVIDIA to support the FDL Europe research sprint with GPU-accelerated systems for the fourth year running. It is a huge privilege to be associated with such ground-breaking research efforts in light of the challenges we all face when it comes to climate change and extreme weather events."

Speak to an expert

You’ve seen how Scan continues to help FDL Europe further its research into climate change and space. Contact our expert AI team to discuss your project requirements.

Phone: 01204 4747210

Email: [email protected]