FDL Europe is a public-private partnership between the European Space Agency (ESA), the University of Oxford, Trillium Technologies and leaders in commercial AI, supported by Google Cloud, NVIDIA and Scan AI. FDL Europe applies AI technologies to space science to push the frontiers of research and develop new tools that help solve some of the biggest challenges humanity faces. These range from the effects of climate change to predicting space weather, and from improving disaster response to identifying meteorites that could hold the key to the history of our universe.

FDL Europe 2023 was an eight-week research sprint hosted by the European Space Agency Phi-Lab. The aim is to promote rapid learning and research outcomes in a collaborative atmosphere, pairing machine learning and AI expertise with space science. Interdisciplinary teams address tightly defined ‘grand challenge’ problems, and the format encourages rapid iteration and prototyping to create meaningful outputs for the good of all humanity. The approach combines data from various sources, draws on expertise from multiple fields and uses advanced technologies to tackle complex challenges.

Generalisable SSL SAR

Project Background

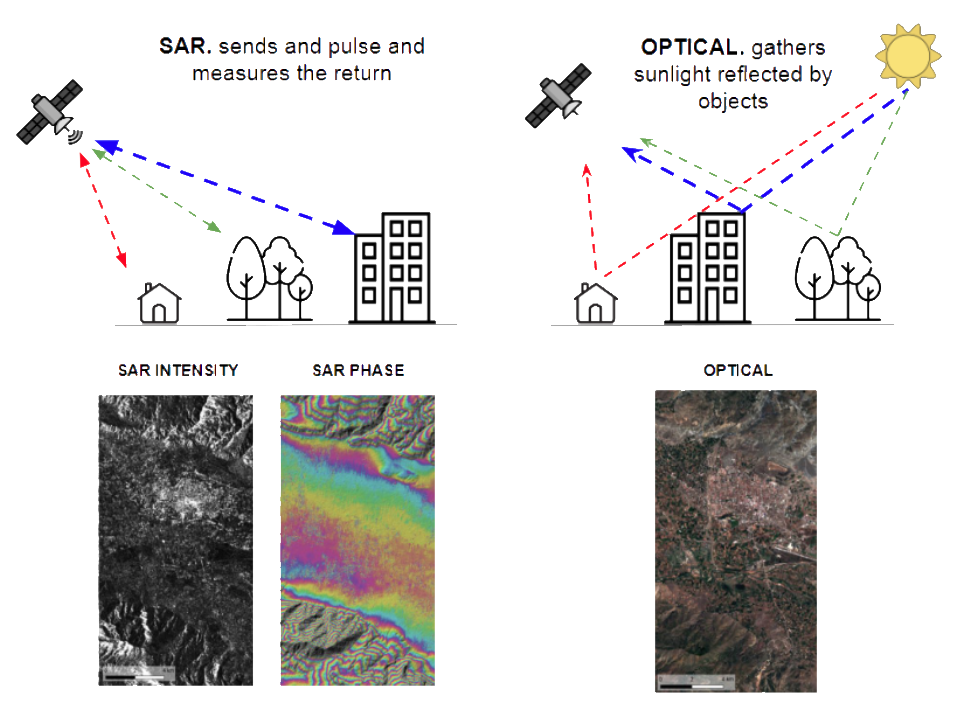

Synthetic Aperture Radar (SAR) is an active sensing technique used on orbiting satellites: radio waves are transmitted towards the ground, and a receiver on the satellite records how much of that energy is reflected back after interacting with the Earth's surface. Unlike optical observation, which relies on reflected sunlight, SAR can penetrate clouds and, through interferometric processing, can detect millimetre-level height changes on the ground. SAR data is complex and requires significant processing before it can be interpreted. However, machine learning (ML) methods, especially self-supervised learning (SSL), are showing great potential for delivering rapid insight in dynamic scenarios such as landslides and other emergencies. The current crop of ML-driven computer vision models is not easily adapted to complex SAR data, so there is a pressing need for new ML methods that can analyse information effectively across multiple locations and use-cases, and that make full use of the amplitude, phase and polarisation information present.
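To make the millimetre-level sensitivity concrete: in repeat-pass interferometry the radar signal travels to the ground and back, so an unwrapped phase change of Δφ corresponds to a line-of-sight displacement of λΔφ/(4π). The sketch below assumes a Sentinel-1 C-band wavelength of roughly 5.55 cm, a value not stated in this case study, and reports magnitudes only because sign conventions differ between processors. The function name is hypothetical.

```python
import math

# Approximate Sentinel-1 C-band radar wavelength in metres (assumed value, not from the case study).
SENTINEL1_WAVELENGTH_M = 0.0555


def los_displacement_m(delta_phase_rad: float, wavelength_m: float = SENTINEL1_WAVELENGTH_M) -> float:
    """Magnitude of line-of-sight ground displacement implied by an unwrapped phase change.

    The radar pulse travels to the ground and back, so one 2*pi phase cycle
    corresponds to half a wavelength of motion along the line of sight.
    """
    return abs(delta_phase_rad) * wavelength_m / (4 * math.pi)


# One full interferometric fringe (2*pi radians) is roughly 2.8 cm of motion ...
print(f"one fringe: {los_displacement_m(2 * math.pi) * 1000:.2f} mm")
# ... so resolving a small fraction of a fringe gives millimetre-scale sensitivity.
print(f"0.1 rad:    {los_displacement_m(0.1) * 1000:.3f} mm")
```

Real interferograms also contain atmospheric, orbital and topographic phase contributions that must be removed first; the formula only converts the remaining deformation phase.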

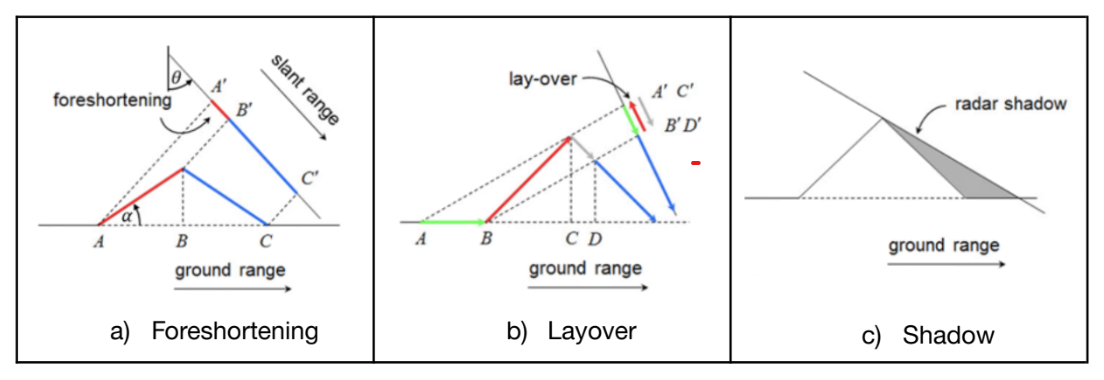

During FDL US 2022, the Disaster Response team developed ‘DeepSlide’, an end-to-end machine learning pipeline designed to exploit the full potential of SAR data to detect and characterise landslides across a wide area after an emergency event such as a hurricane or earthquake. This would allow emergency response decisions on resource distribution to be taken at the next SAR satellite overpass, regardless of cloud cover and atmospheric conditions. DeepSlide created ML-ready datasets for landslide detection in four particularly affected regions: Puerto Rico, Japan, Indonesia and New Zealand. However, this work revealed that distinctive local topography, combined with the side-looking geometry of the radar, produces serious distortions such as foreshortening, layover and shadowing, as illustrated in the diagrams below. These distortions limit how well models generalise, which is a hurdle to developing SSL SAR techniques that can easily be adapted to multiple use-cases.

To meet this challenge, the FDL Europe 2023 research team proposed building a precursor foundation model (FM) trained on large amounts of unlabelled data using self-supervision at scale. Built on SAR data and complementary datasets such as digital elevation maps, such a foundation model could, in theory, be adapted to a wide range of downstream Earth observation tasks, enabling improved performance, lower requirements for labelled data and radically improved generalisation. It would also build on many of the SSL techniques already developed by the FDL US team for DeepSlide, allowing them to be applied across multiple use-cases and geographies.

Project Approach

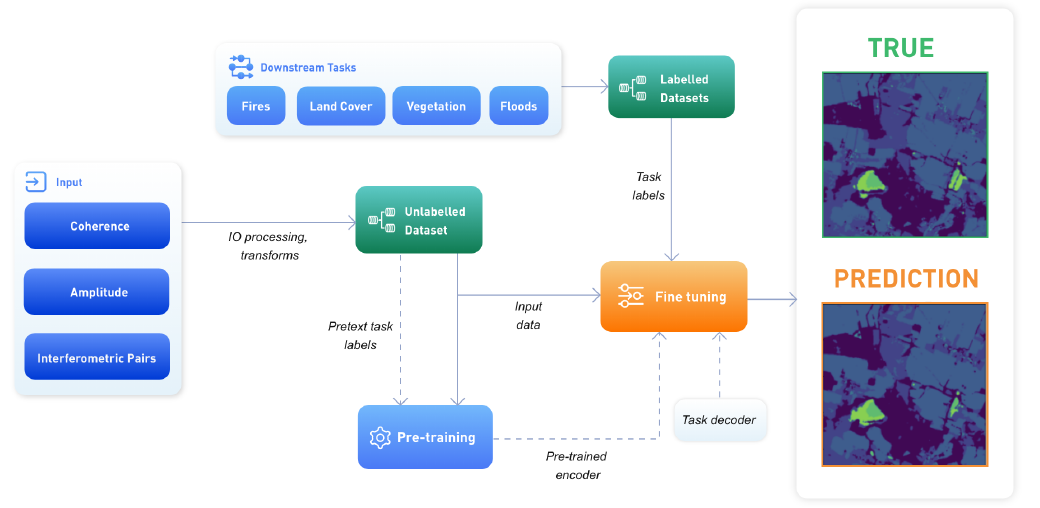

The main goal of this challenge was to study the feasibility of precursor Foundation Models for analysing SAR data. Here, ‘precursor’ refers to a model smaller than a true Foundation Model, but one that still allows the limitations and advantages of a large model trained on extremely diverse data from different geographical areas and times to be assessed. To achieve this, the team used a wealth of unlabelled SAR data as the initial input to the models during pre-training. These data were derived from the ARIA Sentinel-1 Geocoded Unwrapped Interferograms dataset (GUNW), which includes amplitude, coherence and unwrapped phase; the Global Seasonal Sentinel-1 Interferometric Coherence and Backscatter dataset (GSSIC), which includes seasonal summaries of amplitude and coherence; and the Sentinel-1 GRD dataset. Labelled data for a variety of downstream tasks was then added: vegetation cover, land cover, flood extent, fire scars and building shapes. The result was a final dataset of around 40 TB of ML-ready data covering six geographical regions, consisting of co-aligned tiles of SAR input data (SAR amplitude, coherence and interferometry) plus the data for the downstream tasks, as shown below.

Once the backbone of the pipeline was pre-trained, the team fine-tuned the model with supervised training, pairing the SAR input with the labelled data required for each specific downstream task. An overview of the complete machine learning pipeline is illustrated below.

To evaluate different precursor Foundation Model architectures thoroughly, the team tested four of the best-performing state-of-the-art self-supervised methods in the unlabelled pre-training phase: MAE, DINO, VICReg and CLIP. These were classified into two types, depending on whether they take a single input or multiple inputs, as shown below.

Single Input Models

Masked Autoencoders (MAE)

MAEs mask random patches of the input image and learn to reconstruct the missing pixels. MAEs are built as asymmetric encoder-decoder architectures, with an encoder that operates only on the visible subset of patches (without mask tokens), along with a lightweight decoder that reconstructs the original image from the latent representation and mask tokens.
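The masking step and the masked-only reconstruction loss can be sketched in plain Python. This is an illustration rather than the team's code: it assumes a 75% mask ratio (the main setting in the original MAE paper), a toy 8×8 single-channel tile, and hypothetical helper names `patchify`, `random_mask_patches` and `masked_mse`. The encoder and decoder networks themselves are omitted.

```python
import random
from typing import List, Optional, Tuple

Patch = List[List[float]]


def patchify(image: List[List[float]], patch_size: int) -> List[Patch]:
    """Cut a 2D image (list of rows) into non-overlapping square patches, row-major order."""
    patches = []
    for top in range(0, len(image), patch_size):
        for left in range(0, len(image[0]), patch_size):
            patches.append([row[left:left + patch_size] for row in image[top:top + patch_size]])
    return patches


def random_mask_patches(num_patches: int, mask_ratio: float = 0.75,
                        seed: Optional[int] = None) -> Tuple[List[int], List[int]]:
    """Randomly split patch indices into visible (encoder input) and masked (to reconstruct)."""
    rng = random.Random(seed)
    order = list(range(num_patches))
    rng.shuffle(order)
    num_keep = int(num_patches * (1 - mask_ratio))
    return sorted(order[:num_keep]), sorted(order[num_keep:])


def masked_mse(pred: List[Patch], target: List[Patch], masked: List[int]) -> float:
    """Reconstruction error averaged over the pixels of masked patches only."""
    errors = [(p - t) ** 2
              for i in masked
              for pred_row, tgt_row in zip(pred[i], target[i])
              for p, t in zip(pred_row, tgt_row)]
    return sum(errors) / len(errors)


# Toy 8x8 single-channel tile (e.g. SAR amplitude) split into sixteen 2x2 patches.
tile = [[float(r * 8 + c) for c in range(8)] for r in range(8)]
patches = patchify(tile, patch_size=2)
visible, masked = random_mask_patches(len(patches), mask_ratio=0.75, seed=0)

# Only the visible patches are passed to the encoder; no mask tokens are involved here.
encoder_input = [patches[i] for i in visible]
# A lightweight decoder would receive the encoded visible patches plus mask tokens at
# the `masked` positions; a perfect reconstruction would give zero loss.
print(len(encoder_input), "of", len(patches), "patches visible")
print(masked_mse(patches, patches, masked))
```

Because the encoder only ever sees a quarter of the patches, most of the compute goes into the lightweight decoder, which is what makes MAE pre-training comparatively cheap.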

Self-Distillation with No Labels (DINO)

DINO passes two different random transformations of an input image to a student network and a teacher network. Both networks have the same architecture but different parameters. The output of the teacher network is centred using a mean computed over the batch, and the similarity between the teacher and student outputs is then measured with a cross-entropy loss. The teacher parameters are updated with an exponential moving average of the student parameters.
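The centring, cross-entropy and moving-average rules can be sketched on plain vectors. This is a simplified illustration under stated assumptions, not the team's implementation: network outputs are replaced by hand-written logits, and the temperatures (0.1 for the student, 0.04 for the teacher, which sharpens its output), centre momentum (0.9) and EMA momentum (0.996) are typical values from the original DINO paper. All function names are hypothetical.

```python
import math
from typing import List

Vector = List[float]


def softmax(logits: Vector, temperature: float) -> Vector:
    """Temperature-scaled softmax; a low temperature sharpens the distribution."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def dino_loss(student_logits: Vector, teacher_logits: Vector, center: Vector,
              student_temp: float = 0.1, teacher_temp: float = 0.04) -> float:
    """Cross-entropy between the centred, sharpened teacher output and the student output."""
    centred = [t - c for t, c in zip(teacher_logits, center)]
    p_teacher = softmax(centred, teacher_temp)  # treated as a fixed target (no gradient)
    p_student = softmax(student_logits, student_temp)
    return -sum(pt * math.log(ps) for pt, ps in zip(p_teacher, p_student))


def update_center(center: Vector, teacher_batch: List[Vector], momentum: float = 0.9) -> Vector:
    """Running average of the batch mean of teacher outputs, used for centring."""
    batch_mean = [sum(col) / len(teacher_batch) for col in zip(*teacher_batch)]
    return [momentum * c + (1 - momentum) * m for c, m in zip(center, batch_mean)]


def ema_update(teacher_params: Vector, student_params: Vector, momentum: float = 0.996) -> Vector:
    """Teacher weights become an exponential moving average of the student weights."""
    return [momentum * t + (1 - momentum) * s for t, s in zip(teacher_params, student_params)]


# Hypothetical output logits for two augmented views of the same SAR tile.
student_v1, student_v2 = [2.0, 0.5, -1.0], [1.8, 0.7, -0.9]
teacher_v1, teacher_v2 = [2.1, 0.4, -1.2], [1.9, 0.6, -1.0]
center = [0.0, 0.0, 0.0]

# Each student view is compared with the teacher's output for the *other* view.
loss = 0.5 * (dino_loss(student_v1, teacher_v2, center) + dino_loss(student_v2, teacher_v1, center))
center = update_center(center, [teacher_v1, teacher_v2])
# In a real model these would be full weight tensors; two scalars stand in here.
teacher_weights = ema_update([0.5, -0.3], [0.6, -0.2])
print(round(loss, 4), center, teacher_weights)
```

Centring and sharpening pull in opposite directions (centring discourages one dimension from dominating, sharpening discourages a uniform output), which is how DINO avoids collapse without labels or negative pairs.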

Multi Input Models

Variance-Invariance-Covariance Regularization (VICReg)

VICReg uses two backbones as encoders, which may share the same architecture or use different ones. In the simplest case, a single image is fed to one backbone and an augmentation of that image is passed to the other. The model is trained with a loss function that combines three terms: variance, which forces the embedding vectors of samples within a batch to differ from one another; invariance, the distance between the embedding vectors of the two inputs; and covariance, which decorrelates the variables of each embedding and prevents them from becoming highly correlated.
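The three terms can be written out directly on two batches of embeddings. This stdlib sketch follows the formulation in the original VICReg paper (a hinge at 1 on each dimension's standard deviation, ε = 1e-4, and weights of 25, 25 and 1), but it is an illustration rather than the team's code: the invariance term here is a mean squared error over all elements, and the toy embeddings are made up.

```python
import math
from typing import List

Matrix = List[List[float]]  # rows = samples in the batch, columns = embedding dimensions


def column_means(z: Matrix) -> List[float]:
    return [sum(col) / len(z) for col in zip(*z)]


def variance_term(z: Matrix, gamma: float = 1.0, eps: float = 1e-4) -> float:
    """Hinge on each dimension's std across the batch: penalises collapsed dimensions."""
    n, means = len(z), column_means(z)
    penalties = []
    for j, mu in enumerate(means):
        var = sum((row[j] - mu) ** 2 for row in z) / (n - 1)
        penalties.append(max(0.0, gamma - math.sqrt(var + eps)))
    return sum(penalties) / len(means)


def covariance_term(z: Matrix) -> float:
    """Sum of squared off-diagonal covariances, divided by the embedding dimension."""
    n, d, means = len(z), len(z[0]), column_means(z)
    centred = [[row[j] - means[j] for j in range(d)] for row in z]
    total = 0.0
    for a in range(d):
        for b in range(d):
            if a != b:
                cov = sum(r[a] * r[b] for r in centred) / (n - 1)
                total += cov ** 2
    return total / d


def invariance_term(z_a: Matrix, z_b: Matrix) -> float:
    """Mean squared error between paired embeddings of the two inputs."""
    diffs = [(x - y) ** 2 for ra, rb in zip(z_a, z_b) for x, y in zip(ra, rb)]
    return sum(diffs) / len(diffs)


def vicreg_loss(z_a: Matrix, z_b: Matrix, lam: float = 25.0, mu: float = 25.0, nu: float = 1.0) -> float:
    return (lam * invariance_term(z_a, z_b)
            + mu * (variance_term(z_a) + variance_term(z_b))
            + nu * (covariance_term(z_a) + covariance_term(z_b)))


# Hypothetical embeddings of four tiles from each backbone.
z_a = [[1.0, -1.0], [-1.0, 1.0], [1.0, 1.0], [-1.0, -1.0]]
z_b = [[0.9, -1.1], [-1.1, 0.9], [1.1, 0.9], [-0.9, -1.1]]
collapsed = [[0.5, 0.5]] * 4  # every sample mapped to the same point

print(vicreg_loss(z_a, z_b))              # small: aligned, spread out and decorrelated
print(vicreg_loss(collapsed, collapsed))  # large: the variance hinge is fully active
```

The collapsed case shows why the variance term matters: invariance alone would be perfectly satisfied by mapping every tile to the same embedding.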

Contrastive Language-Image Pre-training (CLIP)

CLIP is an SSL method that contrastively learns to align the representations of image/text pairs collected from the Internet. This places different modalities of the same reality within a single embedding space, supporting a variety of multi-modal applications including detection, captioning, visual question answering (VQA) and conditional image generation. CLIP's architecture, heavily based on vision transformers, has also been applied to merge multimodal representations beyond text and in other domains.
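CLIP's training objective is a symmetric contrastive loss over a batch of paired embeddings: matching pairs sit on the diagonal of a similarity matrix and every other entry acts as a negative. A minimal sketch, assuming L2-normalised embeddings and a fixed temperature of 0.07 (CLIP initialises this value and then learns it). The pairing of amplitude and coherence embeddings below is a hypothetical SAR example, not a description of the team's setup.

```python
import math
from typing import List

Matrix = List[List[float]]


def l2_normalise(rows: Matrix) -> Matrix:
    return [[x / math.sqrt(sum(v * v for v in row)) for x in row] for row in rows]


def cross_entropy_diagonal(logits: Matrix) -> float:
    """Mean cross-entropy where the correct class for row i is column i."""
    total = 0.0
    for i, row in enumerate(logits):
        peak = max(row)
        log_sum_exp = peak + math.log(sum(math.exp(x - peak) for x in row))
        total += log_sum_exp - row[i]
    return total / len(logits)


def clip_loss(emb_a: Matrix, emb_b: Matrix, temperature: float = 0.07) -> float:
    """Symmetric contrastive loss: row i of emb_a should match row i of emb_b."""
    a, b = l2_normalise(emb_a), l2_normalise(emb_b)
    logits = [[sum(x * y for x, y in zip(ra, rb)) / temperature for rb in b] for ra in a]
    logits_t = [list(col) for col in zip(*logits)]  # the same scores seen from modality B
    return 0.5 * (cross_entropy_diagonal(logits) + cross_entropy_diagonal(logits_t))


# Hypothetical embeddings for three locations, one row per location in each modality.
amplitude_emb = [[1.0, 0.1, 0.0], [0.0, 1.0, 0.1], [0.1, 0.0, 1.0]]
coherence_emb = [[0.9, 0.2, 0.0], [0.1, 0.9, 0.0], [0.0, 0.1, 1.0]]
shuffled = [coherence_emb[1], coherence_emb[2], coherence_emb[0]]  # pairs broken

print(clip_loss(amplitude_emb, coherence_emb))  # low: true pairs line up on the diagonal
print(clip_loss(amplitude_emb, shuffled))       # high: true pairs are now off-diagonal
```

Using the other items in the batch as negatives means larger batches give the model more contrast per step, which is one reason CLIP-style training is usually run with very large batches.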

Project Results

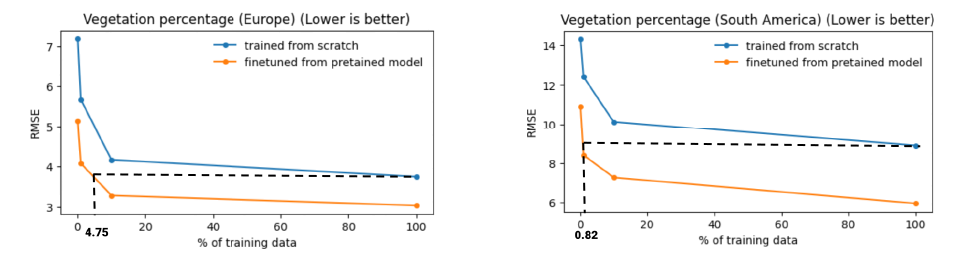

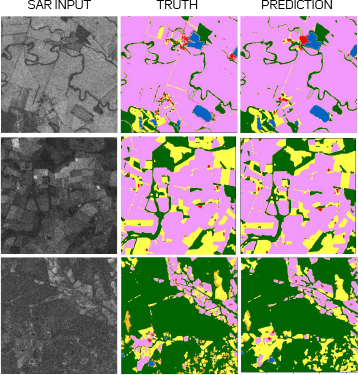

The FDL Europe team deployed four models (MAE, DINO, VICReg and CLIP) across four tasks: vegetation prediction, land cover classification, built surface measurement and fire scar detection. In all cases, self-supervised pre-training gave a consistent improvement in performance. The effect of pre-training was strongest for MAE, where the pre-trained model required fewer than 1% of the vegetation labels to match the fully supervised, randomly initialised model, as shown below. This label efficiency represents a paradigm shift in the use of deep learning for SAR data, enabling end-users to deploy easily customisable SAR foundation models.

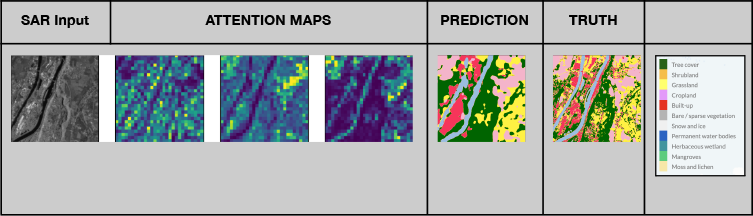

DINO also produced a useful additional output: attention maps, which provide a visual explanation of what a deep neural network focuses on. The attention maps exhibit emergent segmentation of salient features in SAR imagery despite requiring no ground-truth labels, and they lead to high-quality predictions, as illustrated below.

The VICReg model showed that SAR foundation models have great potential to improve performance in change detection tasks. Given SAR's particular strengths (all-weather, day-and-night operation), it is especially important that SAR foundation models can address these tasks. This is crucial for applications such as natural disaster monitoring and management, which are extremely time-sensitive and require immediate, reliable data for interventions to be effective.

Finally, the team used CLIP to examine the embedding space generated by the model encoders, and found that regions of that space were interpretable with respect to the downstream labels, showing clear separation between different physical characteristics, as illustrated above. This offers a shortcut for evaluating how well models transfer to new regions without retraining: examining distribution shift within the embedding space. This adaptability is crucial for deploying a global SAR foundation model, as perfect coverage for all instrument modes cannot be expected.
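One simple way to put a number on such a shift, offered here as an assumption rather than the team's documented method, is the maximum mean discrepancy (MMD) between encoder embeddings from a training region and from a new region, computed with an RBF kernel. The region embeddings, the kernel bandwidth and the function names below are hypothetical.

```python
import math
from typing import List

Matrix = List[List[float]]


def rbf(x: List[float], y: List[float], bandwidth: float = 1.0) -> float:
    """Gaussian (RBF) kernel between two embedding vectors."""
    sq_dist = sum((a - b) ** 2 for a, b in zip(x, y))
    return math.exp(-sq_dist / (2 * bandwidth ** 2))


def mmd_squared(source: Matrix, target: Matrix, bandwidth: float = 1.0) -> float:
    """Biased estimate of squared MMD: zero for identical sets, larger as they separate."""
    def mean_kernel(xs: Matrix, ys: Matrix) -> float:
        return sum(rbf(x, y, bandwidth) for x in xs for y in ys) / (len(xs) * len(ys))
    return (mean_kernel(source, source) + mean_kernel(target, target)
            - 2 * mean_kernel(source, target))


# Hypothetical 2D encoder embeddings for tiles from three regions.
train_region = [[0.0, 0.0], [0.1, 0.2], [0.2, 0.1]]
similar_region = [[0.05, 0.1], [0.15, 0.15], [0.1, 0.0]]
shifted_region = [[2.0, 2.1], [2.2, 1.9], [1.9, 2.0]]

print(mmd_squared(train_region, similar_region))  # close to zero: little shift
print(mmd_squared(train_region, shifted_region))  # much larger: embeddings have moved
```

A large value flags a region where the pre-trained encoder may not transfer well and where extra labels or fine-tuning are likely to be needed; the threshold for "large" would have to be calibrated on regions with known performance.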

You can learn more about this case study by visiting the FDL EUROPE 2023 RESULTS PAGE, where a summary, poster and full technical memorandum can also be viewed and downloaded.

The Scan Partnership

Scan is a major supporter of FDL Europe, building on its participation in the previous three years' events. As an NVIDIA Elite Solution Provider, Scan contributes multiple DGX supercomputers to support much of the machine learning and deep learning development and training required during the research sprint.

Project Wins

Demonstrated SAR foundation models to have great potential to improve performance in change detection tasks

Time savings generated during the eight-week research sprint due to access to GPU-accelerated DGX systems

Cormac Purcell

Chief Scientist, Trillium Technologies

"Our partners Scan AI have been essential to solving the grand challenges in FDL Europe, like creating a world leading SAR processing pipeline. Such cutting-edge research can only be done with the fast and scalable GPU computing power and rapid access storage – technology mastered by Scan AI. We are very grateful for Scan AI’s excellent support and look forward to seeing what we can achieve in the future."

Dan Parkinson

Director of Collaboration, Scan

"We are proud to work with NVIDIA to support the FDL Europe research sprint with GPU-accelerated systems for the fourth year running. It is a huge privilege to be associated with such ground-breaking research efforts in light of the challenges we all face when it comes to climate change and extreme weather events."

Speak to an expert

You’ve seen how Scan continues to help FDL Europe further its research into climate change and space. Contact our expert AI team to discuss your project requirements.

Phone: 01204 4747210

Email: [email protected]