GPU Hardware Solutions

GPU-accelerated systems for AI model development, training and inferencing

Demanding workloads such as deep learning, machine learning and AI can only be delivered efficiently by GPU-accelerated systems featuring one or more GPUs, depending on which stage of the pipeline you are at - development, training or inferencing. The Scan AI Ecosystem addresses all these scenarios with a range of 3XS development workstations, 3XS custom servers and NVIDIA DGX appliances. Because we are an NVIDIA Elite Partner, you can be confident that all our designs and configurations are tried, tested and in many cases certified by NVIDIA, ensuring we deliver the best performance whilst remaining cost-effective.

3XS AI Development Workstations

AI development workstations designed and built by 3XS Systems make starting your AI journey easy. Powered by NVIDIA GPUs, they provide data scientists with a cost-effective platform for developing AI models. 3XS AI workstations are fine-tuned by our hardware engineers for performance, reliability and, importantly, low noise, so they can be used comfortably in office environments. They come complete with a custom software stack, meaning you can spend more time on code development and less time configuring drivers, libraries and APIs.


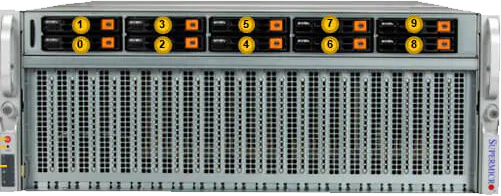

3XS EGX Custom AI Servers

Using a custom-designed training system for deep learning and AI workloads gives you the ultimate control. Choosing the ideal specification for your projects lets you build in flexibility and scalability as required. Every 3XS Systems EGX custom training server is almost infinitely configurable, from NVIDIA PCIe GPUs, AMD or Intel CPUs, memory and storage right through to connectivity, power, cooling and software.


3XS HGX Custom AI Servers

Using a custom-designed training system for deep learning and AI workloads gives you the ultimate control. Choosing the ideal specification for your projects lets you build in flexibility and scalability as required. Every 3XS Systems HGX custom training server is almost infinitely configurable, from NVIDIA SXM GPUs, AMD or Intel CPUs, memory and storage right through to connectivity, power, cooling and software.


3XS MGX Custom AI Servers

Using a custom-designed training system for deep learning and AI workloads gives you the ultimate control. Choosing the ideal specification for your projects lets you build in flexibility and scalability as required. Every 3XS Systems MGX custom training server is almost infinitely configurable, from NVIDIA Grace SuperChips, NVIDIA PCIe GPUs, Intel CPUs, memory and storage right through to connectivity, power, cooling and software.


NVIDIA DGX B200 AI Appliance

The sixth-generation DGX AI appliance is built around the Blackwell architecture and the flagship B200 GPU, providing unprecedented training and inferencing performance in a single system. The DGX B200 is a complete AI solution supported by the NVIDIA Base Command management suite and the NVIDIA AI Enterprise software stack, backed by specialist technical advice from NVIDIA DGXperts.


NVIDIA DGX H100 and DGX H200 Appliances

These fifth-generation DGX AI appliances are built around the Hopper architecture and the H100 or H200 GPU, providing outstanding training and inferencing performance in a single system. Each DGX system is a complete AI solution supported by the NVIDIA Base Command management suite and the NVIDIA AI Enterprise software stack, backed by specialist technical advice from NVIDIA DGXperts.


POD Architectures

PODs are reference architectures made up of multiple GPU-accelerated servers combined with optimised storage and connected by low-latency networking - all managed by a comprehensive software layer. POD infrastructure solutions can be configured in many ways, including a Scan POD, based on NVIDIA EGX and HGX server configurations; an NVIDIA BasePOD, made up of 2-40 DGX H100, H200 or B200 appliances; an NVIDIA SuperPOD, consisting of up to 140 DGX H100, H200 or B200 appliances centrally controlled with NVIDIA Unified Fabric Manager; and an NVIDIA DGX GB200 NVL72 Exascale appliance, designed exclusively for LLMs and generative AI.


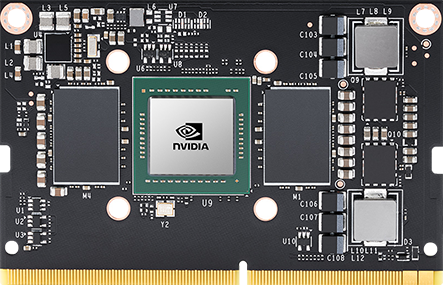

NVIDIA Embedded Solutions

NVIDIA Jetson is the world's leading platform for embedded applications, comprising small form-factor, high-performance computer modules, the NVIDIA JetPack SDK (Software Development Kit) for accelerating AI inferencing workloads, and an ecosystem of sensors, services and third-party products to speed up development. Every 3XS Jetson inferencing system is highly configurable and expandable to suit your operational environment - from the smallest form factors to weatherproof and ruggedised solutions for harsh conditions.


To help you choose which hardware solutions are the best fit for your organisation and its AI strategy, any of our systems can be trialled in our GUIDED PROOF OF CONCEPT environment.

Alternatively, contact our AI experts using the details below.