High-performance GPU-accelerated servers and AI-optimised storage systems need an equally high-performance network infrastructure to support them and to ensure there are no bottlenecks that affect data throughput. Whether located within a datacentre or at the edge of the network, connectivity is key to feeding increasingly large datasets rapidly to GPUs, so that they are fully utilised at all times and ROI is maximised across your entire AI infrastructure.

As an NVIDIA Elite Partner and the UK's only NVIDIA DGX-certified Managed Services Partner, Scan is ideally placed to be your trusted advisor for all your networking infrastructure needs. We offer the full range of NVIDIA Networking solutions, designed to work seamlessly alongside NVIDIA GPU-accelerated compute systems - explore the tabs below to learn more.

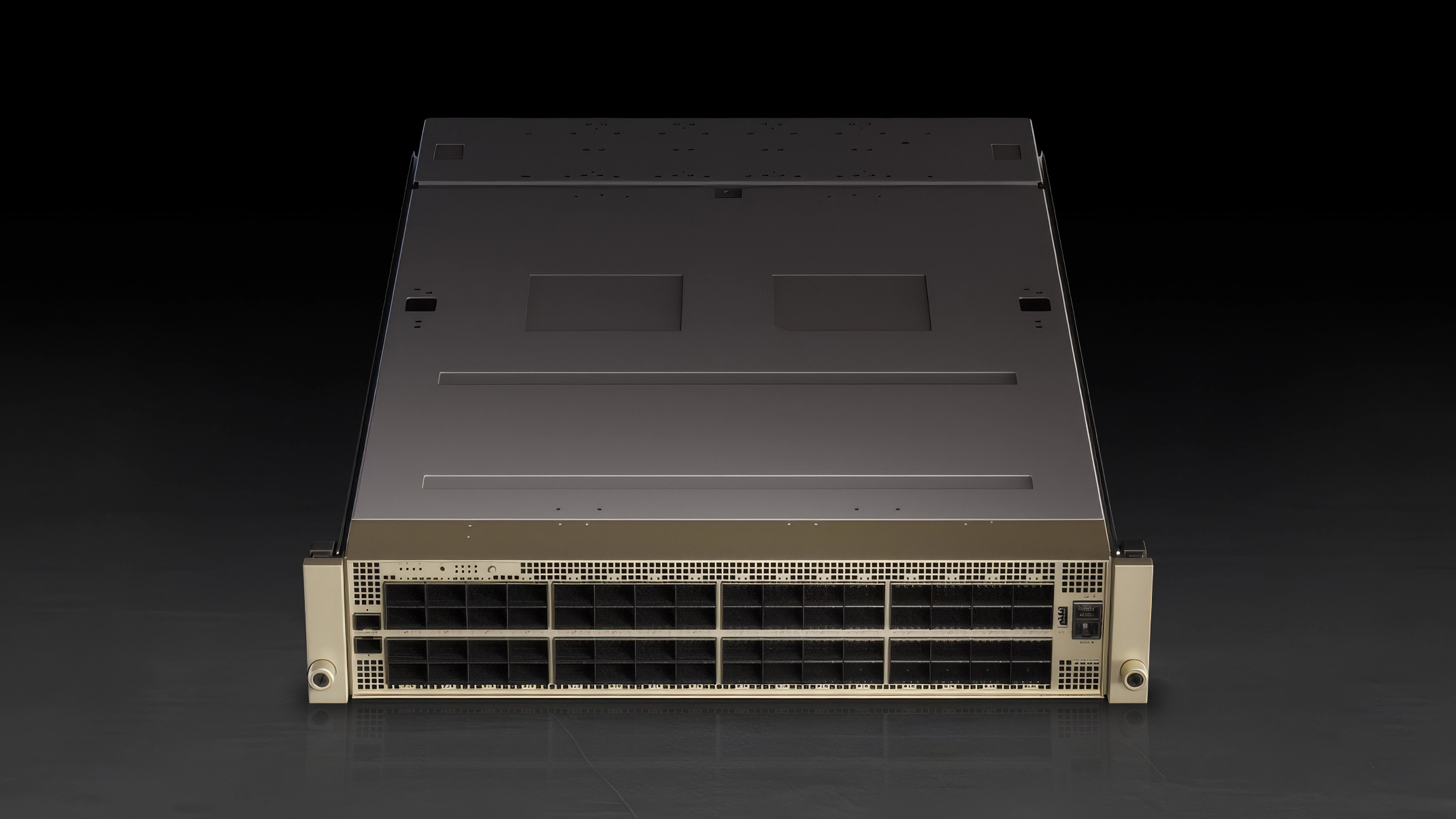

Quantum InfiniBand Switches

NVIDIA Quantum InfiniBand switches are the gold standard for demanding AI workloads, as both the hardware and InfiniBand network protocol have been designed from the ground up to provide lossless low-latency, high-throughput connections between InfiniBand-equipped GPU-accelerated servers and storage arrays. There are several generations of Quantum switches, each defined by greater speeds and more advanced feature sets.

Quantum X800 Switches

NVIDIA Quantum X800 switches deliver up to 144 ports of 800Gb/s end-to-end connectivity with ultra-low latency - purpose-built for trillion-parameter-scale agentic and physical AI models. These switches feature in-network compute acceleration technologies such as NVIDIA SHARP v4 (Scalable Hierarchical Aggregation and Reduction Protocol). SHARP offloads the collective communication operations used by AI training applications from CPUs and GPUs onto the Quantum InfiniBand network itself. Some models also feature co-packaged optics, which replace pluggable transceivers with silicon photonics integrated in the same package as the switch ASIC. Compared with pluggable transceivers, this innovation provides 3.5x better power efficiency and 10x higher network resiliency.
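To illustrate why offloading collectives to the switches helps, here is a conceptual Python model of in-network aggregation. It is a sketch of the general idea only, not SHARP's implementation or API, and the switch and GPU names are hypothetical. Each switch sums the partial results from its children and forwards a single vector upward. As a result, every link carries one vector however many GPUs sit beneath it, and the total is then handed back to every GPU.

```python
from typing import Dict, List, Tuple

Vector = List[float]
Topology = Dict[str, List[str]]  # switch name -> child switches or GPUs
LinkCounts = Dict[Tuple[str, str], int]  # (child, parent) -> vectors sent


def elementwise_sum(vectors: List[Vector]) -> Vector:
    """Add equal-length vectors element by element."""
    return [sum(values) for values in zip(*vectors)]


def aggregate_up(node: str, topology: Topology, gpu_data: Dict[str, Vector],
                 link_messages: LinkCounts) -> Vector:
    """Return the sum of every GPU vector below `node`.

    Each child forwards exactly one vector to its parent: a GPU sends its own
    data, and a switch sends the partial sum it has already aggregated.
    """
    if node in gpu_data:  # leaf: a GPU contributing its local gradients
        return gpu_data[node]
    partials = []
    for child in topology[node]:
        partials.append(aggregate_up(child, topology, gpu_data, link_messages))
        link = (child, node)
        link_messages[link] = link_messages.get(link, 0) + 1
    return elementwise_sum(partials)


def in_network_allreduce(root: str, topology: Topology,
                         gpu_data: Dict[str, Vector]
                         ) -> Tuple[Dict[str, Vector], LinkCounts]:
    """Reduce up the switch tree, then hand the total back to every GPU."""
    link_messages: LinkCounts = {}
    total = aggregate_up(root, topology, gpu_data, link_messages)
    # Broadcast phase: the root's result travels back down the same tree.
    results = {gpu: list(total) for gpu in gpu_data}
    return results, link_messages


if __name__ == "__main__":
    topology = {"spine": ["leaf1", "leaf2"],
                "leaf1": ["gpu0", "gpu1"],
                "leaf2": ["gpu2", "gpu3"]}
    gpu_data = {"gpu0": [1.0, 2.0], "gpu1": [3.0, 4.0],
                "gpu2": [5.0, 6.0], "gpu3": [7.0, 8.0]}
    results, links = in_network_allreduce("spine", topology, gpu_data)
    print(results["gpu0"])  # [16.0, 20.0]
    print(max(links.values()), "vector per link")
```

With host-based approaches, the GPUs themselves exchange and sum these vectors. In this model the switches do the summing, which is the work SHARP moves off the CPUs and GPUs.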

VIEW SWITCH RANGE

Quantum-2 Switches

NVIDIA Quantum-2 switches deliver up to 64 ports of 400Gb/s end-to-end connectivity with ultra-low latency - purpose-built for training and deploying LLMs and generative AI models. These switches feature in-network compute acceleration technologies including NVIDIA SHARP v3, which offloads the collective communication operations used by AI training applications from CPUs and GPUs onto the Quantum InfiniBand network itself. Both internally managed and externally managed versions are available.

VIEW SWITCH RANGE

Quantum Switches

NVIDIA Quantum switches deliver up to 40 ports of 200Gb/s end-to-end connectivity with low latency - purpose-built for training and deploying advanced AI models. These switches feature in-network compute acceleration technologies such as NVIDIA SHARP v2, which offloads the collective communication operations used by AI training applications from CPUs and GPUs onto the Quantum InfiniBand network itself. Both internally managed and externally managed versions are available.

VIEW SWITCH RANGE

NVIDIA Quantum Switch Specification Summary

| Family | QUANTUM X800 | QUANTUM X800 | QUANTUM X800 | QUANTUM X800 | QUANTUM-2 | QUANTUM-2 | QUANTUM | QUANTUM |
|---|---|---|---|---|---|---|---|---|
| Model | Q3200-RA | Q3400-RA | Q3401-RD | Q3450-LD | QM9700 | QM9790 | QM8700 | QM8790 |
| Ports | 36x OSFP | 72x OSFP | 72x OSFP | 144x MPO | 32x OSFP | 32x OSFP | 40x QSFP56 | 40x QSFP56 |
| Maximum Speed | 72x XDR 800G | 144x XDR 800G | 144x XDR 800G | 144x XDR 800G | 64x NDR 400G | 64x NDR 400G | 40x HDR 200G | 40x HDR 200G |
| OS | UFM | UFM | UFM | UFM | MLNX-OS | UFM | MLNX-OS | UFM |
| Management | External | External | External | External | Internal | External | Internal | External |
| Cooling | Air | Air | Air | Liquid / Air | Air | Air | Air | Air |
| Secure Boot | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✗ | ✗ |
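The "Ports" and "Maximum Speed" rows can be combined to estimate each switch's aggregate capacity. The sketch below copies its figures from the table. It assumes that the "Maximum Speed" count is the number of logical links and that some OSFP cages therefore carry two links (for example, 32 OSFP cages on the QM9700 providing 64 NDR links). The capacity is per direction, and bidirectional figures are twice as large. The class name is hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class QuantumSwitch:
    model: str
    cages: int            # physical ports, from the "Ports" row
    logical_ports: int    # links, from the "Maximum Speed" row
    port_speed_gbps: int  # speed of each logical link

    @property
    def ports_per_cage(self) -> int:
        """How many logical links each physical cage carries."""
        return self.logical_ports // self.cages

    @property
    def capacity_tbps(self) -> float:
        """Aggregate capacity in one direction, in Tb/s."""
        return self.logical_ports * self.port_speed_gbps / 1000


SWITCHES = [
    QuantumSwitch("Q3200-RA", 36, 72, 800),
    QuantumSwitch("Q3400-RA", 72, 144, 800),
    QuantumSwitch("Q3401-RD", 72, 144, 800),
    QuantumSwitch("Q3450-LD", 144, 144, 800),
    QuantumSwitch("QM9700", 32, 64, 400),
    QuantumSwitch("QM9790", 32, 64, 400),
    QuantumSwitch("QM8700", 40, 40, 200),
    QuantumSwitch("QM8790", 40, 40, 200),
]

if __name__ == "__main__":
    for s in SWITCHES:
        print(f"{s.model}: {s.ports_per_cage} link(s) per cage, "
              f"{s.capacity_tbps:.1f} Tb/s per direction")
```

For example, 64 x 400Gb/s gives 25.6Tb/s per direction for the QM9700, and 144 x 800Gb/s gives 115.2Tb/s for the 144-link Quantum X800 models.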

To learn more about InfiniBand speeds, port types and Quantum switch specifications, read our NVIDIA NETWORKING BUYERS GUIDE.

OS and Management

Internally managed models come configured with MLNX-OS, a switch operating system built for datacentres that run high-performance computing and cloud fabrics alongside enterprise storage. Networks built on MLNX-OS can scale to thousands of compute and storage nodes, with built-in monitoring and provisioning capabilities.

Externally managed models are designed to be controlled with NVIDIA Unified Fabric Manager (UFM). This platform transforms datacentre network management by combining enhanced, real-time network telemetry with AI-powered cyber intelligence and analytics to support scale-out, InfiniBand-connected infrastructures. UFM is also part of the software stack of NVIDIA DGX SuperPOD solutions, so it can serve as a single, universal management layer for your entire GPU-accelerated cluster.

Spectrum Ethernet Switches

NVIDIA Spectrum Ethernet switches are the gold standard for scaling network capacity and delivering low-latency, high-throughput connections between Ethernet-equipped GPU-accelerated servers and storage arrays for HPC and AI workloads. There are several generations of Spectrum switches, each defined by greater speeds and more advanced feature sets.

Spectrum-6 Switches

The NVIDIA Spectrum-6 platform delivers up to 512 ports of 800Gb/s end-to-end connectivity with ultra-low latency - purpose-built for trillion-parameter-scale agentic and physical AI models. These switches feature advanced technologies including multi-chassis link aggregation group (MLAG) for active/active L2 multi-pathing and 256-way equal-cost multi-path (ECMP) routing for load balancing and redundancy. The models will also feature co-packaged optics, which replace pluggable transceivers with silicon photonics integrated in the same package as the switch ASIC. Compared with pluggable transceivers, this innovation provides 3.5x better power efficiency and 10x higher network resiliency.

COMING SOON

Spectrum-4 Switches

NVIDIA Spectrum-4 switches deliver up to 64 ports of 800Gb/s of end-to-end connectivity with ultra-low latency - purpose-built for trillion-parameter-scale agentic and physical AI models. These switches feature advanced technologies including MLAG for active/active L2 multi-pathing and 256-way ECMP routing for load balancing and redundancy. Both internally managed and externally managed versions are available.
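ECMP load balancing is commonly implemented by hashing each packet's flow identifiers and using the result to pick one of the equal-cost paths. Below is a minimal Python sketch of that general technique. CRC32 stands in for the switch's hardware hash function, which is an assumption for illustration only and not how Spectrum ASICs are documented to hash. The uplink names are hypothetical. The example traffic uses UDP destination port 4791, which is the port RoCE v2 runs over.

```python
import zlib
from typing import List, NamedTuple


class Flow(NamedTuple):
    """The classic 5-tuple that identifies a single traffic flow."""
    src_ip: str
    dst_ip: str
    protocol: int  # e.g. 17 for UDP, which RoCE v2 runs over
    src_port: int
    dst_port: int


def select_next_hop(flow: Flow, next_hops: List[str]) -> str:
    """Pick one of N equal-cost next hops by hashing the flow's 5-tuple.

    Every packet of the same flow hashes to the same value, so a flow stays
    on one path (avoiding reordering) while different flows spread out.
    """
    if not next_hops:
        raise ValueError("at least one next hop is required")
    key = "|".join(str(field) for field in flow).encode()
    return next_hops[zlib.crc32(key) % len(next_hops)]


if __name__ == "__main__":
    # 256 equal-cost uplinks, matching the 256-way ECMP figure above
    uplinks = [f"uplink{i}" for i in range(256)]
    flows = [Flow("10.0.0.1", "10.0.1.1", 17, 49152 + i, 4791)
             for i in range(10_000)]
    used = {select_next_hop(f, uplinks) for f in flows}
    print(f"{len(flows)} flows spread over {len(used)} of {len(uplinks)} uplinks")
```

Hashing per flow rather than per packet keeps each flow's packets in order. The trade-off is that a few very large flows can still collide on the same path.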

VIEW SWITCH RANGE

Spectrum-3 Switches

NVIDIA Spectrum-3 switches deliver up to 32 ports of 400Gb/s of end-to-end connectivity with ultra-low latency - purpose-built for LLMs and generative AI models. These switches feature advanced technologies including MLAG for active/active L2 multi-pathing and 128-way ECMP routing for load balancing and redundancy. Both internally managed and externally managed versions are available.

VIEW SWITCH RANGE

NVIDIA Spectrum Switch Specification Summary

| Family | Spectrum-6 | Spectrum-6 | Spectrum-6 | Spectrum-4 | Spectrum-4 | Spectrum-4 | Spectrum-4 | Spectrum-3 | Spectrum-3 |
|---|---|---|---|---|---|---|---|---|---|
| Model | SN6800-LD | SN6810-LD | SN6600-LD | SN5610 | SN5600 | SN5600D | SN5400 | SN4700 | SN4600C |
| Ports | 512x MPO | 128x MPO | 64x OSFP | 64x OSFP | 64x OSFP | 64x OSFP | 64x QSFP56-DD | 32x QSFP56-DD | 64x QSFP28 |
| Maximum Speed | 512x 800GbE | 128x 800GbE | 128x 800GbE | 64x 800GbE | 64x 800GbE | 64x 800GbE | 64x 400GbE | 32x 400GbE | 64x 100GbE |
| OS | Cumulus Linux | Cumulus Linux | Cumulus Linux | Cumulus Linux | Cumulus Linux | Cumulus Linux | Cumulus Linux | Cumulus Linux | Cumulus Linux |
| Management | Internal / External | Internal / External | Internal / External | Internal / External | Internal / External | Internal / External | Internal / External | Internal / External | Internal / External |
| Cooling | Liquid / Air | Air | Air | Air | Air | Air | Air | Air | Air |
| Secure Boot | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✗ | ✗ |

To learn more about Ethernet speeds, port types and Spectrum switch specifications, read our NVIDIA NETWORKING BUYERS GUIDE.

OS and Management

All models come pre-configured with Cumulus Linux, which provides standard networking functions such as bridging and routing, plus advanced features including automation, orchestration, monitoring and analytics. It also includes NVIDIA Air, a tool that makes physical deployments seamless by validating and simplifying deployments and upgrades in a digital twin virtual network environment.

The switches can also be configured with alternative OS software such as SONiC. Its containerised design makes it flexible and customisable, allowing customers to combine and manage SONiC and non-SONiC switches within the same networking fabric. NVIDIA's SONiC offering removes distribution limitations and enables enterprises to take full advantage of open networking, backed by NVIDIA expertise and support.

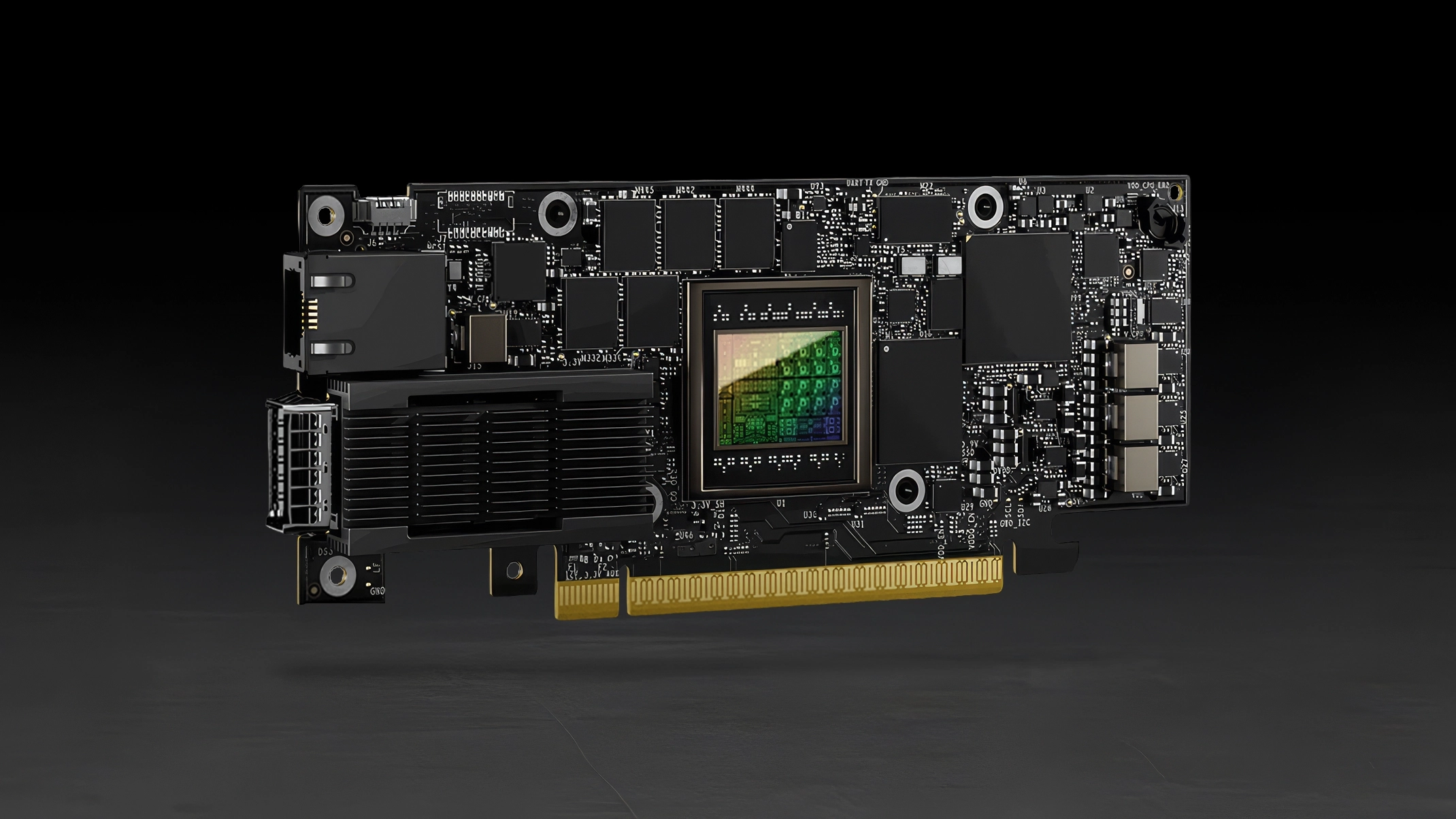

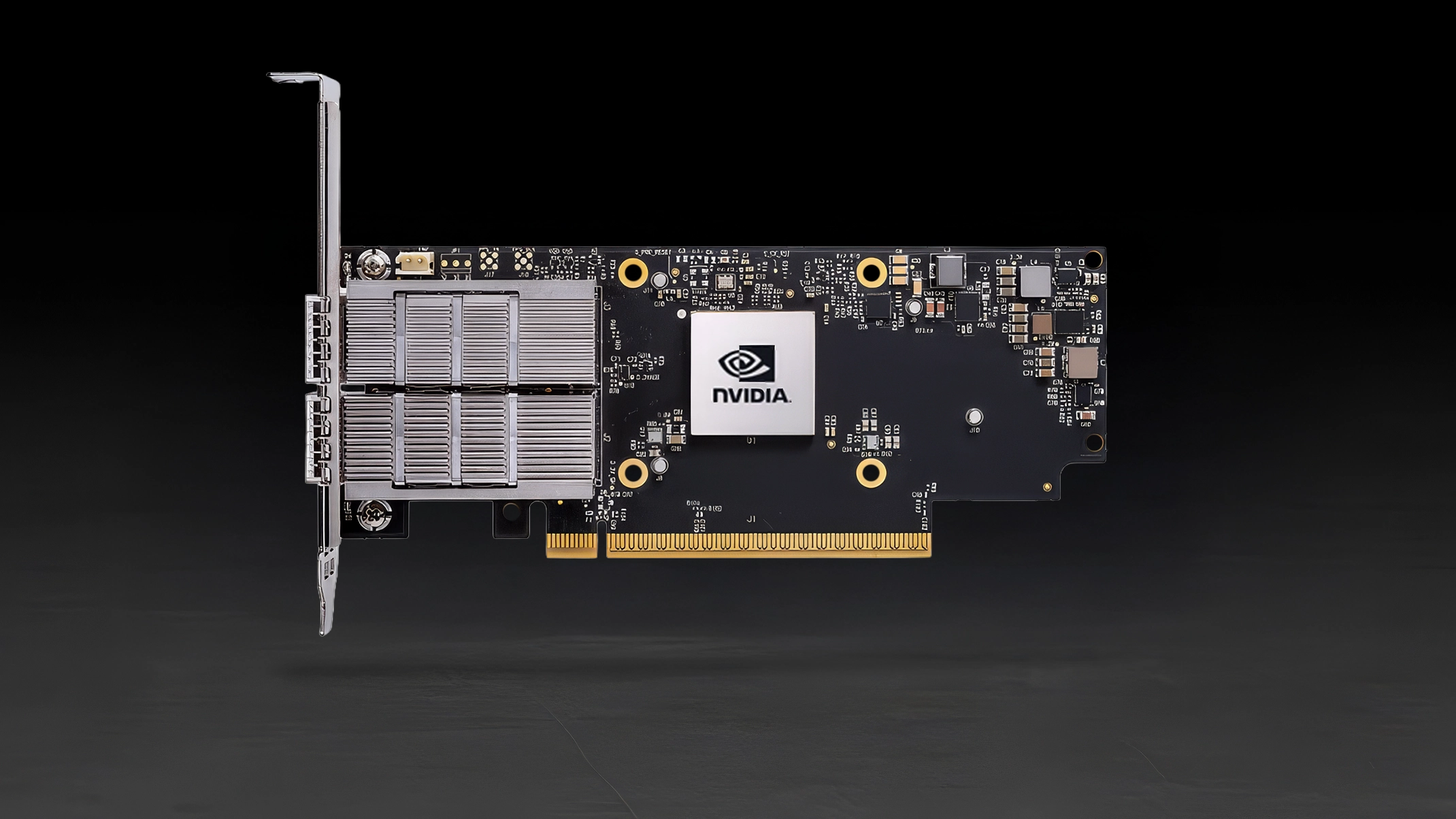

Smart NICs, Super NICs and DPUs

NVIDIA ConnectX Super NICs and Smart NICs, alongside BlueField DPUs, provide network connectivity for your GPU-accelerated servers and AI-optimised storage arrays. They are designed to match the performance and feature sets of NVIDIA Quantum and Spectrum switches and are available in both InfiniBand and Ethernet versions. They support remote direct memory access (RDMA) and RDMA over Converged Ethernet (RoCE), which minimise latency by taking the CPU out of the data path. Learn more about the different network cards by watching the video below.

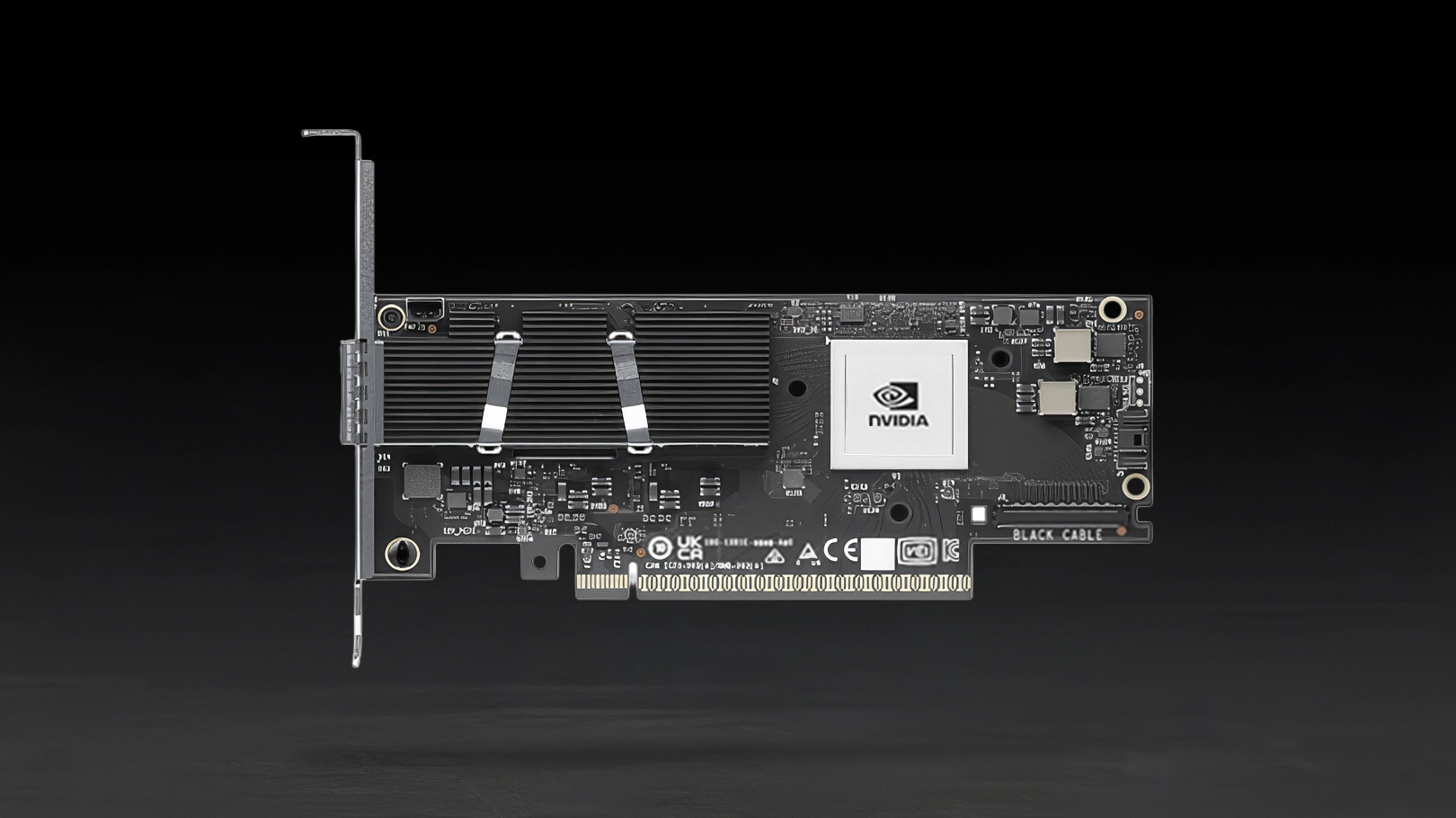

BlueField DPUs

BlueField DPUs offload, accelerate and isolate software-defined networking, storage, security and management functions. This significantly enhances datacentre performance and efficiency. It also creates a secure, zero-trust environment that streamlines operations and reduces the total cost of ownership.

MAKE A DPU ENQUIRY

ConnectX Super NICs

Super NICs are specialised cards optimised to accelerate network traffic for AI workloads. They are built around a high-performance network ASIC, which makes them more streamlined and energy efficient than a DPU. They prioritise GPU-to-GPU communication while minimising CPU overhead and latency.

VIEW SUPER NIC RANGE

ConnectX Smart NICs

NVIDIA Smart NICs deliver speeds of up to 400Gb/s. They use RDMA or RoCE to remove the latency usually introduced by the CPU, system memory and operating system.

VIEW SMART NIC RANGE

NVIDIA BlueField and ConnectX Specification Summary

| Family | BlueField | BlueField | ConnectX | ConnectX | ConnectX | ConnectX |
|---|---|---|---|---|---|---|
| Model | BlueField-4 | BlueField-3 | ConnectX-9 | ConnectX-8 | ConnectX-7 | ConnectX-6 |
| Protocols | InfiniBand / Ethernet | InfiniBand / Ethernet | InfiniBand / Ethernet | InfiniBand / Ethernet | InfiniBand / Ethernet | InfiniBand / Ethernet |
| Ports | 2x OSFP | 2x OSFP | 1x OSFP / 2x QSFP | 1x OSFP / 2x QSFP | 4x QSFP | 2x QSFP |
| Maximum Speed | 800Gb/s | 400Gb/s | 800Gb/s* | 800Gb/s | 400Gb/s | 200Gb/s |
| RDMA / RoCE | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ |
| GPUDirect | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ |
| PCIe | Gen6 | Gen5 | Gen6 | Gen6 | Gen5 | Gen4 |

*1.6Tb/s throughput will be available only in a quad ConnectX-9 card for Rubin GPU systems.
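To put these line rates in context, it helps to estimate how quickly a card can move a training dataset. The sketch below computes the ideal transfer time as bits divided by link speed. The `efficiency` parameter is a hypothetical knob for protocol overhead and congestion, not a measured value. The 1 TB dataset (decimal, 10^12 bytes) is an assumed example.

```python
def transfer_time_seconds(dataset_bytes: float, link_gbps: float,
                          efficiency: float = 1.0) -> float:
    """Seconds to move `dataset_bytes` over one link of `link_gbps` Gb/s.

    `efficiency` is an assumed fraction of line rate actually achieved;
    1.0 means ideal line rate with no overhead.
    """
    if dataset_bytes < 0 or link_gbps <= 0:
        raise ValueError("dataset size must be >= 0 and link speed > 0")
    if not 0 < efficiency <= 1:
        raise ValueError("efficiency must be in (0, 1]")
    bits = dataset_bytes * 8  # network speeds are quoted in bits, not bytes
    return bits / (link_gbps * 1e9 * efficiency)


if __name__ == "__main__":
    one_terabyte = 1e12  # decimal TB
    for card, speed in [("ConnectX-6", 200), ("ConnectX-7", 400),
                        ("ConnectX-8", 800)]:
        seconds = transfer_time_seconds(one_terabyte, speed)
        print(f"{card} @ {speed}Gb/s: {seconds:.0f} s per TB")
```

At ideal line rate, 1 TB takes 40 s at 200Gb/s, 20 s at 400Gb/s and 10 s at 800Gb/s. The factor of 8 between bytes and bits is why Gb/s and GB/s figures should never be mixed.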

To learn more about BlueField and ConnectX network card features, speeds, port types and switch compatibility, read our NVIDIA NETWORKING BUYERS GUIDE.

Interconnects

The NVIDIA LinkX product family of cables and transceivers provides connectivity options for 100-800Gb/s InfiniBand and Ethernet links. These run upward between NVIDIA Quantum and Spectrum switches for switch-to-switch applications, and downward from top-of-rack switches to ConnectX network adapters and BlueField DPUs in compute servers and storage subsystems.

DAC & Splitter Cables

LinkX Direct Attach Copper (DAC) cables are the lowest-cost high-speed links for use inside racks, reaching up to 3 metres with 100G-PAM4 modulation. DACs add near-zero latency and consume almost no power, making them ideal for connecting switches to CPU, GPU and storage subsystems.

VIEW LINKX CABLES RANGE

Active Optical Cables

LinkX Active Optical Cables (AOCs) are the lowest-cost optical interconnect. Their fibres are bonded inside the connectors, so they are deployed much like DACs but reach up to 100 metres. AOCs are available at 25Gb/s to 800Gb/s data rates and are widely used in HPC, hyperscale and enterprise networking and in DGX AI appliances. NVIDIA Networking has the longest history in the marketplace of building them.

VIEW LINKX CABLES RANGE

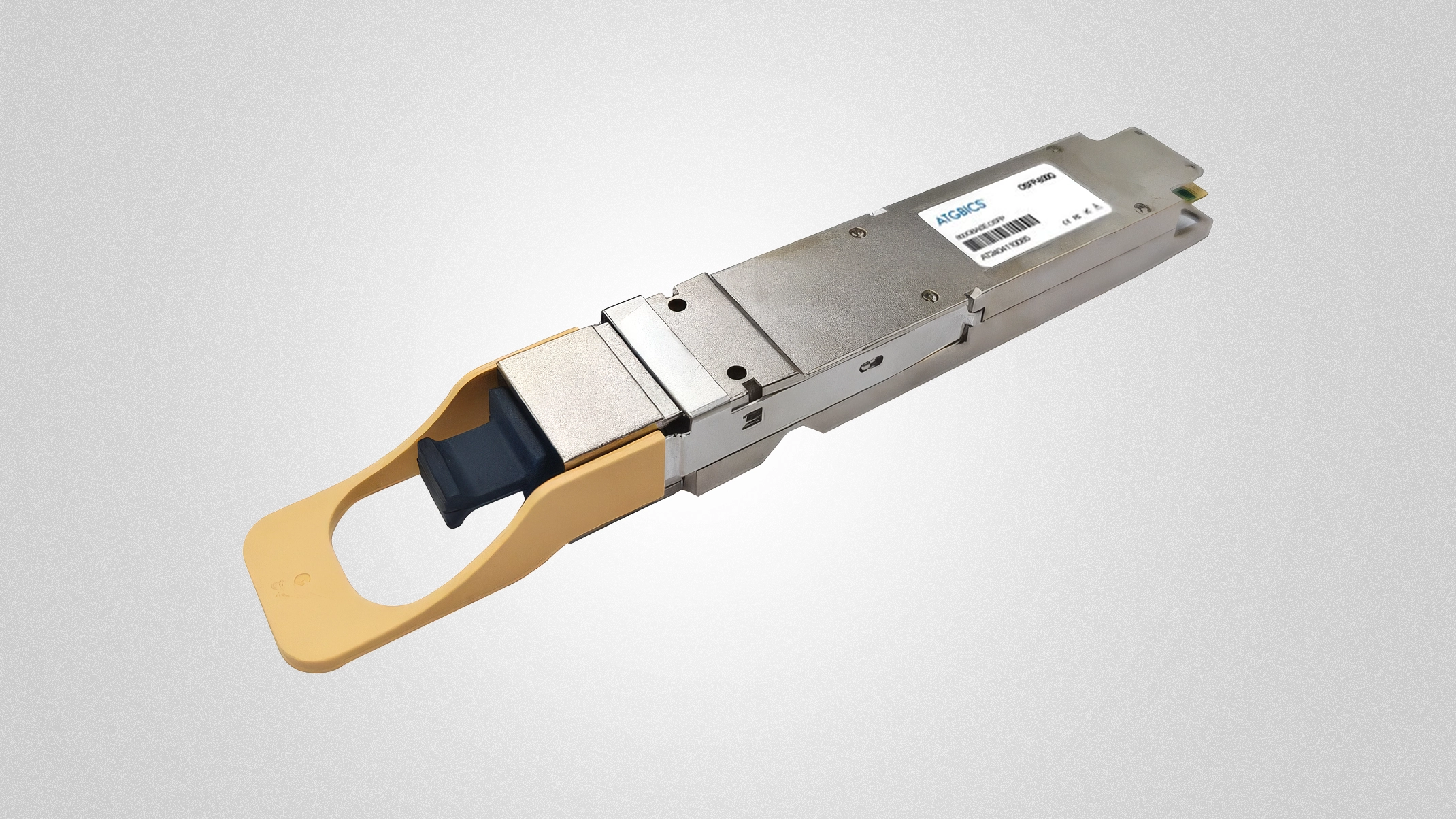

Optical Transceivers

LinkX transceivers include multimode and single-mode modules from 1Gb/s to 800Gb/s in QSFP28, QSFP56, QSFP112 and OSFP form factors. They offer 2km, 10km and 40km reaches with both 50G- and 100G-PAM4 modulation. The same transceivers are used in one-piece LinkX AOCs, DAC cables and splitters.
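The reach figures on this page suggest a simple rule of thumb: choose the cheapest LinkX option that covers the cable run. The sketch below encodes that rule. It assumes cost rises from DAC to AOC to transceivers, following the page's description of DACs as the lowest-cost links and AOCs as the lowest-cost optical option. The thresholds are the page's quoted maximums. Actual reach depends on speed, modulation and fibre type, so always check the specific part's datasheet. The function name is hypothetical.

```python
from typing import Tuple

# Maximum reaches quoted on this page (assumed rule-of-thumb limits).
DAC_MAX_M = 3      # DAC reach quoted for 100G-PAM4
AOC_MAX_M = 100
TRANSCEIVER_REACHES_KM: Tuple[int, ...] = (2, 10, 40)


def suggest_interconnect(distance_m: float) -> str:
    """Return the lowest-cost option whose quoted reach covers the run."""
    if distance_m <= 0:
        raise ValueError("distance must be positive")
    if distance_m <= DAC_MAX_M:
        return "DAC"  # passive copper, typically inside the rack
    if distance_m <= AOC_MAX_M:
        return "AOC"  # fibre bonded into the connectors
    for reach_km in TRANSCEIVER_REACHES_KM:  # shortest sufficient reach
        if distance_m <= reach_km * 1000:
            return f"transceiver ({reach_km}km reach)"
    raise ValueError("no option on this page reaches beyond 40km")


if __name__ == "__main__":
    for run in (2, 30, 800, 8_000, 25_000):
        print(f"{run} m -> {suggest_interconnect(run)}")
```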

VIEW LINKX CABLES RANGE

NVIDIA LinkX Interconnect Compatibility

| Speed | SFP56 | QSFP+ | QSFP28 | QSFP56 | QSFP56-DD | QSFP112 | OSFP | MPO |
|---|---|---|---|---|---|---|---|---|
| 50Gb/s | ✓ | ✗ | ✓ | ✓ | ✓ | ✓ | ✓ | ✗ |
| 100Gb/s | ✗ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✗ |
| 200Gb/s | ✗ | ✗ | ✗ | ✓ | ✓ | ✓ | ✓ | ✗ |
| 400Gb/s | ✗ | ✗ | ✗ | ✗ | ✓ | ✓ | ✓ | ✗ |
| 800Gb/s | ✗ | ✗ | ✗ | ✗ | ✗ | ✗ | ✓ | ✓ |
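When planning a build, it can be handy to query the compatibility table programmatically in both directions. The sketch below encodes the ticks from the table as Python sets. The lookup function names are hypothetical, and the data is only as current as the table itself.

```python
from typing import Dict, List, Set

# Column order of the compatibility table above.
FORM_FACTORS = ["SFP56", "QSFP+", "QSFP28", "QSFP56",
                "QSFP56-DD", "QSFP112", "OSFP", "MPO"]

# Speed in Gb/s -> form factors ticked for that speed in the table.
COMPATIBILITY: Dict[int, Set[str]] = {
    50: {"SFP56", "QSFP28", "QSFP56", "QSFP56-DD", "QSFP112", "OSFP"},
    100: {"QSFP+", "QSFP28", "QSFP56", "QSFP56-DD", "QSFP112", "OSFP"},
    200: {"QSFP56", "QSFP56-DD", "QSFP112", "OSFP"},
    400: {"QSFP56-DD", "QSFP112", "OSFP"},
    800: {"OSFP", "MPO"},
}


def form_factors_for(speed_gbps: int) -> List[str]:
    """Form factors listed for a speed, in table column order."""
    supported = COMPATIBILITY.get(speed_gbps, set())
    return [ff for ff in FORM_FACTORS if ff in supported]


def speeds_for(form_factor: str) -> List[int]:
    """Speeds (ascending) at which a form factor is listed."""
    return [speed for speed, supported in sorted(COMPATIBILITY.items())
            if form_factor in supported]


if __name__ == "__main__":
    print(form_factors_for(400))  # ['QSFP56-DD', 'QSFP112', 'OSFP']
    print(speeds_for("QSFP56"))   # [50, 100, 200]
```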

To learn more about network speeds, port types and compatible NVIDIA switches, read our NVIDIA NETWORKING BUYERS GUIDE.

Professional Services

As your network infrastructure grows and evolves, our team of professional services consultants are on hand to help with advice, network design or health checks, installation and configuration.

LEARN MORE

UPS

As your compute hardware or network infrastructure grows and evolves, it is essential to ensure your critical hardware remains protected by adequate uninterruptible power supply (UPS) facilities.

LEARN MORE

Let's Chat

Contact our AI experts to discuss your projects or infrastructure needs on 01204 474210 or at [email protected]