The Oxford Robotics Institute (ORI) is made up of groups of researchers, engineers and students all driven to change what robots can do for us. Industrial collaboration lies at the heart of its research agenda, and ORI membership is the vehicle it uses to achieve this, accelerating knowledge transfer from the ORI to its industrial members, currently including BP, Oxa, Accenture, Honda, L3Harris, Navtech Radar and Scan Computers.

The ORI's current research interests are diverse, ranging from flying to grasping, inspection to running, haptics to driving, and exploring to planning. This spectrum of interests leads to research across a broad span of technical topics, including machine learning and AI, computer vision, fabrication, multispectral sensing, perception and systems engineering.

Background

The ORI develops machine learning models that improve how a range of quadruped robots and articulated arms act and interact, in order to deliver better systems for use in healthcare and manufacturing. The three projects highlighted here are a selection of the work being undertaken by ORI researchers Jun Yamada and Alexander Mitchell of the Applied Artificial Intelligence Lab. The teams use GPU-accelerated 3XS AI Development Workstations connected either directly to a robot or to a digital twin simulation environment, where training takes place before deployment on robotic hardware.

The systems are accelerated by NVIDIA RTX GPUs, running software from the NVIDIA GPU Cloud (NGC) and NVIDIA AI Enterprise (NVAIE) platforms. These platforms provide pre-trained models and software optimised for NVIDIA GPUs, helping to accelerate workloads and shorten time to results.

The Projects

Each of the three projects is discussed in further detail below.

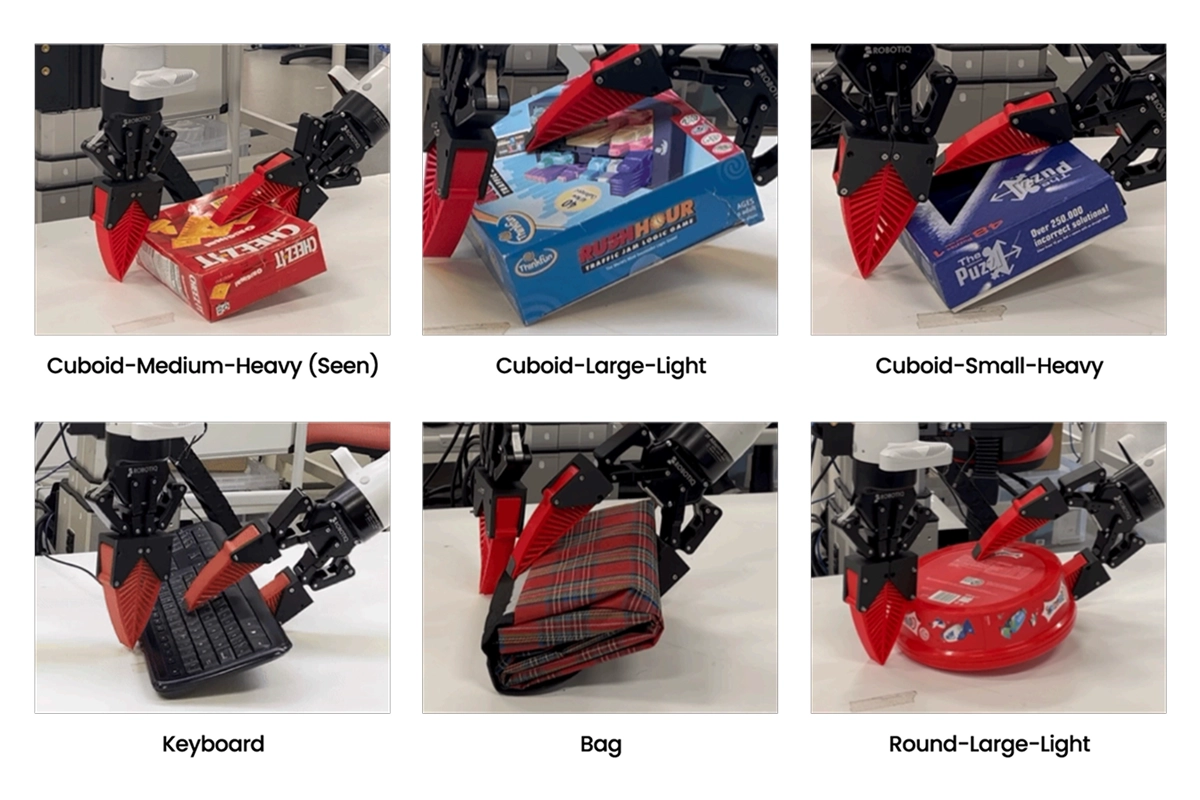

COMBO-Grasp

COMBO-Grasp (Constraint-based Manipulation for Bimanual Occluded Grasping) is a robotic system designed to help two-arm robots grasp objects that are difficult to reach because of their position, such as items lying flat on a table. Inspired by the way humans use both hands to reposition and pick up objects, COMBO-Grasp trains a robot to coordinate its arms in a similar way: one arm holds and stabilises the object, while the other moves it into a position that makes it easier to grasp. Rather than requiring thousands of expert demonstrations collected by humans, the robot acquires these skills autonomously, and it improves its coordination using feedback from its own performance. COMBO-Grasp has been successfully tested in both simulated and real-world environments, demonstrating its ability to handle a wide variety of objects, even those it hasn't seen before.
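As a deliberately simplified illustration of the two-arm coordination idea, the Python sketch below has a hypothetical constraint policy propose candidate stabilising poses, scores each with a hypothetical value estimate of how easy the resulting grasp would be, and keeps the best one. Every function name, offset and range here is an assumption made for illustration. COMBO-Grasp learns its policies and improves their coordination from its own performance feedback during training, which this inference-time toy does not attempt.

```python
import random


def constraint_policy(object_pose, rng, n_candidates=16):
    """Hypothetical constraint policy: propose stabilising (x, y) poses near the object."""
    x, y = object_pose
    return [
        (x + rng.uniform(-0.1, 0.1), y + rng.uniform(-0.1, 0.1))
        for _ in range(n_candidates)
    ]


def grasp_value(stabilising_pose, object_pose):
    """Hypothetical value estimate: higher when the support sits 5 cm behind the object."""
    ideal_x, ideal_y = object_pose[0] - 0.05, object_pose[1]
    dx, dy = stabilising_pose[0] - ideal_x, stabilising_pose[1] - ideal_y
    return -(dx * dx + dy * dy)


def choose_stabilising_pose(object_pose, seed=0):
    """Pick the proposed stabilising pose that the value estimate rates highest."""
    rng = random.Random(seed)
    candidates = constraint_policy(object_pose, rng)
    return max(candidates, key=lambda pose: grasp_value(pose, object_pose))


best = choose_stabilising_pose((0.4, 0.0))
```

In a learned system both the proposal and the scoring would be neural networks; the selection step is kept explicit here only to make the division of labour between the two arms visible.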

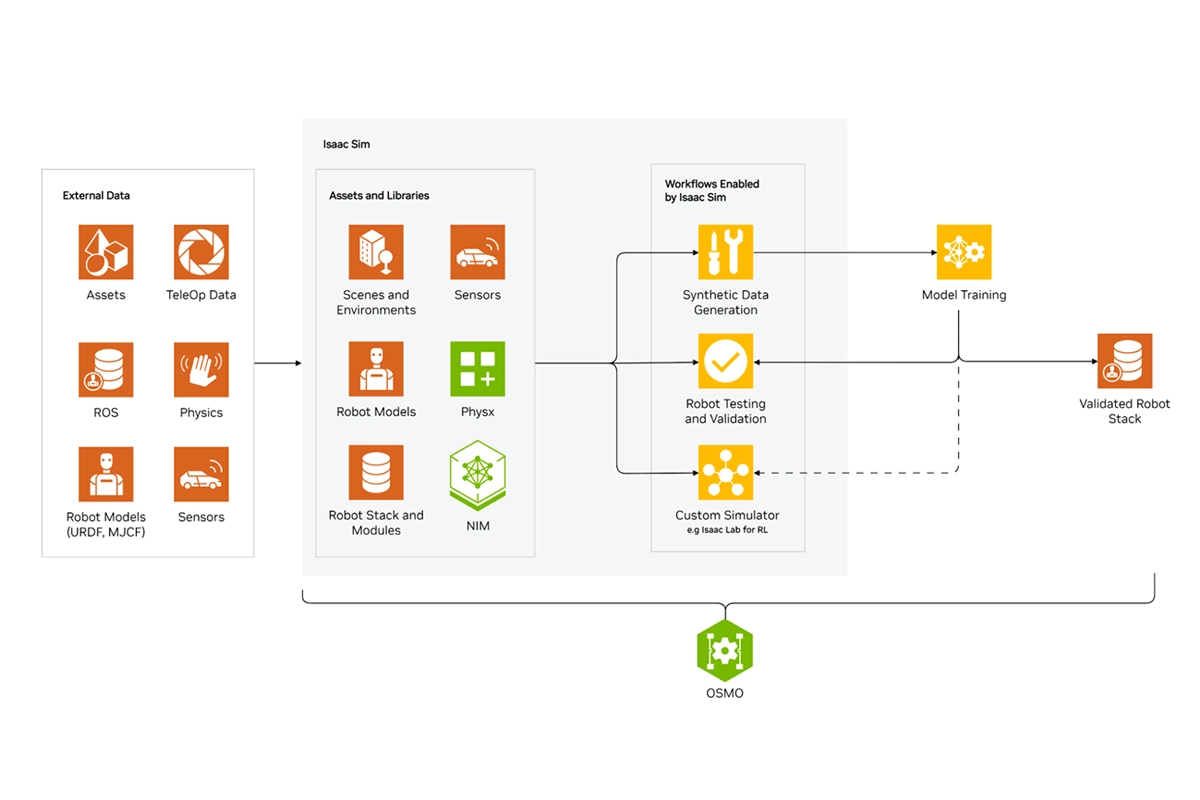

The system comprises two Kinova Gen3 robotic arms mounted perpendicularly to the main body, each equipped with a Robotiq 2F-85 gripper fitted with softer fingertips to improve grasping performance. Visual observations are captured by a third-person RealSense L515 camera positioned in front of the robot. The COMBO-Grasp algorithm was trained in NVIDIA Isaac Sim, a reference application built on NVIDIA Omniverse that enables developers to design, simulate and test AI-driven robots in physically based virtual environments. At the core of Isaac Sim is the simulation itself: a high-fidelity, GPU-based PhysX engine capable of supporting multi-sensor RTX rendering at industrial scale. Isaac Sim's direct access to the GPU enables it to simulate a range of sensors, including cameras, LiDAR and contact sensors.

COMBO-Grasp effectively tackles occluded grasping tasks for both seen and unseen objects, but it has some limitations. The system struggles with unseen objects whose shapes differ significantly from those it was trained on, which could be addressed by training the policies on a more diverse set of geometries.

It also faces challenges with round objects in the real world, which are difficult to stabilise during occluded grasping; further development of the algorithm by the ORI team could mitigate this issue.

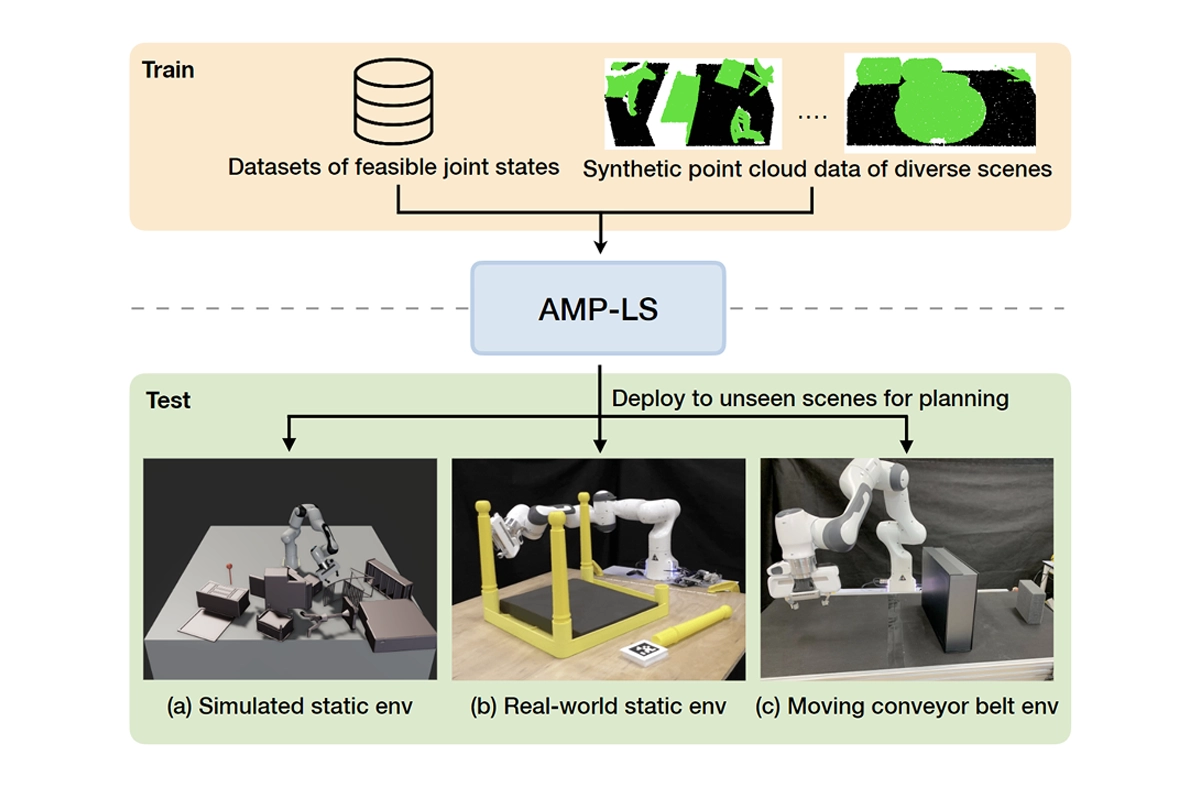

Leveraging Scene Embeddings for Gradient-Based Motion Planning in Latent Space

In this work, the ORI team present AMP-LS (Activation Maximisation Planning in Latent Space), a novel motion planning approach that enables robots to operate efficiently in complex and dynamic environments. Traditional motion planning methods often struggle to plan quickly enough for real-time use, particularly in cluttered or changing settings. AMP-LS addresses this by learning from a diverse set of simulated scenes and kinematically feasible robot poses, rather than relying on expert demonstrations, which allows the robot to reason about obstacle avoidance in novel environments. It plans over a learned representation of both the robot's movements and its surroundings, enabling fast and flexible trajectory planning; crucially, it is fast enough to replan in real time, so the robot can adapt its path as targets or obstacles move. The team demonstrate that their approach achieves comparable or better performance than traditional motion planning methods, both in simulation and in real-world scenarios, while planning significantly faster.
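The core idea of gradient-based planning in a latent space can be sketched in a few lines. The example below is a toy under stated assumptions, not AMP-LS itself: a fixed linear map (`DECODER`) stands in for a trained VAE decoder, a two-link planar arm stands in for the robot, and a hand-written penalty (`collision_score`) stands in for a learned collision predictor that only checks the end-effector point. Gradient descent on the latent code `z` minimises the distance between the decoded end-effector position and a target, plus the collision cost. Finite-difference gradients are used for brevity, whereas a real system would backpropagate through its networks.

```python
import numpy as np

LINK_LENGTHS = np.array([1.0, 1.0])  # hypothetical planar 2-link arm

# Hypothetical stand-in for a trained VAE decoder: a fixed linear map z -> joint angles q.
DECODER = np.array([[1.0, 0.2], [-0.3, 1.0]])


def decode(z):
    return DECODER @ z


def forward_kinematics(q):
    """End-effector (x, y) of the planar 2-link arm for joint angles q."""
    x = LINK_LENGTHS[0] * np.cos(q[0]) + LINK_LENGTHS[1] * np.cos(q[0] + q[1])
    y = LINK_LENGTHS[0] * np.sin(q[0]) + LINK_LENGTHS[1] * np.sin(q[0] + q[1])
    return np.array([x, y])


def collision_score(p, obstacle, radius):
    """Hypothetical collision predictor: smooth penalty inside the obstacle radius."""
    d = np.linalg.norm(p - obstacle)
    return max(0.0, radius - d) ** 2


def cost(z, target, obstacle, radius, w_col=10.0):
    """Goal-reaching cost plus weighted collision cost, both evaluated on the decoded pose."""
    p = forward_kinematics(decode(z))
    return np.sum((p - target) ** 2) + w_col * collision_score(p, obstacle, radius)


def numerical_grad(f, z, eps=1e-5):
    """Central finite differences (a real system would use automatic differentiation)."""
    g = np.zeros_like(z)
    for i in range(z.size):
        dz = np.zeros_like(z)
        dz[i] = eps
        g[i] = (f(z + dz) - f(z - dz)) / (2 * eps)
    return g


def plan_in_latent_space(z0, target, obstacle, radius, lr=0.05, steps=500):
    """Descend the cost in latent space; each decoded iterate is a waypoint of the path."""
    z = z0.copy()
    path = [decode(z)]
    f = lambda zz: cost(zz, target, obstacle, radius)
    for _ in range(steps):
        z = z - lr * numerical_grad(f, z)
        path.append(decode(z))
    return z, path


z0 = np.array([0.5, 0.5])
target = np.array([1.2, 0.8])
obstacle, radius = np.array([-1.5, -1.5]), 0.3
z_final, path = plan_in_latent_space(z0, target, obstacle, radius)
```

Because the optimisation happens over `z` rather than directly over joint angles, every iterate is decoded through the model of feasible configurations; that is the property a learned latent space is meant to provide, which the linear stand-in here only imitates.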

To train the model, the team used a variational autoencoder (VAE) whose encoder and decoder each consist of three fully connected hidden layers of 512 units with exponential linear unit (ELU) activation functions. The VAE was trained on kinematically feasible robot joint configurations, checked for self-collision using the Flexible Collision Library (FCL). It was trained with a batch size of 256 for about 2 million iterations using the Adam optimiser on the AI Development Workstation's NVIDIA GPU. Because valid configurations are cheap to produce, they were generated on the fly throughout training; in total, the model was exposed to around 500 million configurations.
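A minimal NumPy sketch of the architecture and data pipeline described above follows. The 512-unit hidden layers, ELU activations, batch size of 256 and roughly 2 million iterations come from the text; the 7-dimensional joint space, the latent size, the joint limits and `is_self_collision_free` (a crude stand-in for an FCL self-collision query, not FCL's API) are hypothetical. It shows only the forward pass and loss; training with Adam would normally be done in an autodiff framework. The final lines check the arithmetic behind "around 500 million configurations".

```python
import numpy as np

JOINT_DIM = 7                     # hypothetical: a 7-DoF arm
LATENT_DIM = 7                    # hypothetical latent size
HIDDEN = 512                      # from the text: 512 units per hidden layer
BATCH_SIZE = 256                  # from the text
TRAINING_ITERATIONS = 2_000_000   # from the text ("about 2 million")
JOINT_LIMIT = np.pi               # hypothetical symmetric joint limits (rad)

rng = np.random.default_rng(0)


def elu(x, alpha=1.0):
    """Exponential linear unit; np.minimum avoids overflow in the unused branch."""
    return np.where(x > 0, x, alpha * (np.exp(np.minimum(x, 0.0)) - 1.0))


def init_mlp(sizes):
    """He-initialised weights and zero biases for a fully connected network."""
    return [
        (rng.normal(0.0, np.sqrt(2.0 / m), size=(m, n)), np.zeros(n))
        for m, n in zip(sizes[:-1], sizes[1:])
    ]


def mlp(params, x):
    """Apply each layer in turn, with ELU after every hidden layer."""
    for i, (w, b) in enumerate(params):
        x = x @ w + b
        if i < len(params) - 1:
            x = elu(x)
    return x


# Three hidden layers of 512 units in both encoder and decoder.
encoder = init_mlp([JOINT_DIM, HIDDEN, HIDDEN, HIDDEN, 2 * LATENT_DIM])  # -> mean, log-variance
decoder = init_mlp([LATENT_DIM, HIDDEN, HIDDEN, HIDDEN, JOINT_DIM])


def vae_forward(q):
    """Encode, sample z with the reparameterisation trick, decode, and return the loss."""
    stats = mlp(encoder, q)
    mu, log_var = stats[:, :LATENT_DIM], stats[:, LATENT_DIM:]
    z = mu + np.exp(0.5 * log_var) * rng.standard_normal(mu.shape)
    q_hat = mlp(decoder, z)
    recon = np.mean(np.sum((q_hat - q) ** 2, axis=1))
    kl = np.mean(-0.5 * np.sum(1.0 + log_var - mu**2 - np.exp(log_var), axis=1))
    return q_hat, recon + kl


def is_self_collision_free(q):
    """Hypothetical stand-in for an FCL self-collision query (one bool per row)."""
    return np.all(np.abs(np.diff(q, axis=1)) < 2.5, axis=1)


def sample_valid_batch(batch_size=BATCH_SIZE):
    """Rejection-sample fresh, valid configurations on the fly for one batch."""
    accepted, count = [], 0
    while count < batch_size:
        q = rng.uniform(-JOINT_LIMIT, JOINT_LIMIT, size=(batch_size, JOINT_DIM))
        keep = q[is_self_collision_free(q)]
        accepted.append(keep)
        count += len(keep)
    return np.concatenate(accepted)[:batch_size]


batch = sample_valid_batch()
q_hat, loss = vae_forward(batch)

samples_seen = TRAINING_ITERATIONS * BATCH_SIZE
print(f"{samples_seen:,}")  # 512,000,000
```

Drawing a fresh batch with `sample_valid_batch()` at every iteration is what makes on-the-fly generation practical: no dataset has to be stored, and 2 million iterations of 256 samples gives the roughly 500 million configurations quoted above.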

To further demonstrate the reactive motion that AMP-LS makes possible, the team evaluated the method on a task in which the robot had to avoid moving obstacles and reach a target object on a conveyor, in both simulated and real-world environments. Obstacles of different sizes were randomly generated, and both the obstacle and the target object were placed at random positions on the conveyor belt. Thanks to the method's fast planning, the robot successfully avoided the obstacle and reached the moving target in 28 of 30 trials (a 93.3% success rate), as illustrated in the accompanying image.
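Fast planning matters here because the planner has to run again on every control cycle as the target and obstacle move. The sketch below illustrates that receding-horizon pattern under simplifying assumptions: it optimises a 2D end-effector position directly rather than a latent code, warm-starts each cycle from the current position, takes a handful of gradient steps, and uses hypothetical belt speeds, gains, start positions and obstacle sizes. It is not the ORI implementation.

```python
import numpy as np


def obstacle_penalty_grad(p, obstacle, radius):
    """Gradient of the penalty (radius - d)^2, active only within `radius` of the obstacle."""
    offset = p - obstacle
    d = np.linalg.norm(offset)
    if d >= radius or d == 0.0:
        return np.zeros_like(p)
    return -2.0 * (radius - d) * offset / d


def replan_step(p, target, obstacle, radius, lr=0.1, inner_steps=5, w_col=20.0):
    """Warm-start from the current position and take a few gradient steps on the cost."""
    for _ in range(inner_steps):
        grad = 2.0 * (p - target) + w_col * obstacle_penalty_grad(p, obstacle, radius)
        p = p - lr * grad
    return p


def run_conveyor_episode(ticks=200, dt=0.05, belt_speed=0.2):
    """Replan on every control tick while the target and obstacle ride the belt (x-axis)."""
    p = np.array([0.0, 1.0])        # hypothetical starting end-effector position
    target = np.array([-1.0, 0.0])  # hypothetical target start on the belt
    obstacle = np.array([-0.5, 0.0])
    radius = 0.2
    step = np.array([belt_speed * dt, 0.0])
    for _ in range(ticks):
        target = target + step
        obstacle = obstacle + step
        p = replan_step(p, target, obstacle, radius)
    return p, target


final_p, final_target = run_conveyor_episode()
```

With these settings the end-effector settles a few millimetres behind the moving target; the lag shrinks as more optimisation steps fit into each cycle, which is why planning speed translates directly into tracking performance.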

The ORI team demonstrated that their learning-based motion planning approach generalises well to unseen obstacles in complex environments. AMP-LS builds on previous LSPP (Latent Space Path Planning) methods and inherits a number of their desirable properties, including a collision predictor.

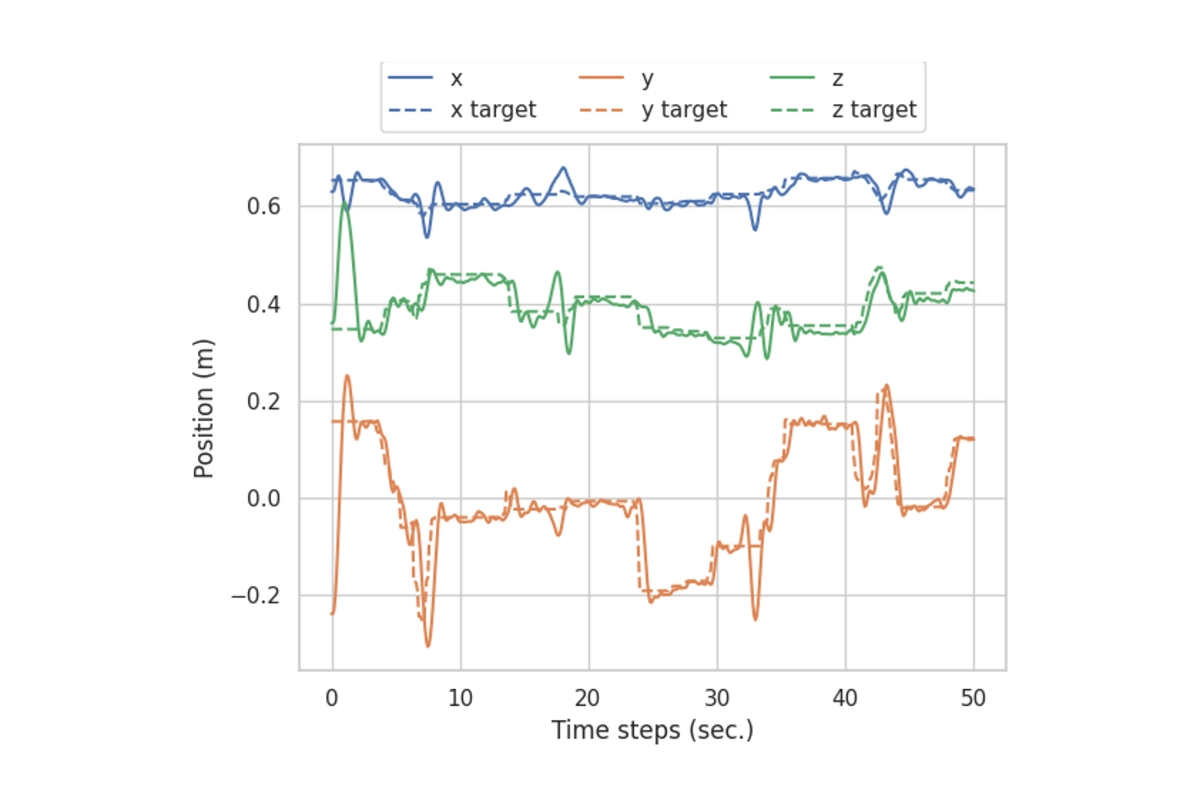

Task and Joint Space Dual-Arm Compliant Control

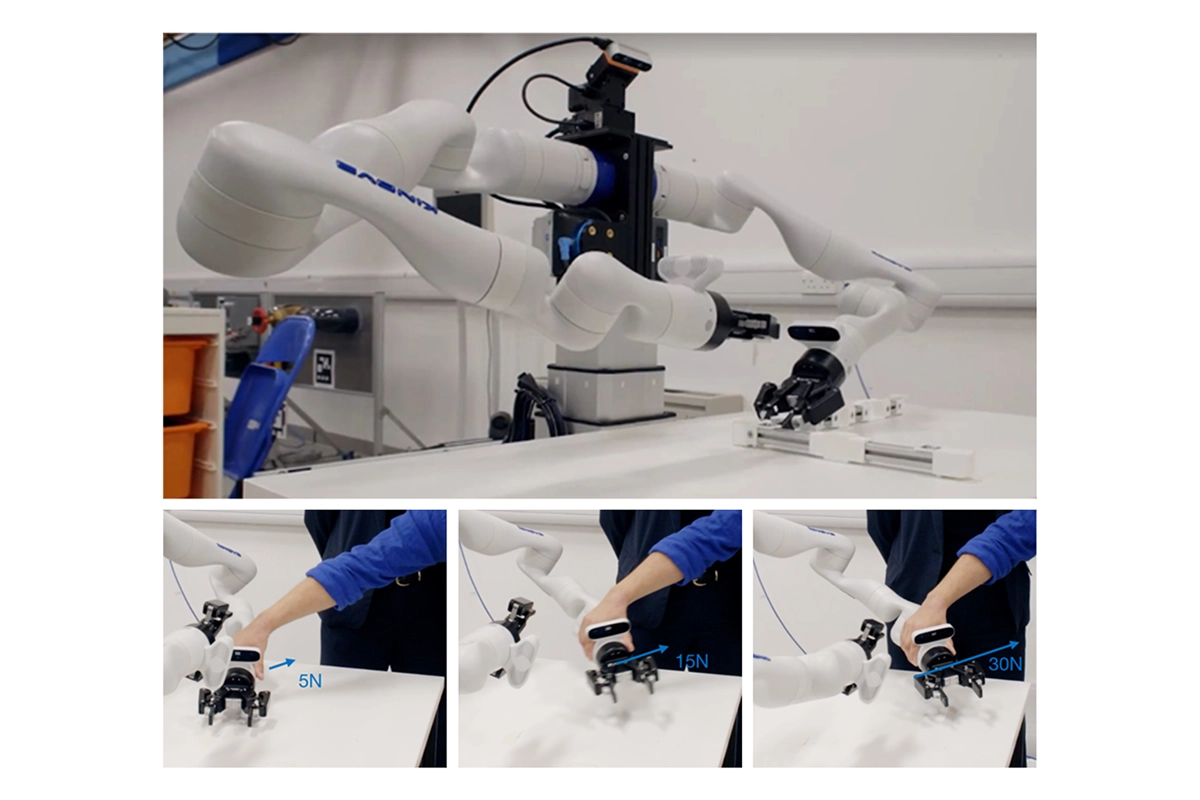

This project addresses a core limitation in robotic manipulation: most industrial robots are rigid and struggle with precision and safety when interacting with people or handling delicate tasks. The ORI has developed a real-time, open-source control system that makes rigid robots behave more compliantly, allowing them to adapt their movements and safely absorb disturbances. The approach blends two modes of control, joint-level and task-level, into a single unified system. Joint-level control regulates the position of each joint in the arm relative to a desired position, whereas task-level control cares only about the position of the end-effector (hand, gripper, etc.); as long as that is correct, the individual joint positions matter less. This hybrid control enables smooth transitions between coarse, sweeping motions and fine, precise actions such as inserting a small pin into a tight hole. The system also compensates for internal friction using a model-free technique, which further improves accuracy.
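One common way to express this kind of hybrid compliant control is as a blend of a joint-space impedance law, `tau = K_q (q_d - q) - D_q dq`, and a task-space impedance law mapped to joint torques through the Jacobian transpose, `tau = J^T (K_x (x_d - x) - D_x dx)`. The sketch below assumes a two-link planar arm, hypothetical gains and a simple weighted blend `alpha`; it illustrates the two modes under those assumptions rather than reproducing the ORI controller, and it omits gravity and friction compensation.

```python
import numpy as np

L1_LEN, L2_LEN = 0.5, 0.4  # hypothetical link lengths (m)

GAINS = {
    "k_q": np.diag([40.0, 30.0]),    # joint stiffness (N m/rad), hypothetical
    "d_q": np.diag([4.0, 3.0]),      # joint damping, hypothetical
    "k_x": np.diag([500.0, 500.0]),  # task-space stiffness (N/m), hypothetical
    "d_x": np.diag([40.0, 40.0]),    # task-space damping, hypothetical
}


def fk(q):
    """End-effector position of the planar 2-link arm."""
    return np.array([
        L1_LEN * np.cos(q[0]) + L2_LEN * np.cos(q[0] + q[1]),
        L1_LEN * np.sin(q[0]) + L2_LEN * np.sin(q[0] + q[1]),
    ])


def jacobian(q):
    """Analytic Jacobian d(fk)/dq of the planar 2-link arm."""
    s1, c1 = np.sin(q[0]), np.cos(q[0])
    s12, c12 = np.sin(q[0] + q[1]), np.cos(q[0] + q[1])
    return np.array([
        [-L1_LEN * s1 - L2_LEN * s12, -L2_LEN * s12],
        [L1_LEN * c1 + L2_LEN * c12, L2_LEN * c12],
    ])


def joint_impedance(q, dq, q_des, k_q, d_q):
    """Spring-damper on every joint angle."""
    return k_q @ (q_des - q) - d_q @ dq


def task_impedance(q, dq, x_des, k_x, d_x):
    """Spring-damper on the end-effector, mapped to joint torques via J^T."""
    J = jacobian(q)
    force = k_x @ (x_des - fk(q)) - d_x @ (J @ dq)
    return J.T @ force


def blended_torque(q, dq, q_des, x_des, alpha, gains):
    """alpha = 1: pure task space; alpha = 0: pure joint space (hypothetical blending rule)."""
    tau_joint = joint_impedance(q, dq, q_des, gains["k_q"], gains["d_q"])
    tau_task = task_impedance(q, dq, x_des, gains["k_x"], gains["d_x"])
    return alpha * tau_task + (1.0 - alpha) * tau_joint


q_des = np.array([0.3, 0.9])
tau = blended_torque(np.array([0.35, 0.85]), np.zeros(2), q_des, fk(q_des), 0.7, GAINS)
```

As a rough worked figure, with the hypothetical task-space stiffness of 500 N/m above, a steady 30 N push would settle at 30 / 500 = 0.06 m of end-effector deflection (ignoring gravity, friction and the joint-space term). Lower stiffness makes the arm more yielding; higher stiffness makes it track more precisely.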

The task- and joint-space controller is deployed on a dual-arm manipulation platform comprising two Kinova Gen3 manipulators mounted along the horizontal axis. The system is real-time capable and integrates with the Robot Operating System (ROS) ecosystem, including NVIDIA Isaac ROS. It also supports high-frequency trajectory streaming, enabling closed-loop execution of trajectories generated by learning-based methods, optimal control or teleoperation. Because the controller is compliant, it is safe for humans to intervene during operation: in one demonstration, a bystander applies a force of up to 30 N to the left arm, and the right arm simultaneously reacts to and tracks this disturbance.
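High-frequency trajectory streaming typically involves a controller that samples setpoints much faster than they arrive. A minimal sketch of one way to do this is below, assuming time-stamped joint setpoints, linear interpolation between them, and holding the last setpoint once the stream runs out. The `TrajectoryStreamer` class and its methods are hypothetical and are not part of the ORI controller's interface.

```python
from bisect import bisect_right


class TrajectoryStreamer:
    """Hypothetical buffer: setpoints arrive at a low rate, the controller samples at a high rate."""

    def __init__(self):
        self.times = []
        self.points = []

    def push(self, t, q):
        """Append a time-stamped joint setpoint; timestamps must strictly increase."""
        if self.times and t <= self.times[-1]:
            raise ValueError("setpoints must arrive in time order")
        self.times.append(t)
        self.points.append(list(q))

    def sample(self, t):
        """Linearly interpolate the setpoint at time t, clamping at both ends."""
        if not self.times:
            raise RuntimeError("no setpoints received yet")
        if t <= self.times[0]:
            return list(self.points[0])
        if t >= self.times[-1]:
            return list(self.points[-1])  # hold the last setpoint
        i = bisect_right(self.times, t)
        t0, t1 = self.times[i - 1], self.times[i]
        w = (t - t0) / (t1 - t0)
        return [a + w * (b - a) for a, b in zip(self.points[i - 1], self.points[i])]


stream = TrajectoryStreamer()
stream.push(0.0, [0.0, 0.0])
stream.push(1.0, [1.0, 2.0])
midpoint = stream.sample(0.5)
```

For example, a teleoperation interface could `push` setpoints at 50 Hz while a 1 kHz control loop calls `sample` on every tick; both rates are illustrative.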

The ORI team showed that the controller delivers both high precision and safe responsiveness to human interference, without needing to restart or reconfigure. The controller is freely available and integrates with common robotics software, making it a practical tool for advanced robotic applications.

The Scan Partnership

The Scan AI team has supported ORI research projects as an industrial member for several years. Scan has provided numerous 3XS Systems AI Development Workstations equipped with NVIDIA RTX GPU accelerators. These custom-designed desktop systems are built for rapid iteration and training of AI models, and can be routinely moved between research labs and connected directly to a range of robot arms or quadrupeds. In addition, the ORI has access to a cluster of NVIDIA DGX servers, a multi-GPU NVIDIA RTX 6000 server and AI-optimised PEAK:AIO NVMe software-defined storage in the Scan Cloud facility, for scaling projects where required.

Project Wins

Accelerated generation of robot digital twin environments and training of AI models using portable and flexible AI Development Workstations

Increased productivity from a combination of on-premises workstations backed up by a high-performance hosted cloud solution

Ingmar Posner

Principal Investigator & Professor of Applied AI, Applied Artificial Intelligence Lab (A2I) at ORI

"We are delighted to have Scan as part of our set of partners and collaborators who are equally passionate about advancing the real-world impact of robotics research. Integral involvement of our technology and deployment partners will ensure that our work stays focused on real and substantive impact in domains of significant value to industry and the public domain."

Dan Parkinson

Director of Collaboration, Scan

"We are proud of our long-standing collaboration with the Oxford Robotics Institute, supporting its research efforts with GPU-accelerated AI Development Workstations. It is a huge privilege to be associated with such a groundbreaking, innovative organisation."

Phil Payseno

Director of 3XS Systems, Scan

"Seeing our custom-designed GPU-accelerated systems power ORI research projects and seamlessly integrate into such important workflows makes me immensely proud. Long may our collaboration continue in the hope of positive outcomes for human-robot interaction."

Speak to an expert

You've seen how Scan continues to help the Oxford Robotics Institute further its research into the development of truly useful autonomous machines. Contact our expert AI team to discuss your project requirements.

Phone: 01204 474210

Email: [email protected]