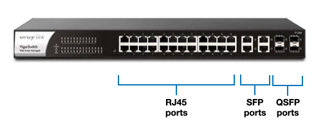

SFP and QSFP Transceiver Modules

For RJ45 Ethernet connections the maximum distance data can be transmitted is 100m, which is limiting for networks that span large buildings, campuses or even whole cities. An SFP port allows fibre optic cabling to be used instead, which suffers less signal loss and can achieve much higher throughput. It is worth mentioning that although Ethernet has traditionally lagged behind InfiniBand on speed, this is now changing as vendors such as NVIDIA Networking make increasing use of common SFP interfaces, perhaps driven by Ethernet's much larger install base and the upgrade opportunity it presents.

Ethernet Switch

InfiniBand Switch

SFP transceivers offer both multi-mode and single-mode fibre connections (the latter being designed for longer-distance transmission), with reach ranging from 550m to 160km. This is an older standard, but modules are still available in 100Mbps and 1Gbps versions. SFP+ transceivers are an enhanced version of SFP that supports up to 16Gbps fibre throughput. As with SFP, multi-mode and single-mode options are available, covering distances up to 160km. SFP28 is a 25Gbps interface which, although faster, is identical in physical dimensions to SFP and SFP+. SFP28 modules are available for single-mode or multi-mode fibre, and as active optical cable (AOC) or direct attach copper (DAC) assemblies.
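As a rough illustration of how these three generations stack up, the sketch below picks the slowest SFP-family module that still meets a required data rate. The maximum rates are the figures quoted in the paragraph above; the table and the function name `smallest_sfp_for` are hypothetical and exist only for this example.

```python
# Maximum data rates in Gbps, as quoted in the paragraph above.
# Ordered from slowest to fastest so the first match is the smallest fit.
SFP_FAMILY_MAX_GBPS = [
    ("SFP", 1),
    ("SFP+", 16),
    ("SFP28", 25),
]


def smallest_sfp_for(required_gbps):
    """Return the slowest SFP-family module that meets the rate, or None."""
    for name, max_gbps in SFP_FAMILY_MAX_GBPS:
        if required_gbps <= max_gbps:
            return name
    # Nothing in the single-channel SFP family is fast enough.
    return None


for rate in (0.1, 10, 25, 40):
    print(f"{rate} Gbps -> {smallest_sfp_for(rate)}")
```

A result of `None` means the requirement exceeds what a single-channel SFP module offers, which is where the multi-channel QSFP family comes in.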

QSFP transceivers are 4-channel versions of SFPs and, like SFPs, are available in a number of versions. Rather than being limited to one protocol, QSFPs can carry both Ethernet and InfiniBand. The original QSFP transceiver specified four channels carrying 1GbE or DDR InfiniBand. QSFP+ is an evolution of QSFP whose four channels each carry 10GbE (40GbE in aggregate) or QDR InfiniBand. The QSFP28 standard carries 100GbE or EDR InfiniBand, QSFP56 carries 200GbE or HDR InfiniBand, and the latest QSFP112 carries 400GbE or NDR InfiniBand.
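The relationship between the four channels and the headline Ethernet speed is simple multiplication, which the sketch below makes explicit. The per-channel figures are rounded nominal Ethernet rates and should be read as an assumption for illustration; InfiniBand signalling rates are not modelled, only the generation names from the text are carried along. The names `QSFP_GENERATIONS` and `aggregate_gbps` are hypothetical.

```python
QSFP_CHANNELS = 4  # every QSFP variant discussed here has four channels

# module -> (nominal Ethernet rate per channel in Gbps, InfiniBand generation)
# Per-channel rates are rounded nominal values (an assumption for this sketch).
QSFP_GENERATIONS = {
    "QSFP": (1, "DDR"),
    "QSFP+": (10, "QDR"),
    "QSFP28": (25, "EDR"),
    "QSFP56": (50, "HDR"),
    "QSFP112": (100, "NDR"),
}


def aggregate_gbps(module):
    """Return the nominal aggregate Ethernet rate: channels x per-channel rate."""
    per_channel_gbps, _infiniband = QSFP_GENERATIONS[module]
    return QSFP_CHANNELS * per_channel_gbps


for module, (per_channel, infiniband) in QSFP_GENERATIONS.items():
    total = aggregate_gbps(module)
    print(f"{module}: {QSFP_CHANNELS} x {per_channel}G = {total}G Ethernet, "
          f"or {infiniband} InfiniBand")
```

Read this way, the module names for the later generations also make sense: the number in QSFP28, QSFP56 and QSFP112 tracks the approximate per-channel signalling rate rather than the aggregate.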