Wired Network Cards Buyers Guide

A wired network card is the means by which a desktop PC, workstation or server connects to a wider network. In the home or home office environment, wireless connectivity is now more common, and you can learn more by reading our WIRELESS NETWORK CARD BUYERS GUIDE. In the business space, however, wired technology is usually the norm, as wired networks offer better security, higher speeds and simpler management. This guide looks at the various types of wired network cards and where they are most commonly used.

Network Interface Card, or NIC, is a general catch-all term, but as we’ll see there are several other common terms, including SmartNIC, SuperNIC, HBA, DPU and IPU. We’ll get to those shortly, but first we’ll start with network protocols.

Networking Technologies and Interfaces

Generally speaking, all PCs, workstations and many servers use Ethernet connectivity, and in most cases there is no need to change the NIC from the one supplied as standard. In more advanced servers used for big data analysis, HPC and AI workloads, InfiniBand was, and often still is, the protocol of choice.

Ethernet

Ethernet is the most common form of communication seen in a network and has been around since the early 1980s. Over this time the speed of connections has vastly increased. The initial commonly available NICs were capable of 10 megabits per second (10Mbps), followed by 100Mbps and Gigabit Ethernet (1GbE or 1000Mbps). In a corporate network, 1GbE has long been the standard, with faster 10GbE, 25GbE, 40GbE and 50GbE speeds also being available. The last few years have seen speeds of Ethernet increase in line with InfiniBand (due to similar offloading technology), to 100GbE, 200GbE, 400GbE and recently 800GbE.
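To put these speed steps in context, the short Python sketch below estimates the best-case time to move a file at each of the Ethernet speeds listed above. It is illustrative only: the 100GB file size and the function name are assumptions made for this example, and real transfers will be slower because of protocol overhead and storage limits.

```python
# Nominal Ethernet link speeds named in this guide, in megabits per second.
ETHERNET_SPEEDS_MBPS = {
    "10Mbps": 10,
    "100Mbps": 100,
    "1GbE": 1_000,
    "10GbE": 10_000,
    "25GbE": 25_000,
    "40GbE": 40_000,
    "50GbE": 50_000,
    "100GbE": 100_000,
    "200GbE": 200_000,
    "400GbE": 400_000,
    "800GbE": 800_000,
}


def ideal_transfer_seconds(size_gigabytes: float, link_mbps: int) -> float:
    """Best-case transfer time, ignoring protocol overhead and storage limits."""
    size_megabits = size_gigabytes * 8 * 1_000  # decimal units: 1GB = 8,000 megabits
    return size_megabits / link_mbps


if __name__ == "__main__":
    file_size_gb = 100  # hypothetical file size, chosen only for illustration
    for name, mbps in ETHERNET_SPEEDS_MBPS.items():
        seconds = ideal_transfer_seconds(file_size_gb, mbps)
        print(f"{name:>7}: {seconds:>10.1f} s")
```

Under these assumptions, the same file that takes over 13 minutes on 1GbE needs only a few seconds at 100GbE and above, which is why the faster speeds matter for data-heavy servers.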

InfiniBand

InfiniBand is an alternative technology to Ethernet. Developed in the late 1990s, it is usually used in HPC and AI clusters where high bandwidth and low latency are key requirements. Although an InfiniBand HBA fits in a server the same way as an Ethernet NIC and transfers data in a similar way, InfiniBand HBAs have historically achieved better throughput by bypassing the server CPU when controlling data transmission, which also reduces latency. Like Ethernet, there have been several generations of InfiniBand, starting with SDR (Single Data Rate) providing 8Gbps throughput. This has since been superseded by DDR - 16Gbps, QDR - 32Gbps, FDR - 54Gbps, EDR - 100Gbps, HDR - 200Gbps, NDR - 400Gbps and, most recently, XDR - 800Gbps.
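As a rough aid when matching a card to a bandwidth requirement, the generations above can be written as a simple lookup. The Python sketch below uses the throughput figures quoted in this guide; the function name and the idea of picking the oldest generation that meets a target are assumptions made for illustration, not a sizing rule.

```python
from typing import Optional

# Throughput figures as quoted in this guide, in Gbps, oldest generation first.
INFINIBAND_GENERATIONS = [
    ("SDR", 8),
    ("DDR", 16),
    ("QDR", 32),
    ("FDR", 54),
    ("EDR", 100),
    ("HDR", 200),
    ("NDR", 400),
    ("XDR", 800),
]


def oldest_generation_for(required_gbps: float) -> Optional[str]:
    """Return the oldest generation whose quoted throughput meets the target.

    Returns None when no generation in the table is fast enough.
    """
    for name, gbps in INFINIBAND_GENERATIONS:
        if gbps >= required_gbps:
            return name
    return None


if __name__ == "__main__":
    for target in (10, 150, 1000):  # hypothetical bandwidth targets in Gbps
        print(f"{target} Gbps -> {oldest_generation_for(target)}")
```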

Types of Network Card

Although, as mentioned, the name of a network card (NIC or HBA) is related to the networking protocol, in most cases this is superseded by a naming system based on the functionality of the card. Standard network cards for PCs, workstations and servers are still referred to as NICs, whereas cards with added intelligence are called SmartNICs, and cards that take on many more management functions are termed SuperNICs, DPUs or IPUs.

NICs

Although only a small component in an overall system build, the NIC can contribute to a huge uplift in performance. Basic PC or workstation NICs start with throughput speeds of 1Gbps (gigabits per second) through a single port, scaling to 40Gbps at the top end for server use, with two or four ports. All processing of data is performed by the CPU(s) and GPU(s) installed in the server, which introduces latency as data is transferred around the server.

SmartNICs

A SmartNIC performs all the tasks of a regular NIC, but in order to cope with higher throughput speeds it offloads some of the work, reducing pressure on other components in the server. This means the network card itself performs some of the processing tasks, avoiding the latency usually introduced by the CPU, system memory and operating system. This offloading is referred to as Remote Direct Memory Access (RDMA) for InfiniBand cards and RDMA over Converged Ethernet (RoCE) for Ethernet cards.

SuperNICs

While a DPU and a SuperNIC share features and capabilities, SuperNICs are designed for network-intensive computing, providing RoCE network connectivity between GPU servers, optimising peak AI workload efficiency. As the sole purpose of the SuperNIC is to accelerate networking, it consumes less power than a DPU, which requires significant resources to offload applications from the CPU(s).

DPUs / IPUs

DPUs or IPUs offer an uplift over SmartNICs or SuperNICs by offloading, accelerating and isolating a broad range of advanced storage, networking and security services, including GPUDirect Storage, encryption, elastic storage, data integrity, decompression and deduplication. They provide a secure and accelerated infrastructure for HPC or AI workloads in largely containerised environments.

Network Card Port & Types

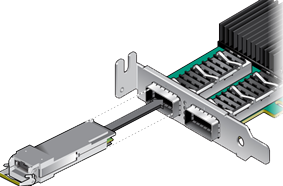

Most standard Ethernet NICs use RJ45 ports connected by copper cables, whereas Ethernet and InfiniBand SmartNICs, SuperNICs and DPUs / IPUs use SFP (small form-factor pluggable) or QSFP (quad small form-factor pluggable) ports that use fibre optic cables. Regardless of which port type is used, the most common type of card is PCIe format. PCIe is the standard bus found in all PCs, workstations and servers.

PCIe card with dual RJ45 ports

PCIe card with dual SFP ports

OCP card with dual SFP ports

The exception to the near-universal PCIe format is the OCP form factor. The Open Compute Project (OCP) is an organisation that shares designs of datacentre products and best practices among companies in an attempt to promote standardisation and improve interoperability. The OCP is supported by many large networking manufacturers, including NVIDIA, Cisco and Dell, the result being a standard OCP form factor card, of which OCP3.0 is the latest version. It is worth pointing out that the OCP affects all facets of the datacentre, including server design, so an OCP NIC would only be required when using an OCP server format.

Network Card Accessories

Although the network card is typically installed inside the system, the physical connection to network switches is via a cable - depending on the type of protocol and distance between connections a variety of transceiver modules or cable types will be required.

Transceivers

SFP transceivers offer both multi-mode and single-mode fibre optical connections (the latter being designed for longer distance transmission), with reach ranging from 550m to 160km. These are older technology standards but are still available in either 100Mbps or 1Gbps versions. Newer SFP+ transceivers are an enhanced version that supports up to 16Gbps throughput. QSFP transceivers are 4-channel versions of SFPs, but rather than being limited to one connectivity medium, QSFPs can transmit Ethernet or InfiniBand. The original QSFP transceiver specified four channels carrying 1Gbps Ethernet or 5Gbps (DDR) InfiniBand. QSFP+ is an evolution to support four channels carrying 10Gbps Ethernet or 10Gbps (QDR) InfiniBand. The four channels can also be combined into a single 40Gbps Ethernet link. The QSFP28 standard is designed to carry 100Gbps Ethernet or 100Gbps (EDR) InfiniBand. Finally, QSFP56 is designed to carry 200Gbps Ethernet or 200Gbps (HDR) InfiniBand.
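Because every QSFP variant combines four channels, the per-channel rate can be worked out by dividing the aggregate Ethernet figure by four. The Python sketch below does this using the aggregate rates quoted above; the names are hypothetical, and the per-channel values for QSFP28 and QSFP56 are derived by that division rather than quoted in this guide.

```python
QSFP_CHANNELS = 4  # every QSFP variant described above uses four channels

# Aggregate Ethernet rate per module type, in Gbps, as quoted in this guide.
QSFP_AGGREGATE_GBPS = {
    "QSFP+": 40,
    "QSFP28": 100,
    "QSFP56": 200,
}


def per_channel_gbps(module: str) -> float:
    """Derive the per-channel rate by splitting the aggregate across four channels."""
    return QSFP_AGGREGATE_GBPS[module] / QSFP_CHANNELS


if __name__ == "__main__":
    for module, total in QSFP_AGGREGATE_GBPS.items():
        lane = per_channel_gbps(module)
        print(f"{module}: {QSFP_CHANNELS} x {lane:g}Gbps = {total}Gbps")
```

The same arithmetic explains why a single QSFP port can often be split into four lower-speed links using the splitter cables mentioned in the next section.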

Cables

DAC (Direct Attach Copper) cables are the lowest cost type of cabling for SFPs and QSFPs, whereas Active Optical Cables (AOCs) are used to create faster fibre optical links between devices. AOCs are widely used to link servers to memory and storage subsystems because they consume little power, allow open airflow, and offer low weight and low latency. They typically come in lengths up to 300m and have splitter options available too.

Network Considerations

Although this guide is concerned with wired networking cards there are a couple of related aspects to consider when looking at configuring systems and connecting them to a wider network.

Redundancy

It is possible to connect to a network using a USB dongle too, though usually these are only available using 1GbE rather than any of the other communication protocols. An adapter would fit into any USB port on the system and act to make an Ethernet connection. It is fair to say that this type of network connection would only be employed in the absence of available PCIe slots.

Switches

Network switches interconnect the NICs within servers and also give all the users on the network access to those servers from their respective desktop PCs, laptops or workstations. Where switches are deployed within the network influences the speed required and the communication protocol best suited. SME networks are usually adequately served by network speeds of between 1Gbps and 10Gbps, whereas 25-50Gbps speeds are needed for large file transfers such as video media. The highest speeds, of 100Gbps and over, enable tasks such as HPC and AI. Read our NETWORK SWITCH BUYERS GUIDE.

Ready To Buy?

Browse our range of Network Card options:

RJ45 Network Cards
SFP Network Cards
External Network Cards

If you would like further advice on the best connectivity solution for your system, don’t hesitate to contact our friendly team on 01204 474747 or contact [email protected]