GTC 2025 Keynote

Watch our Keynote highlights video below for the latest announcements from NVIDIA

Why Work with Scan?

Only UK DGX Managed Services Provider

Only DGX Cloud Provider in Europe

Only service provider certified for both consultancy & hardware

Key GTC Announcements

Day 1 Highlights

Day 2 Highlights

Day 3 Highlights

Day 4 Highlights

Be the First to Know

Discover the next-gen GPUs and AI appliances below; make sure to register your interest to be the first in the queue when more information is available.

RTX PRO Blackwell Workstation GPUs

The latest generation of workstation GPUs for artists, architects, engineers and data scientists. Based on the Blackwell architecture, these new GPUs deliver unparalleled AI performance, up to 3x faster than Ada Lovelace GPUs, and add support for FP4. Blackwell GPUs are also incredible for ray tracing, rendering up to 2x faster than Ada Lovelace GPUs. Multiple models are available, including the RTX PRO 6000 with a massive 96GB of memory, plus the 5000, 4500 and 4000.

RTX PRO Blackwell GPUs are available in 2-, 3- and 4-way configurations in workstations from Scan 3XS Systems.

DGX Station GB300

The new DGX Station provides datacentre-level performance in a desktop device, making it the ultimate appliance for AI developers, researchers and data science teams. It is powered by the GB300 Superchip, which combines 72 powerful Arm CPU cores and a Blackwell Ultra GPU, served by a huge 784GB of coherent memory.

As an AI appliance, the DGX Station is preinstalled with DGX Base OS and the full NVIDIA AI software stack, speeding up AI model development.

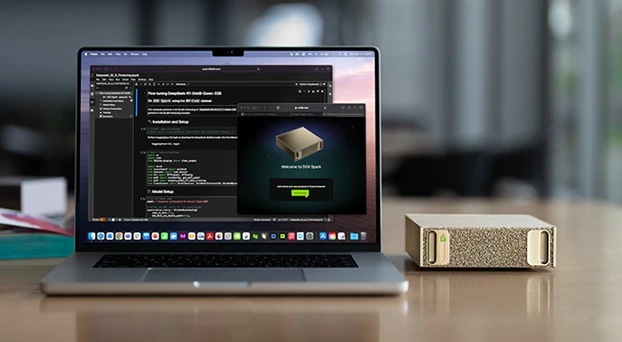

DGX Spark

The growing size and complexity of generative AI models make development very challenging on traditional AI workstations. The DGX Spark solves this problem, providing up to 1,000 TOPS of AI performance via its GB10 Grace Blackwell Superchip in a compact desktop device. The Superchip combines 20 powerful Arm CPU cores with a Blackwell GPU, served by 128GB of unified memory.

As an AI appliance, the DGX Spark is preinstalled with DGX Base OS and the full NVIDIA AI software stack, speeding up AI model development.

RTX PRO Blackwell Server GPU

The latest generation of server GPUs for visual and AI workloads. Based on the Blackwell architecture, this new GPU delivers unparalleled AI performance, up to 3x faster than Ada Lovelace GPUs, and adds support for FP4. Blackwell GPUs are also incredible for ray tracing, rendering up to 2x faster than Ada Lovelace GPUs.

The RTX PRO 6000 Blackwell Server Edition GPU with a massive 96GB of memory is available in multiple configurations in NVIDIA EGX servers from Scan 3XS Systems.

Blackwell coming to HGX servers

The next generation of NVIDIA HGX servers will also be upgraded to the Blackwell architecture.

The base configuration, the HGX B200, includes 8 Blackwell GPUs. There will also be a more powerful configuration, the HGX B300 NVL16, with 16 Blackwell Ultra GPUs.

Both configurations are now available from Scan.

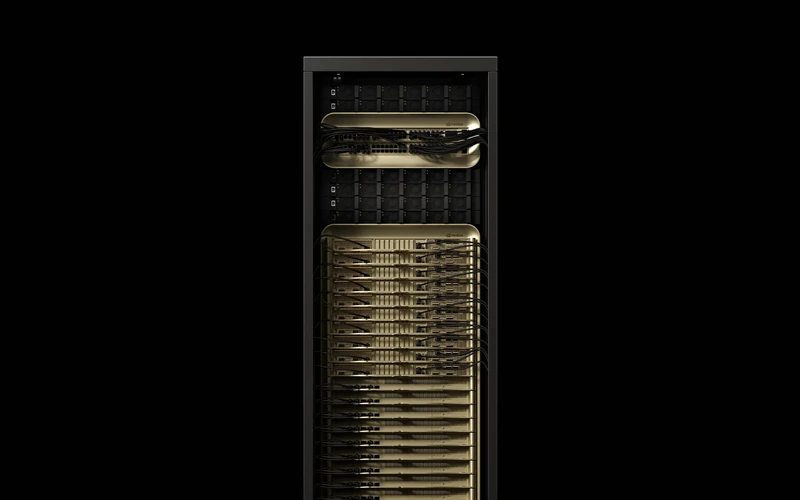

NVIDIA GB300 NVL72 for AI factories

A turnkey AI factory, providing the ultimate in performance for agentic AI and generative AI projects.

The GB300 NVL72 combines 36 nodes, each with a pair of Blackwell Ultra GPUs and a Grace CPU, working together as a unified AI platform. It delivers 50x higher performance than previous-generation Hopper systems.

The GB300 NVL72 will be available from the second half of 2025 from Scan.

Silicon Photonics Switches

AI factories powered by hundreds of GPUs require more efficient networking than traditional fibre connections. Silicon photonics removes the need for transceivers, slashing power usage by 3.5x while delivering 63x greater signal integrity and 10x better resiliency.

NVIDIA Quantum-X Photonics InfiniBand switches are expected to be available later this year from Scan, with NVIDIA Spectrum-X Photonics Ethernet switches coming in 2026.

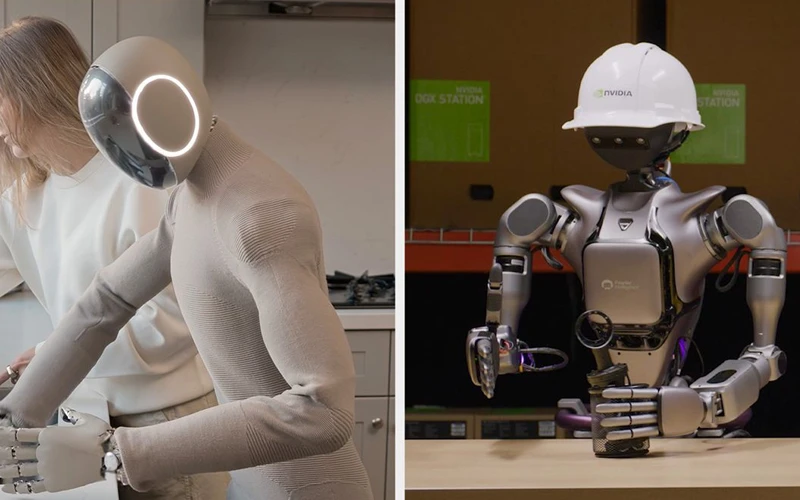

Isaac GR00T N1 & Newton Robotics

NVIDIA announced new software to improve robot development and simulation.

Isaac GR00T N1 is a foundational model for humanoid robots with two modes: System 1, fast-thinking actions that mirror human reflexes and intuition, and System 2, slow-thinking actions for methodical decision-making.

This is enhanced by Newton, a new physics engine developed in partnership with Disney and Google.

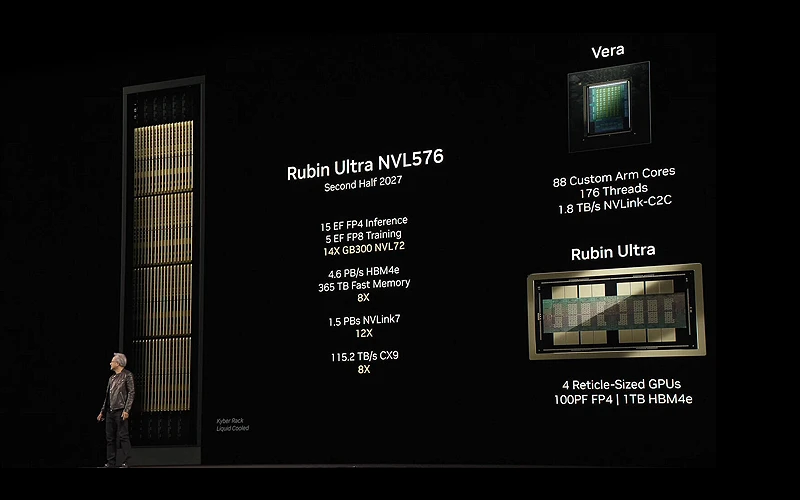

Next-Gen GPUs & CPUs Revealed

NVIDIA also teased the future generation GPU and CPU architectures coming down the line in the next few years.

The next GPU architecture, codenamed Rubin, will replace Blackwell and include multiple GPUs in a single package with up to 1TB of HBM4e memory. Delivering up to 100 petaFLOPS of FP4 performance, products based on Rubin are planned for 2027.

The next CPU, codenamed Vera, will replace Grace and include 88 custom Arm cores with SMT, for a total of 176 threads.

Following these, the next GPU architecture, codenamed Feynman, is planned for 2028.

NVIDIA GTC March 2024

The Scan AI team were at GTC showcasing a virtual walkthrough of Lilith, an XR Dance Experience powered by AI technology over the Scan Cloud platform. We also showcased a Specsavers retail store design using NVIDIA Omniverse. See all the highlights in our videos below and learn more about the latest NVIDIA announcements from Jensen’s keynote.

You can also get in touch with the Scan AI team about anything regarding GTC or AI.

Key GTC Announcements

Be the first to know

Discover the next-gen Blackwell GPU architecture and AI appliances below and register your interest to be the first in the queue when more information is available.

Blackwell GPU Architecture

The latest Blackwell GPU architecture has been designed for building and training generative AI models. Blackwell delivers up to 30x faster inferencing, 4x faster training and 25x lower TCO than its predecessor, Hopper. Multiple Blackwell-based GPUs will be available from late 2024.

800Gb/s Networking

AI systems need rapid access to data so NVIDIA announced an ecosystem of 800Gb/s networking products, doubling the throughput of today’s fastest networks. Key products include Quantum-X800 InfiniBand and Spectrum-X800 Ethernet switches plus ConnectX-8 SmartNICs, planned for launch in late 2024.

DGX B200

The latest DGX AI appliance, the DGX B200, features eight B200 GPUs with a total of 1.44TB of GPU memory, along with two Intel Xeon CPUs and 400Gb/s networking. The DGX B200 is expected to launch in late 2024.

HGX B200 & B100

B200 and B100 GPUs will also be available in custom-built GPU servers based on the HGX platform. Expect up to 15x faster inferencing than their predecessors using H100 GPUs. Keep an eye out for these systems in late 2024.

DGX SuperPOD GB200

Delivering the ultimate in performance for LLMs and generative AI projects, SuperPODs feature 36 GB200 Superchips per rack, connected via 5th gen NVLink. Planned availability is late 2024.

NIM Microservices

NIM is a new collection of pre-built containers that massively speed up deploying generative AI projects. NIM is available exclusively via NVIDIA AI Enterprise, bundled as standard with NVIDIA DGX appliances and select GPUs, and also available as a standalone license.

cuOpt Microservice

The cuOpt microservice accelerates operations optimisation by enabling faster and better decisions in areas such as logistics and supply chains. cuOpt is available exclusively via NVIDIA AI Enterprise, bundled as standard with NVIDIA DGX appliances and select GPUs, and also available as a standalone license.

Omniverse Cloud APIs

A new collection of five APIs that extend the capabilities of Omniverse for creating digital twins and simulating autonomous machines such as robots and self-driving vehicles. Expect to see new applications from ISVs such as Ansys, Cadence, Dassault Systèmes, Hexagon, Microsoft, Rockwell Automation, Siemens and Trimble.

Project GR00T

A new general-purpose foundational model designed to drive breakthroughs in robotics and embodied AI. Project GR00T will be accelerated by the new Jetson Thor SoC, which is based on the Blackwell architecture, and by the Isaac framework.

Interviews from GTC

Keynote highlights from previous GTCs
