Deep learning hardware

Deep Learning Hardware

Deep Learning uses multi-layered deep neural networks to simulate the functions of the brain to solve tasks that have previously eluded scientists. As neural networks have multiple layers they are best run on highly parallel processors. For this reason, you’ll be able to train your network much faster on GPUs than CPUs as the latter are more suited to serial tasks.

Deep learning expertise

Most Deep Learning frameworks make use of a specific library called cuDNN (CUDA Deep Neural Networks) which is specific to NVIDIA GPUs. So how do you decide which GPUs to get?

There are many NVIDIA GPU options ranging from the cheaper TITAN X workstation oriented cards, to the more powerful Tesla - compute oriented cards, and beyond to the even more capable DGX-1 server. All of these can run Deep Learning applications, but there are some important differences to consider.

GPU Performance

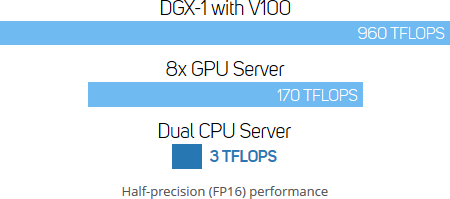

In this graph we can see the dramatic performance difference in teraFLOPs of the DGX-1 Deep Learning server versus a server with eight GPUs plus a traditional server with two Intel Xeon E5 2697 v3 CPUs. Of course you can train Deep Learning Networks without a GPU however the task is so computationally expensive that it’s almost exclusively done using GPUs.

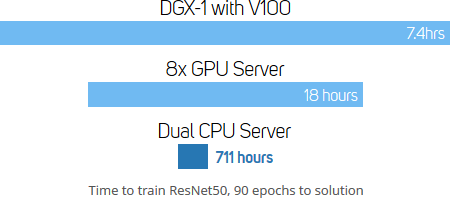

This graph shows the real world benefits in time saved when training on a second-generation DGX-1 versus a server with eight GPUs plus a traditional server with two Intel Xeon E5 2699 v4 CPUs.

Graphics processing unit

Most Deep Learning only requires half precision (FP16) calculations, so make sure you choose a GPU that has been optimised for this type of workload. For instance, while most GeForce gaming cards are optimised for single precision (FP32) they do not run FP16 significantly faster. Similarly, many older Tesla cards such as those based on the Kepler architecture were optimised for single (FP32) and double (FP64) precision and so are not such a good choice for Deep Learning.

In contrast, Tesla are GPUs based on the Pascal architecture can process two half precision (FP16) calculations in one operation, effectively halving the memory load leading to a big speed up in Deep Learning. However, this is not true for all Pascal GPUs, which is why we don’t recommend GeForce cards in our Deep Learning systems. The latest Tesla GPUs are based on the Volta architecture and in addition to CUDA cores also have Tensor cores which are dedicated for deep learning, massively speeding up training time.

GPU differences

GPUs with more cores have more raw compute performance. Tensor cores are a new type of programmable core exclusive to GPUs based on the Volta architecture that run alongside standard CUDA cores. Tensor cores can perform 4x4 Matrix operations in one unit, significantly boosting performance in FP16 and FP32 calculations. For instance, a single Tensor core produces the equivalent of 64 FMA operations per clock, equivalent to 1024 FLOPs per SM, compared to just 256 FLOPs per SM for standard CUDA cores.

Memory Speeds

The latest Tesla V100 GPUs are based on the Volta architecture and utilise (HBM2) High Bandwidth Memory which provides up to 900GB/sec memory bandwidth. In contrast, the Pascal-based TITAN Xp uses slower GDDR5X memory which provides up to 548GB/sec memory bandwidth, which will make a big difference in Deep Learning tasks.

NVLink

NVLink is a high bandwidth interconnect developed by NVIDIA to link GPUs together allowing them to work in parallel much faster than over the PCI-E bus.

NVLink is currently only available in the DGX-1 server, with NVLink helping the first-generation DGX-1 with eight Tesla P100 cards to communicate 5x faster than PCI-E, and is the primary reason for the huge performance difference between the DGX-1 and standard GPU servers.

The second-generation DGX-1 with eight Tesla V100 cards features an improved version of NVLink, with communication between the GPUs boosted up to 10x compared to a standard GPU server. This performance increase is achieved by increasing the bandwidth of NVLink from 20 to 25GB/sec (bidirectional) plus increasing the number of links per GPU from 2 to 6.

This increased throughput enables more advanced modelling and data-parallel techniques for stronger scaling and faster training.

GPU Specifications

| Quadro GP100 | Titan Xp | Titan V | Tesla K80 | Tesla M40 | Tesla P100 (PCI-E) | Tesla P100 (NVLink) | Tesla V100 (PCI-E) | Tesla V100 (NVLink) | |

|---|---|---|---|---|---|---|---|---|---|

| Architecture | Pascal | Pascal | Volta | Kepler | Maxwell | Pascal | Pascal | Volta | Volta |

| Tensor Cores | 0 | 0 | 640 | 0 | 0 | 0 | 0 | 640 | 640 |

| CUDA Cores | 3584 | 3840 | 5120 | 2496 per GPU | 3072 | 3584 | 3584 | 5120 | 5120 |

| Memory | 16GB | 12GB | 12GB | 12GB per GPU | 24GB | 12GB or 16GB | 16GB | 16GB | 16GB |

| Memory Bandwidth | 717GB/s | 548GB/s | 653GB/s | 240GB/s per GPU | 288GB/s | 540 or 720GB/s | 720GB/s | 900GB/s | 900GB/s |

| Memory Type | HBM2 | GDDR5X | HBM2 | GDDR5 | GDDR5 | HBM2 | HBM2 | HBM2 | HBM2 |

| ECC Support | yes | no | no | yes | yes | yes | yes | yes | yes |

| Interconnect Bandwidth | 32GB/s | 32GB/s | 32GB/s | 32GB/s | 32GB/s | 32GB/s | 160GB/s | 32GB/s | 300GB/s |