Our Aim

To provide you with an overview of new and existing technologies, hopefully helping you understand the changes in the technology. Together with the overviews, we hope to bring topical issues to light from a series of independent reviewers, saving you the time and hassle of fact-finding across the web.

We will over time provide you with quality content which you can browse and subscribe to at your leisure.

TekSpek's

Turing

Date issued:

NVIDIA, the leader in computer graphics, releases new microarchitectures every few years that further the capabilities of graphics cards with respect to performance and features.

NVIDIA names its GPU architectures after eminent scientists and mathematicians; recent examples include Kepler, Maxwell, Pascal and Volta. Most of these underpin GeForce families such as the GTX 6-series, 7-series, 9-series and 10-series. 2018 brings arguably the biggest shift in NVIDIA's design thinking yet, in the form of Turing.

The Turing architecture is the foundation for the GeForce RTX 20-series cards, announced in August and available from late September. This TekSpek describes what Turing is, how it works, and what to expect from a performance point of view.

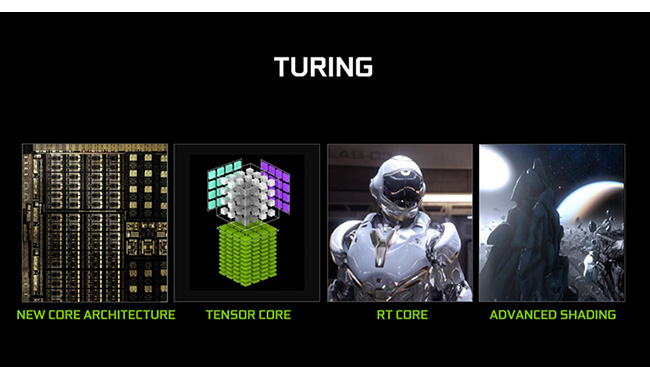

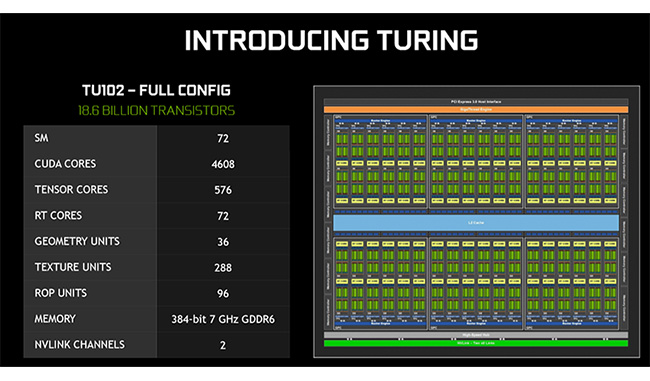

At a high level, Turing beats last-generation Pascal in a number of important ways. NVIDIA has improved practically every facet of the design that affects performance: reworked shader cores, faster on- and off-chip memory, brand-new Tensor cores that accelerate artificial intelligence and deep learning, equally new RT cores that promise immaculate image quality through ray tracing, and a number of advanced shading techniques. Quite a roster.

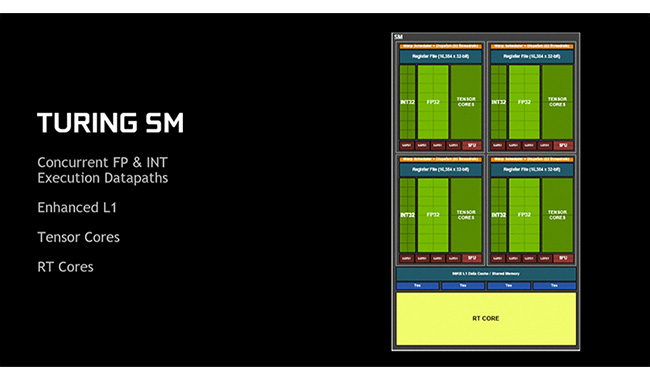

Let's take a closer look at one of Turing's Streaming Multiprocessors (SMs). Each SM is home to the general shader cores as well as the new Tensor and RT cores. Shaders are the backbone of any GPU, processing the instructions that build up the gaming image. These instructions are broadly either floating point (FP) or less-complex integer (INT). In previous GPU designs one type of core handled both; NVIDIA has realised that it makes more sense to split the shader core so that FP and INT work can be processed concurrently. Handling INT instructions separately, yet at the same time, frees up the more powerful FP units to run at full capacity, making Turing more efficient than, say, Pascal.
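The benefit of splitting the pipes can be seen with a deliberately crude throughput model. This Python sketch assumes each pipe issues one instruction per clock and ignores latency, dependencies and scheduling; the 100 FP to 36 INT instruction mix and the function names are illustrative assumptions, not NVIDIA's figures or APIs.

```python
# Toy model: how many clocks does an instruction mix take to issue?
# Assumes one instruction per pipe per clock; ignores latency and dependencies.


def cycles_shared_pipe(fp_ops: int, int_ops: int) -> int:
    """Pascal-style: FP and INT share one issue path, so they serialise."""
    return fp_ops + int_ops


def cycles_split_pipes(fp_ops: int, int_ops: int) -> int:
    """Turing-style: separate FP and INT pipes run concurrently,
    so the longer of the two streams sets the pace."""
    return max(fp_ops, int_ops)


if __name__ == "__main__":
    fp_ops, int_ops = 100, 36  # illustrative instruction mix (assumption)
    shared = cycles_shared_pipe(fp_ops, int_ops)
    split = cycles_split_pipes(fp_ops, int_ops)
    print(f"shared pipe: {shared} clocks, split pipes: {split} clocks")
    print(f"speed-up: {shared / split:.2f}x")
```

With this mix the shared design needs 136 clocks against 100 for the split one, because the integer work now runs alongside the floating-point work instead of queuing in front of it.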

NVIDIA has also improved the on-chip cache by making it larger and easier to address, while a number of other, related tweaks help keep performance moving along nicely. Meanwhile, external memory speed rises thanks to brand-new GDDR6 operating at 14Gbps, up from the 11Gbps of the GDDR5X used in the premium GeForce GTX 108x cards.
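Per-pin data rate only becomes bandwidth once it is multiplied by the width of the memory bus. The sketch below assumes a 352-bit bus on both sides (the width used by the GeForce GTX 1080 Ti and RTX 2080 Ti) so that only the per-pin speed changes; the helper name is hypothetical.

```python
# Peak memory bandwidth from per-pin data rate and bus width.


def peak_bandwidth_gb_s(data_rate_gbps: float, bus_width_bits: int) -> float:
    """Each pin moves data_rate_gbps gigabits per second; multiplying by the
    number of pins gives gigabits per second, and dividing by 8 gives gigabytes."""
    return data_rate_gbps * bus_width_bits / 8


if __name__ == "__main__":
    bus_width = 352  # assumed bus width, held equal for a like-for-like comparison
    gddr5x = peak_bandwidth_gb_s(11, bus_width)
    gddr6 = peak_bandwidth_gb_s(14, bus_width)
    print(f"GDDR5X @ 11Gbps: {gddr5x:.0f} GB/s")
    print(f"GDDR6  @ 14Gbps: {gddr6:.0f} GB/s")
    print(f"uplift: {gddr6 / gddr5x - 1:.0%}")
```

Under that assumption, 11Gbps works out to 484GB/s and 14Gbps to 616GB/s, an uplift of roughly 27%.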

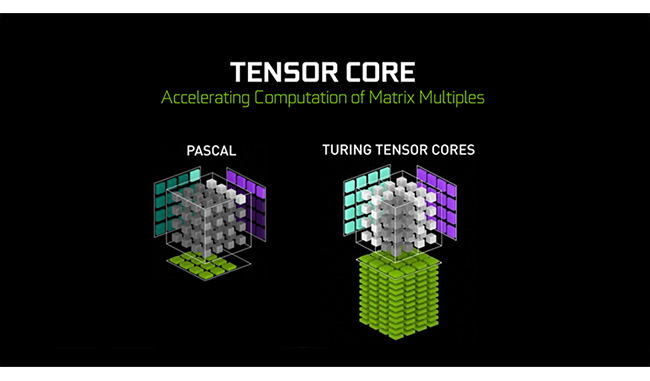

Not seen on a consumer GPU before, Tensor cores are specialised bits of hardware that are extremely good at the matrix operations used heavily in both the training and inferencing stages of deep learning. It may seem strange to devote GPU real estate to such specific processors, but NVIDIA says that deep learning can have a huge impact on image quality in games. As an example, NVIDIA is introducing what it calls deep learning super-sampling (DLSS), a technique made possible by the Tensor cores.
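NVIDIA describes the basic operation of a Volta or Turing Tensor core as a fused matrix multiply-accumulate, D = A × B + C, on small 4×4 tiles (FP16 inputs, accumulating at FP16 or FP32). The scalar Python below only spells out that arithmetic for square matrices; it says nothing about how the hardware is built, and `mma` is a hypothetical name.

```python
# The maths a Tensor core accelerates: D = A x B + C on small square tiles.
# Plain scalar Python, purely to show the arithmetic.

Matrix = list[list[float]]


def mma(a: Matrix, b: Matrix, c: Matrix) -> Matrix:
    """Return D = A @ B + C for square matrices of equal size."""
    n = len(a)
    return [
        [sum(a[i][k] * b[k][j] for k in range(n)) + c[i][j] for j in range(n)]
        for i in range(n)
    ]


if __name__ == "__main__":
    identity = [[1.0 if i == j else 0.0 for j in range(4)] for i in range(4)]
    a = [[float(i * 4 + j) for j in range(4)] for i in range(4)]
    ones = [[1.0] * 4 for _ in range(4)]
    d = mma(a, identity, ones)  # A x I + 1 adds one to every element of A
    for row in d:
        print(row)
```

Deep-learning layers are largely long chains of exactly this multiply-accumulate pattern, which is why dedicating silicon to it pays off.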

DLSS improves the quality of anti-aliasing (the removal of the jagged edges you see around sharp objects) by taking a neural network trained offline on supercomputers and running it on the Tensor cores. Because the network has learned what to look for, it can add detail to an existing image. It sounds fantastical, but such on-the-fly network processing works if implemented correctly.
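To make the offline/online split concrete, here is a toy in Python. The "network" is a single 3×3 convolution plus a clamp, and its weights are hypothetical placeholders standing in for values that would come from offline training. Real DLSS networks are far larger and NVIDIA's trained weights are not reproduced here; the point is only that at render time, inference means applying fixed weights to every pixel, which is multiply-accumulate work of the kind Tensor cores speed up.

```python
# Toy stand-in for running an offline-trained network at render time.
# WEIGHTS are hypothetical placeholders, fixed in advance; "inference"
# just applies them to every pixel of the incoming greyscale frame.

Image = list[list[float]]

# Hypothetical 3x3 sharpening-style kernel standing in for trained weights.
WEIGHTS = [
    [0.0, -0.25, 0.0],
    [-0.25, 2.0, -0.25],
    [0.0, -0.25, 0.0],
]


def infer(image: Image, weights: Image = WEIGHTS) -> Image:
    """Apply a 3x3 convolution, then clamp each result to [0, 1].
    Samples outside the frame reuse the nearest edge pixel."""
    h, w = len(image), len(image[0])
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            acc = 0.0
            for dy in (-1, 0, 1):
                for dx in (-1, 0, 1):
                    sy = min(max(y + dy, 0), h - 1)  # clamp-to-edge sampling
                    sx = min(max(x + dx, 0), w - 1)
                    acc += weights[dy + 1][dx + 1] * image[sy][sx]
            row.append(min(max(acc, 0.0), 1.0))
        out.append(row)
    return out
```

Because the placeholder weights sum to one, a flat region passes through unchanged while edges gain contrast.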

There are many other image-related enhancements that Tensor cores can help with, and it will be interesting to see how NVIDIA brings them to the table in the coming weeks and months.

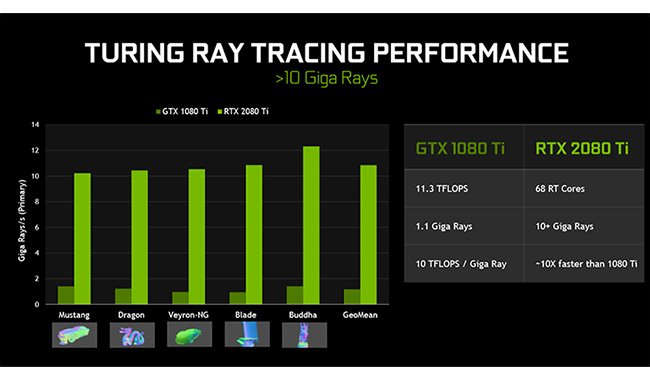

Ray tracing is a compute-intensive technique for producing hyper-realistic graphics. In its simplest form, it fires rays through each pixel into a scene, calculates whether each ray hits a triangle, and then uses that hit to determine the colour of the pixel the ray passed through. It is super-accurate because the colour is determined by where the ray meets the triangle, so even as rays travel through reflections and shadows, the final result stays physically accurate.
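The "does this ray hit a triangle?" step can be written out with the Möller-Trumbore algorithm, one widely used ray/triangle test. This Python sketch illustrates the technique; it is not a description of what NVIDIA's RT cores do internally, and the helper names are hypothetical.

```python
# Möller-Trumbore ray/triangle intersection: returns the distance t along
# the ray to the hit point, or None if the ray misses the triangle.
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]


def sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def cross(a: Vec3, b: Vec3) -> Vec3:
    return (
        a[1] * b[2] - a[2] * b[1],
        a[2] * b[0] - a[0] * b[2],
        a[0] * b[1] - a[1] * b[0],
    )


def intersect(origin: Vec3, direction: Vec3, v0: Vec3, v1: Vec3, v2: Vec3,
              eps: float = 1e-9) -> Optional[float]:
    edge1 = sub(v1, v0)
    edge2 = sub(v2, v0)
    p = cross(direction, edge2)
    det = dot(edge1, p)
    if abs(det) < eps:  # ray is parallel to the triangle's plane
        return None
    inv_det = 1.0 / det
    s = sub(origin, v0)
    u = dot(s, p) * inv_det  # first barycentric coordinate
    if u < 0.0 or u > 1.0:
        return None
    q = cross(s, edge1)
    v = dot(direction, q) * inv_det  # second barycentric coordinate
    if v < 0.0 or u + v > 1.0:
        return None
    t = dot(edge2, q) * inv_det  # distance along the ray to the hit
    return t if t > eps else None  # ignore hits behind the ray origin
```

For example, `intersect((0.25, 0.25, 1.0), (0.0, 0.0, -1.0), (0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0))` returns 1.0: the ray travels one unit before hitting the triangle lying in the z = 0 plane. The barycentric coordinates u and v computed along the way are what a shader would use to interpolate the triangle's colour or texture at the hit point.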

Though considered the gold standard of image quality, real-time ray tracing is problematic because determining whether each ray hits any of the numerous triangles in a scene takes so much processing power. Hollywood studios, with almost unlimited processing budgets, can take days to render a single scene at the highest quality. NVIDIA, on the other hand, is incorporating specialist RT cores whose job is to find the necessary ray/triangle intersection point as quickly as possible and hand this information back to the shader core so that it can colour the pixel correctly. NVIDIA says this hardware is 9x faster than doing the same work on the shader cores of last-gen Pascal alone, and it is this speed-up that makes simple real-time ray casting possible.
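A little arithmetic shows why the intersection step is so expensive. This sketch counts the tests a naive tracer would make if every ray were checked against every triangle; the 1920x1080 resolution, one ray per pixel, one million triangles and 60fps are all assumed, illustrative inputs, and the function name is hypothetical.

```python
# Naive cost model: every ray is tested against every triangle.
# All inputs in the example are illustrative assumptions.


def naive_tests_per_second(width: int, height: int, rays_per_pixel: int,
                           triangles: int, fps: int) -> int:
    rays_per_frame = width * height * rays_per_pixel
    tests_per_frame = rays_per_frame * triangles
    return tests_per_frame * fps


if __name__ == "__main__":
    tests = naive_tests_per_second(1920, 1080, rays_per_pixel=1,
                                   triangles=1_000_000, fps=60)
    print(f"{tests:,} ray/triangle tests per second")
```

That comes to roughly 124 trillion ray/triangle tests per second. Real renderers prune most of these tests with spatial data structures, so this is an upper bound, but it shows the scale of the problem dedicated hardware is tackling.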

Even though Turing is much more capable at ray tracing than any other architecture, developers will need to use it intelligently and sparingly. We expect to see it used where perfect shadows are required or where very shiny objects are in the scene. If rasterisation is the cake, ray tracing/casting is the cherry on top: small yet perfect. Developers need to add specific ray-tracing support to their game engines, and NVIDIA promises that a number of high-profile players are doing just that right now.

NVIDIA is also introducing a number of new variable-rate shading techniques that aim to speed up processing without noticeably reducing image quality. Imagine a scene where there is little colour change from one frame to the next, such as panning across the sky. There is no need to keep reshading every pixel, as the colour rarely changes. Variable-rate shading builds on this concept, selectively reshading areas at different rates depending on how similar each frame is to the last. The same idea applies to motion: when a scene pans very quickly, a savvy developer can reduce the shading rate without dropping resolution, because the human eye naturally perceives fast-moving content as blurred.
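The frame-to-frame idea can be sketched as a per-tile decision. NVIDIA describes Turing's variable-rate shading as assigning a shading rate to each 16×16-pixel screen tile; the change metric, the thresholds and the three-rate menu below are illustrative assumptions rather than NVIDIA's own heuristics, and the function names are hypothetical.

```python
# Sketch of content-adaptive shading-rate selection. Tile size follows
# NVIDIA's description of Turing VRS; the change metric and thresholds
# are illustrative assumptions.

Frame = list[list[float]]  # greyscale frame, values in [0, 1]

TILE = 16  # shading rates are chosen per 16x16-pixel screen tile


def tile_change(cur: Frame, prev: Frame, ty: int, tx: int) -> float:
    """Mean absolute per-pixel difference inside one tile (edge tiles may be smaller)."""
    total, count = 0.0, 0
    for y in range(ty * TILE, min((ty + 1) * TILE, len(cur))):
        for x in range(tx * TILE, min((tx + 1) * TILE, len(cur[0]))):
            total += abs(cur[y][x] - prev[y][x])
            count += 1
    return total / count


def choose_rates(cur: Frame, prev: Frame,
                 low: float = 0.01, high: float = 0.05) -> list[list[str]]:
    """Pick a shading rate per tile: '4x4' shades once per 4x4 pixel block,
    '2x2' once per 2x2 block, '1x1' shades every pixel."""
    tiles_y = (len(cur) + TILE - 1) // TILE
    tiles_x = (len(cur[0]) + TILE - 1) // TILE
    rates = []
    for ty in range(tiles_y):
        row = []
        for tx in range(tiles_x):
            change = tile_change(cur, prev, ty, tx)
            if change < low:
                row.append("4x4")  # nearly unchanged: shade sparsely
            elif change < high:
                row.append("2x2")  # some change: moderate rate
            else:
                row.append("1x1")  # lots of change: full-rate shading
        rates.append(row)
    return rates
```

A static sky tile drops to one shading operation per 4×4 block of pixels, a sixteenth of the full-rate work, while a tile full of change keeps full-rate shading.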

The sum of these architectural changes is a consumer GPU that is truly massive in design: 18.6bn transistors in the flagship TU102 chip, heaps and heaps of cores, new super-fast GDDR6 memory, and a more efficient design at almost every stage to boot.

Just how well Turing performs in product form, namely the GeForce RTX 2080 Ti, RTX 2080 and RTX 2070, is the real question on everyone's lips. Games need to adopt the new technologies enabled by the Tensor and RT cores quickly if Turing is to provide a visually meaningful upgrade to the user experience. But going by specification and potential alone, Turing appears to be a good step in the right direction for NVIDIA.

As always, Scan Computers will be selling a wide range of GeForce RTX graphics cards. You can peruse our selection on our website.