HDR EDUCATIONAL PIECE

What is HDR?

HDR or High Dynamic Range is a term that pops up more and more these days, with everything from TVs to cameras advertising support for HDR, but what really is it?

Dynamic range is the number of “stops” between black and white that a camera, display or eye can capture or perceive, with each stop representing a doubling of the amount of light. This range between black and white gives us detail in our images. High Dynamic Range content aims to capture a greater amount of detail, to avoid crushing shadows or clipping highlights. If we are working with a limited dynamic range, we have to expose for either the dark areas or the highlights, and the opposite end of the range will lose detail. For example, exposing for our shadows can cause the highlights of an image to “blow out”, resulting in a solid white sky rather than the gradient we would expect.

We measure luminance in nits, or candelas per square meter (cd/m²). If you’ve shopped for a new TV in the last few years you may have seen nit values of anywhere from 300 up to 1000. SDR, or standard dynamic range, the current broadcast standard, has a maximum brightness of 100 nits. With the introduction of HDR, some standards support a theoretical maximum brightness of 10,000 nits, which is incidentally the approximate maximum brightness that the human eye can perceive at any given time.

SDR images have a range of roughly 6-7 stops, whilst HDR displays support 10 or more stops. Human vision is far more sensitive to light than it is to colour and is capable of a range of up to roughly 20 stops. However, the human eye's response to light is not linear, it is logarithmic. This means that whilst each stop is a doubling of the light, in very bright scenes our eyes will only notice a fractional difference.
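Because each stop is a doubling of light, the number of stops between two luminance levels is the base-2 logarithm of their ratio. The short Python sketch below illustrates that arithmetic. The function name and the black-level figures are assumptions chosen for illustration, not measurements of any real display.

```python
import math


def dynamic_range_stops(brightest_nits: float, darkest_nits: float) -> float:
    """Return the number of stops between two luminance levels.

    One stop is a doubling of light, so the count is log2 of the ratio.
    """
    if brightest_nits <= 0 or darkest_nits <= 0:
        raise ValueError("luminance values must be positive")
    return math.log2(brightest_nits / darkest_nits)


# Hypothetical figures: a 100 nit SDR peak over a 1 nit black level,
# and a 1000 nit HDR peak over a 0.5 nit black level.
sdr_stops = dynamic_range_stops(100.0, 1.0)
hdr_stops = dynamic_range_stops(1000.0, 0.5)

print(f"SDR example: {sdr_stops:.1f} stops")
print(f"HDR example: {hdr_stops:.1f} stops")
```

With these assumed black levels the SDR example lands between 6 and 7 stops and the HDR example just under 11, in line with the ranges quoted above.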

Linear vs logarithmic

If we look at a greyscale, we can see how linear and logarithmic encoding work. The top greyscale is our reference, going from black on the left to white on the right.

The greyscale underneath shows us how linear encoding stores the data. If we assume that we are working with an 8 bit image, we have 256 possible values to store our data. With the darkest stop stored in 8 values, the second in 16, the third in 32 and so on, by the time we reach the top of the range we are using 128 values, those between 128 and 256, to store a single stop. This is half of the available data! In contrast, we are only using 8 values to store the darkest stop. Our eyes are less sensitive to differences in the highlights, so this distribution of data is not the best way to capture the detail in our scene.

This greyscale shows a logarithmically encoded image. You can see how evenly distributed the data now is.

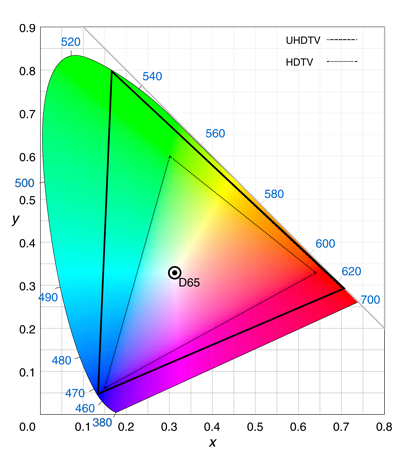
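The difference between the two encodings can be checked with a few lines of Python. This is a minimal sketch: both function names are hypothetical, and the logarithmic case is idealised as a pure log curve that gives every stop an equal share of code values, which real camera log curves only approximate.

```python
def linear_values_per_stop(bit_depth=8, stops=5):
    """Count the code values each stop occupies under linear encoding.

    Returned darkest stop first. Each stop down halves the signal,
    so it also halves the number of code values available.
    """
    top = 2 ** bit_depth
    counts = []
    for _ in range(stops):
        bottom = top // 2
        counts.append(top - bottom)
        top = bottom
    return counts[::-1]


def log_values_per_stop(bit_depth=8, stops=5):
    """Idealised log encoding: every stop gets an equal share of values."""
    return [2 ** bit_depth / stops] * stops


print(linear_values_per_stop())  # [8, 16, 32, 64, 128]
print(log_values_per_stop())     # [51.2, 51.2, 51.2, 51.2, 51.2]
```

Under linear encoding the brightest stop takes 128 of the 256 values while the darkest takes 8. The idealised log encoding gives each of the five stops roughly 51.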

Colour spaces

So, now that we understand how luminance plays its part in an HDR image, where does our chrominance, or colour, fit in? Well, due to the extended luminance values, we have the ability to capture and display a wider range of colour tonality. The diagram below shows the SDR Rec. 709 colour space as HDTV and the HDR standard Rec. 2020 as UHDTV. You can see from the plotted points how vast the Rec. 2020 colour space is when compared with its SDR counterpart.

As Rec. 2020 is such a large colour space, there are no monitors that can currently display this full gamut.

Bit Depth

An important thing to consider when choosing to work in HDR is the bit depth of your camera's recording format. For HDR, 10 bit colour is the minimum required for both cameras and displays. As HDR standards have a wider colour gamut, our devices must be capable of capturing or displaying this information. If they are not, we will see banding in colour gradients rather than a smooth transition. To put this in perspective, an 8 bit colour depth means that we have 256 values for each of the red, green and blue channels. This gives us over 16 million colours, which seems like a lot until we look at higher bit depths. 10 bit colour gives us 1024 values per colour channel, resulting in over a billion colour tones. At the very high end, some HDR displays support 12 bit colour; with 4096 values per channel we have over 68 billion colours.
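The colour counts above follow directly from the bit depth: each channel has 2 to the power of the bit depth values, and the total is that figure cubed for the red, green and blue channels. A small Python sketch of the arithmetic, with a function name chosen purely for illustration:

```python
def colours_for_bit_depth(bits_per_channel):
    """Return (values per channel, total RGB colours) for a bit depth."""
    levels = 2 ** bits_per_channel
    return levels, levels ** 3


for bits in (8, 10, 12):
    levels, colours = colours_for_bit_depth(bits)
    print(f"{bits} bit: {levels} values per channel, {colours:,} colours")

# 8 bit: 256 values per channel, 16,777,216 colours
# 10 bit: 1024 values per channel, 1,073,741,824 colours
# 12 bit: 4096 values per channel, 68,719,476,736 colours
```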

Shooting HDR

Unlike the traditional method of creating HDR images, whereby you would take multiple shots, each at a different exposure, and then combine them later, most modern professional cameras can shoot 12+ stops, making them suitable for HDR workflows. As such, it is not a question of whether the camera “supports” HDR but rather how many stops the camera is capable of capturing, and at what bit depth. On top of this, you would want to ensure that your camera is capable of recording a wide colour gamut. For this reason, RAW recording is desirable if the image is to be colour graded.

Understanding different HDR standards

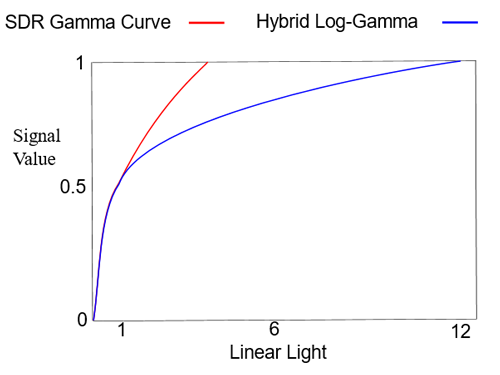

As HDR is still an emerging technology, there are many different standards that may seem very confusing at first. What is the difference between HDR10+, Dolby Vision and Hybrid Log-Gamma? Well, the first thing to understand is what’s known as the EOTF, or Electro-Optical Transfer Function. Although this sounds very complex, it can be simply described as a mathematical function that turns an electronic signal into visible light. This is a digital to analogue conversion that tells the display how many nits a particular data value should be displayed at. Across the three main HDR standards there are two EOTFs in use. Dolby Vision and HDR10+ use the PQ, or Perceptual Quantiser, EOTF. The Hybrid Log-Gamma standard uses its own HLG EOTF.
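To make the idea of an EOTF concrete, here is a minimal Python sketch of the PQ curve. The constants are the ones published in SMPTE ST 2084 and are not given in this piece. The function names are illustrative, and the sketch works on a normalised signal between 0.0 and 1.0 rather than on real 10 or 12 bit code values, so treat it as an illustration rather than a production implementation.

```python
# PQ constants as published in SMPTE ST 2084 (not taken from this article).
M1 = 2610 / 16384
M2 = 2523 / 4096 * 128
C1 = 3424 / 4096
C2 = 2413 / 4096 * 32
C3 = 2392 / 4096 * 32
PEAK_NITS = 10000.0


def pq_eotf(signal):
    """Map a normalised PQ signal (0.0 to 1.0) to luminance in nits."""
    if not 0.0 <= signal <= 1.0:
        raise ValueError("signal must be between 0.0 and 1.0")
    p = signal ** (1 / M2)
    return PEAK_NITS * (max(p - C1, 0.0) / (C2 - C3 * p)) ** (1 / M1)


def pq_inverse_eotf(nits):
    """Map luminance in nits (0 to 10,000) back to a normalised PQ signal."""
    if not 0.0 <= nits <= PEAK_NITS:
        raise ValueError("nits must be between 0 and 10000")
    y = (nits / PEAK_NITS) ** M1
    return ((C1 + C2 * y) / (1 + C3 * y)) ** M2


for nits in (100.0, 1000.0, 10000.0):
    print(f"{nits:>7.0f} nits -> signal {pq_inverse_eotf(nits):.3f}")
```

Running the loop shows that 100 nits, the SDR peak, already sits at roughly half of the PQ signal range. The curve spends its values where the eye is most sensitive and leaves the upper half for highlights.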

Hybrid Log-Gamma

Hybrid Log-Gamma was developed jointly by the BBC and the Japanese broadcaster NHK. The intent behind it was to deliver both SDR and HDR signals in a single broadcast stream. By using a slightly modified version of the EOTF used in the SDR Rec. 709 standard, the image will look correct on SDR displays; if the display supports HDR, however, the peak luminance level will be stretched. The maximum peak brightness of an HLG signal is 5000 nits, although most mastering is done to 1000 nits.

The advantage of HLG is that it does not require multiple streams from the broadcaster, and does not need any additional metadata to be displayed correctly on the consumer's TV.

The disadvantage of HLG is that due to the lack of metadata within the HLG standard, it does not support as wide a dynamic range as Dolby Vision or HDR10+.

Dolby Vision

Dolby Vision was developed by the renowned Dolby Laboratories and provides the highest quality of any HDR format. Designed to support up to 10,000 nits, Dolby Vision is mastered with 12 bit colour accuracy in either the Rec. 2020 colour space or, when mastering to 1000 nits, DCI-P3. Unlike HLG, Dolby Vision uses dynamic metadata to tell the display the maximum and minimum nit level for each individual frame. When mastering a Dolby Vision HDR file, the common convention is to have an SDR monitor present as well. A software or hardware CMU (Content Mapping Unit) takes the HDR image and maps it to the SDR display. You can then perform what is known as a trim pass to tweak the SDR version of your content.

There are several advantages to working with Dolby Vision. Through the use of dynamic metadata, the content can be mapped to the display used by the consumer, and it is mastered in 12 bit at up to 4000 nits, the brightest that current Dolby Vision mastering displays can support!

The largest disadvantage of Dolby Vision is that the overall workflow can be quite costly. As you must have a Dolby Vision license to master in this format, as well as a certified Dolby Vision reference monitor, you can end up spending quite a bit before you even sit down to grade! Due to this inherent cost, Dolby Vision is generally aimed at high-end cinema delivery, with large platforms like Netflix and Amazon also mastering to this standard.

HDR10 & HDR10+

HDR10 and HDR10+ are some of the newest HDR standards around. Like Dolby Vision, both HDR10 and HDR10+ use the PQ EOTF; however, there are some differences. HDR10 supports up to 1000 nits and uses static metadata, meaning that the tone mapping curve is fixed for the whole programme. This gives HDR10 less dynamic range and can lead to highlights being clipped. HDR10+, on the other hand, does use dynamic metadata to map content to the display.

The largest advantage of HDR10 and HDR10+ is that they are open standards and as such do not require an expensive license to master in.

The largest disadvantage of HDR10 (not HDR10+) is that it is not backwards compatible. This means that you would need to perform both an HDR and an SDR grade, lengthening the time spent in post production and in turn increasing the cost. It is also worth noting that neither HDR10 nor HDR10+ supports more than 1000 nits.

MaxFALL and MaxCLL

When working with HDR you may see the terms MaxFALL and MaxCLL. MaxFALL, or Maximum Frame Average Light Level, is metadata that defines, in nits, the highest average light level of any single frame in the content.

MaxCLL, or Maximum Content Light Level, defines, in nits, the maximum light level of any single pixel in the content.
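As a rough illustration, both values can be computed by scanning every frame of a programme. The Python sketch below assumes each frame is already available as a flat list of per-pixel light levels in nits, which is a simplification made for this example. The tiny four-pixel frames and the function names are hypothetical.

```python
def max_cll(frames):
    """Brightest single pixel, in nits, found in any frame."""
    return max(max(frame) for frame in frames)


def max_fall(frames):
    """Highest per-frame average light level, in nits."""
    return max(sum(frame) / len(frame) for frame in frames)


# Hypothetical three-frame clip, four pixels per frame, values in nits.
frames = [
    [0.5, 20.0, 100.0, 80.0],       # dim frame
    [5.0, 10.0, 900.0, 5.0],        # one small, very bright highlight
    [200.0, 300.0, 250.0, 250.0],   # evenly bright frame
]

print(f"MaxCLL:  {max_cll(frames)} nits")   # 900.0
print(f"MaxFALL: {max_fall(frames)} nits")  # 250.0
```

Note that the two values come from different frames here. The brightest pixel sits in a frame that is otherwise dark, while the highest average belongs to the evenly lit frame.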