AI application performance is determined by three major elements: GPUs, data storage and the network. As GPU compute performance continues to rise at an astounding rate, GPUs consume and analyse data at much higher rates than many storage solutions can deliver, resulting in low utilisation of expensive GPU resources and dramatically extended project times and delayed results.

Although professional-grade GPUs have large inbuilt memory capacities, large-volume external storage is required for training data and to handle the many iterations an AI model may evolve through. Traditional storage systems, although capable of immense scale, lack the high-throughput, low-latency attributes required for multi-GPU systems and are often bloated with large operating systems packed with advanced data management features that are simply not needed for AI workloads.

PEAK:AIO AI Data Servers

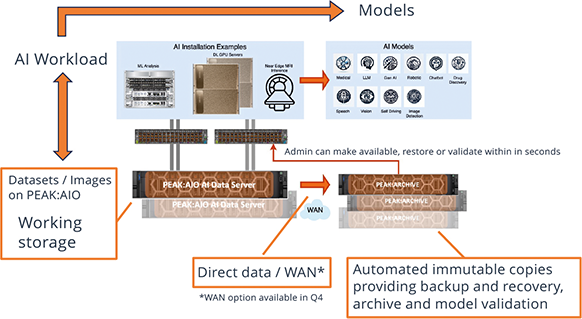

PEAK:AIO is a software-defined storage (SDS) platform designed from the ground up to meet the specific needs of AI applications. Deployed on server technology from the likes of Dell, PEAK:AIO creates an AI Data Server that delivers the ultra-low latency and incredible bandwidth needed to optimise GPU utilisation. Using GPUDirect and RDMA protocols across NFS, NVMe-oF and S3, PEAK:AIO AI Data Servers are capable of delivering up to 160GB/sec per 2U node to the GPU compute, outperforming some of the largest multi-node solutions in a simple, cost-effective 2U footprint.

PEAK:AIO enables organisations to start as small as 80TB and grow seamlessly up to 3PB per 2U node as projects increase in scale, simply by adding drives or expanding nodes.

PEAK:AIO dramatically reduces the cost of high-performance AI data storage, enabling a larger percentage of budget to be spent on GPU compute resource, providing a more effective overall solution, faster project results and greater return on investment. PEAK:AIO also supports more sustainable business practices, reducing the impact of growing AI power demands by a ratio of as much as 6:1.

PEAK:INFERENCE

PEAK:INFERENCE, available later this year from Scan and PEAK:AIO, is a fully integrated solution designed for real-time AI inference in environments where traditional IT infrastructure is either unavailable or undesired. By combining GPU compute, high-performance storage, and the deployed AI model into a single server, it offers everything needed to run private, on-premises inference with no external dependencies. This makes it ideal for use cases such as company-specific GPTs, real-time decision-making in clinical or field settings, and any environment requiring fast, secure, in-house AI processing.

With the optional integration of Scan Cloud, organisations can also replicate data centrally for ongoing training or long-term history, ensuring that insights generated at the edge or in remote locations can continuously feed and refine core models. PEAK:INFERENCE eliminates the complexity and latency of traditional infrastructure while enabling efficient, scalable, and secure AI deployment at the point of need.

PEAK:ARCHIVE

There is also a PEAK:ARCHIVE option to address the need for regulatory and audit compliance - particularly in sectors such as healthcare, finance and legal, where data provenance and accuracy are critically examined. The PEAK:ARCHIVE solution provides traceability of AI decisions back to their source data, which is key for issue diagnosis and outcome understanding, and uses immutable datasets to safeguard against tampering.

In addition, PEAK:ARCHIVE is designed to integrate with industry-standard backup platforms such as Veeam and Commvault, providing further flexibility for organisations with existing data protection policies.