Arm Processors

Flexible and adaptable processor solutions for servers

Arm CPU technology has long been the processor of choice for smartphones and tablets, and is commonplace in other embedded devices where low power consumption is a key consideration. In the last few years, however, as enterprise workloads such as HPC and AI have evolved, Arm CPUs have become more attractive in a server environment. This shift has come about because an Arm-based processor works differently from a traditional x86 server CPU from Intel or AMD.

A New Approach for New Workflows

In brief, Arm processors have dominated the battery-powered and low-power markets because their design is RISC-based rather than CISC-based. CISC (complex instruction set computer) designs are typified by processors compatible with Intel’s original 8086 from the late 1970s - any CPU based on this original design is termed an x86 processor. Although a processor born in the 1970s may not seem very cutting edge, this initial design has been updated and superseded many times to arrive at the modern 64-bit x86 Intel Xeon and AMD EPYC processors we see today. Arm CPUs, on the other hand, use a RISC (reduced instruction set computer) design that has evolved over multiple generations since the 1980s, from its origins with the original Acorn and BBC Micro computers, into the Arm CPUs we have today.

| CISC-based Design | RISC-based Design |
|---|---|
| Complex instructions | Simple instructions |
| High number of instructions (100-300) | Low number of instructions (30-40) |
| Variable-length instructions | Fixed-length instructions |
| Multiple actions per instruction | One action per instruction |
| High power consumption (100W+) | Low power consumption (5-10W) |
| Complex hardware, simple software | Simple hardware, complex software |

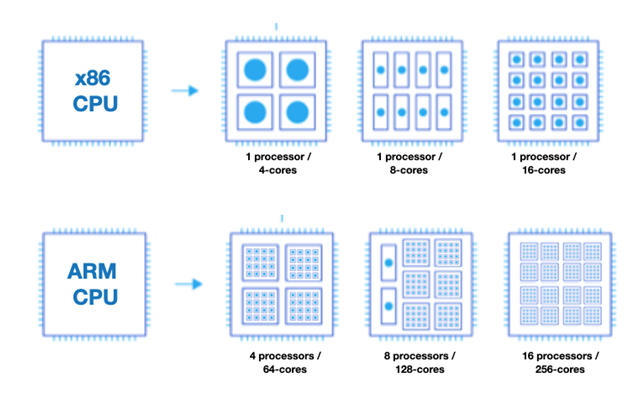
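To make the fixed-length versus variable-length row of the comparison more concrete, here is a minimal Python sketch. It does not use a real Arm or x86 encoding: the 4-byte width mirrors the 32-bit instructions of 64-bit Arm, while the "first byte holds the length" scheme is a toy stand-in for variable-length encodings, and the function names `split_fixed` and `split_variable` are purely illustrative.

```python
def split_fixed(stream: bytes, width: int = 4) -> list[bytes]:
    """Fixed-length encoding: every instruction boundary is known in advance."""
    if len(stream) % width:
        raise ValueError("stream is not a whole number of instructions")
    # Each slice is independent, so boundaries can be found without reading any bytes.
    return [stream[i:i + width] for i in range(0, len(stream), width)]


def split_variable(stream: bytes) -> list[bytes]:
    """Toy variable-length encoding (assumption): byte 0 of each instruction is its total length."""
    instructions = []
    i = 0
    while i < len(stream):
        length = stream[i]
        if length == 0 or i + length > len(stream):
            raise ValueError("malformed instruction")
        instructions.append(stream[i:i + length])
        # The next boundary is only known after this instruction has been inspected.
        i += length
    return instructions
```

With a fixed width, the decoder can locate every instruction without examining the data, whereas the variable-length version has to walk the stream one instruction at a time. That is one reason simpler decode hardware is associated with RISC-style designs.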

Because RISC-based processors use simpler instruction sets made up of fewer, single-action instructions, they ultimately consume less power, so they are ideal for devices that run predominantly on batteries or draw very little power. In x86 Xeon and EPYC CPUs, the complex instruction sets are processed across a number of cores - starting at 8 cores in entry models and rising to 128 in models at the high end. Scaling the core count in this way increases performance, giving the CPU the speed and power to handle more demanding computing workloads. By comparison, an Arm CPU employs many smaller, less sophisticated, low-power processors that each have multiple cores themselves, so the compute tasks are actually shared across hundreds of microprocessors. This method of increasing performance is sometimes referred to as scaling out, and can result in an Arm server delivering greater processing power while using less energy and requiring less cooling than an x86-based equivalent. It also allows different types of cores in the CPU to handle different workloads.

This difference in approach is further enhanced when we consider that server CPU generations are refreshed every two to three years, whereas smartphone and tablet CPUs are updated at least once a year to maintain demand in a very competitive market. This faster development cycle has led to much more evolution in the Arm space than in x86 over the last decade, resulting in ever smaller CPU architectures. Typically, x86 CPUs have gone from 14nm to 10nm and recently to 7nm, whereas Arm-based CPUs have gone through 14, 10, 7, 5 and 4nm designs in the same time. Each time the transistors are shrunk, a greater number can fit into the CPU, increasing its overall performance. This makes Arm CPUs even more attractive to the server market, where there is an increase in software-defined technologies and in applications that break down into smaller but more numerous tasks, such as high performance computing, machine learning, deep learning and AI.
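As a rough illustration of why each shrink matters, the sketch below computes an idealised density gain between two node names. It assumes that every feature shrinks in proportion to the node name, so area per transistor falls with the square of the ratio. In practice, node names are marketing labels rather than literal dimensions, so real gains differ. The function name `ideal_density_gain` is hypothetical.

```python
def ideal_density_gain(old_nm: float, new_nm: float) -> float:
    """Idealised transistor-density gain, assuming linear dimensions scale with the node name."""
    if old_nm <= 0 or new_nm <= 0:
        raise ValueError("node sizes must be positive")
    # Halving both width and height of a feature fits four times as many in the same area.
    return (old_nm / new_nm) ** 2


if __name__ == "__main__":
    # Node pairs taken from the progression described in the text above.
    for old, new in [(14, 10), (14, 7), (14, 4)]:
        print(f"{old}nm -> {new}nm: about {ideal_density_gain(old, new):.1f}x (idealised)")
```

Under this simplification, a move from 14nm to 7nm would allow roughly four times as many transistors in the same area. Treat that as an upper-bound intuition, not a measured figure.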

Tailor your cores to your workload

Although we may refer to Arm-based servers, Arm, unlike Intel or AMD, is not the manufacturer of these CPUs. Brands such as Ampere, NVIDIA and Qualcomm license Arm technology and develop Arm-based CPUs using a combination of the cores below, depending on which workloads they want to address with that processor. A core that was originally designed for mobile use may have a particular advantage or useful characteristic when applied to a server application or workflow.

Cortex-A Cores

The Arm Cortex-A is a group of 32-bit and 64-bit processor cores optimised for supreme performance at the most cost-effective price.

Cortex-A715

Second-generation Armv9 “big” CPU for best-in-class efficient performance

• The CPU cluster workhorse across "big.LITTLE" configurations

• Targeted microarchitecture optimizations for 20% power efficiency improvements

Cortex-A710

First-generation Armv9 “big” CPU that offers a balance of performance and efficiency

• Addition of Armv9 architecture features for enhanced performance and security

• 30% increase in energy efficiency compared to Cortex-A78

Cortex-A510

First-generation Armv9 high-efficiency “LITTLE” CPU

• Large performance increases for a highly efficient CPU

• Innovative microarchitecture upgrades

• Over 3x uplift in ML performance compared to Cortex-A55

Cortex-A78

The fourth-generation high-performance CPU based on DynamIQ technology, and the most efficient premium Cortex-A CPU

• Built for next generation consumer devices

• Enabling immersive experiences on new form factors and foldables

• Improving ML device responsiveness and capabilities such as face and speech recognition

Cortex-A78C

Providing market-specific solutions with advanced security features and large big-core configurations

• Performance for laptop class productivity and gaming on-the-go

• Advanced data and device security with Pointer Authentication

• Improved scalability with big-core-only configurations of up to 8 cores and up to 8MB of L3 cache

Cortex-A78AE

Arm’s most advanced processor designed for safety-critical applications

• Suited to complex automated driving and industrial autonomous systems

• Split-Lock capability with hybrid mode for flexible operations

• Enhanced support for ISO 26262 ASIL B and ASIL D safety requirements

Arm CPUs and 3XS Servers - the Perfect Partnership

The 3XS team of server architects has developed a range of rackmount solutions based on Ampere Altra processors - built on the Arm architecture - to tackle high-demand, compute-bound workloads. Built on Gigabyte chassis, these servers use Ampere CPUs that offer improvements in total cost of ownership (TCO) by running more efficiently per core and at a lower price per core. 1U and 2U servers feature a single-socket Altra or Altra Max processor with up to 128 Armv8.2 cores, a 3.0GHz frequency and 128 PCIe 4.0 lanes, with support for up to 4TB of RAM.

| 1U Server | 2U Server | 4U Server | 4-node 2U Server |
|---|---|---|---|
| Up to 2x Ampere Altra or Ampere Altra Max CPUs | Up to 2x Ampere Altra or Ampere Altra Max CPUs | Ampere Altra or Ampere Altra Max CPU | Up to 2x Ampere Altra or Ampere Altra Max CPUs |
| - | Up to 2 GPUs | Up to 8 GPUs | Up to 4 GPUs |
| For edge computing and SDN | For AI training, AI inferencing and HPC | For AI training, AI inferencing and HPC | For HPC, HCI and cloud computing |