Intel Xeon Scalable

Server Platforms

Performance made flexible, next-gen

Intel server CPUs

Intel Xeon Scalable CPUs

Since the platform's initial release in 2017, Intel has continued to develop new versions of its Xeon Scalable server CPUs. Currently there are three generations available: the latest 5th gen based on the Emerald Rapids architecture, the 4th gen based on the Sapphire Rapids architecture and the value-orientated 3rd gen based on the Ice Lake architecture.

Intel 5th Gen Xeon Scalable Platform

Building on the 4th gen CPUs, Intel 5th gen Xeon Scalable processors powered by the Emerald Rapids architecture deliver market-leading IT infrastructure, with more compute and faster memory at the same TDP as the previous generation. Their software and platform are compatible with the 4th gen, so minimal testing and validation is needed when deploying new systems.

Intel Emerald Rapids Architecture

Cores

Up to 64 cores per socket

I/O

80 lanes of PCIe 5.0 for GPUs, SmartNICs, DPUs & NVMe SSDs

Memory

Support for up to 8TB of 8-channel DDR5 and Intel Optane Memory

Intel 5th gen Xeon Scalable Line Up

Intel Xeon Platinum 8500

High-end dual-socket servers for the most demanding workflows

Cores: up to 64

Level 3 Cache: 60-320MB

Max memory speed: 5,600MHz

Intel Optane Memory support

TDP: 300-350W

Architecture: Emerald Rapids

Intel Xeon Gold 6500

Mid-range dual-socket servers for everyday workflows

Cores: up to 32

Level 3 Cache: 22.5-60MB

Max memory speed: 5,200MHz

Intel Optane Memory support

TDP: 195-270W

Architecture: Emerald Rapids

Intel Xeon Gold 5500

Mid-range dual-socket servers for everyday workflows

Cores: up to 28

Level 3 Cache: 22.5-52.5MB

Max memory speed: 4,800MHz

Intel Optane Memory support

TDP: 165-205W

Architecture: Emerald Rapids

Intel Xeon Silver 4500

Entry-level dual-socket servers for everyday workflows

Cores: up to 24

Level 3 Cache: 22.5-45MB

Max memory speed: 4,400MHz

TDP: 125-185W

Architecture: Emerald Rapids

Intel Xeon Platinum 8500U, Gold 5500U, Bronze 3500U

Designed for single-socket servers for everyday workloads

Cores: up to 48

Level 3 Cache: 22.5-260MB

Max memory speed: 4,800MHz

TDP: 125-300W

Architecture: Emerald Rapids

Intel Xeon Platinum 8500N, Gold 6500N

High-end single and dual-socket servers for networking-optimised workflows

Cores: up to 52

Level 3 Cache: 60-300MB

Max memory speed: 5,200MHz

Intel Optane Memory support

TDP: 205-300W

Architecture: Emerald Rapids

Intel Xeon Platinum 8500P/V

High-end single and dual-socket servers for cloud-optimised workflows

Cores: up to 64

Level 3 Cache: 300-320MB

Max memory speed: 5,600MHz

Intel Optane Memory support

TDP: 270-330W

Architecture: Emerald Rapids

Intel Xeon Platinum 8500Q, Gold 6500Q

High-end dual-socket liquid-cooled servers

Cores: up to 64

Level 3 Cache: 60-320MB

Max memory speed: 5,600MHz

Intel Optane Memory support

TDP: 350-385W

Architecture: Emerald Rapids

Intel 5th gen Xeon Scalable Features and Benefits

Trusted performance. Exceptional efficiency.

Intel 5th gen Xeon CPUs mark a new chapter in the Xeon story, offering up to 21% performance gains for the same TDP compared to the 4th gen CPUs. Building on the fixed-function accelerators that speed up specific tasks (IAA, DSA, DLB and QAT), there are workload-specific SKUs for networking, cloud and liquid cooling. The new Emerald Rapids architecture supports up to 8TB of 8-channel DDR5 memory and 80 lanes of PCIe 5.0, which provides double the bandwidth of PCIe 4.0. Finally, the maximum core count has been increased from 60 to 64 cores and the maximum memory speed is now 5,600MHz.
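To put the memory and PCIe figures above in context, the short Python sketch below estimates theoretical peak bandwidth per socket. It rests on several assumptions that are not stated on this page: the quoted 5,600MHz is treated as a 5,600MT/s transfer rate, each DDR5 channel is taken as 64 data bits (8 bytes) wide, and PCIe 4.0 and 5.0 are taken to run at 16GT/s and 32GT/s per lane with 128b/130b encoding. The function names are illustrative only, and real-world throughput will be lower than these peak values.

```python
# Theoretical peak bandwidth estimates; sustained real-world figures are lower.

def ddr5_peak_gb_s(channels: int, mega_transfers_per_s: int, bus_bytes: int = 8) -> float:
    """Peak memory bandwidth in GB/s: channels x transfer rate x bytes per transfer.

    Assumes a 64-bit (8-byte) data path per channel.
    """
    return channels * mega_transfers_per_s * 1e6 * bus_bytes / 1e9


def pcie_peak_gb_s(lanes: int, giga_transfers_per_s: float) -> float:
    """Peak one-direction PCIe bandwidth in GB/s, assuming 128b/130b encoding."""
    return lanes * giga_transfers_per_s * (128 / 130) / 8


memory_bw = ddr5_peak_gb_s(channels=8, mega_transfers_per_s=5600)
pcie5_bw = pcie_peak_gb_s(lanes=80, giga_transfers_per_s=32.0)  # assumed PCIe 5.0 rate
pcie4_bw = pcie_peak_gb_s(lanes=80, giga_transfers_per_s=16.0)  # assumed PCIe 4.0 rate

print(f"8-channel DDR5-5600: {memory_bw:.1f} GB/s per socket")
print(f"80 lanes PCIe 5.0:   {pcie5_bw:.1f} GB/s per direction")
print(f"80 lanes PCIe 4.0:   {pcie4_bw:.1f} GB/s per direction")
```

Under these assumptions the PCIe 5.0 result is exactly twice the PCIe 4.0 result, which matches the doubling described above.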

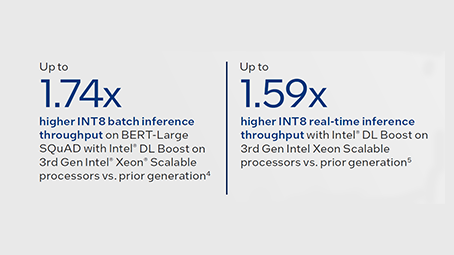

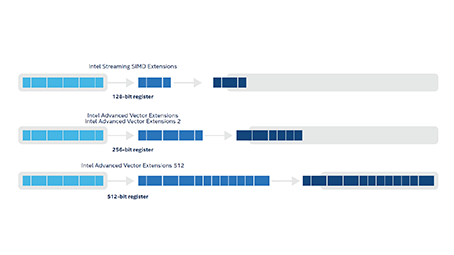

Accelerated AI

Intel Advanced Matrix Extensions (AMX) has been enhanced in the 5th gen Xeon CPUs, driving up to 2.7x better AI inference performance. Fine-tune deep learning models or train small to medium models in just minutes. Intel AMX delivers discrete accelerator performance without added hardware and complexity, and excels in transfer learning and retraining, so you can keep your models current.
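As a quick way to confirm that a Linux server exposes Intel AMX to software, the sketch below looks for the AMX feature flags the Linux kernel reports in `/proc/cpuinfo` (`amx_tile`, `amx_bf16` and `amx_int8`). This is a minimal illustration, not an Intel-provided tool: the function names are hypothetical, and it assumes a Linux host with a kernel recent enough to report these flags.

```python
from pathlib import Path

# CPU feature flags the Linux kernel reports for Intel AMX.
AMX_FLAGS = {"amx_tile", "amx_bf16", "amx_int8"}


def amx_flags_present(cpuinfo_text: str) -> set[str]:
    """Return the AMX flags found in the first 'flags' line of cpuinfo text."""
    for line in cpuinfo_text.splitlines():
        if line.startswith("flags") and ":" in line:
            flags = set(line.split(":", 1)[1].split())
            return AMX_FLAGS & flags
    return set()


def read_cpuinfo(path: str = "/proc/cpuinfo") -> str:
    """Read cpuinfo if it exists; return an empty string on non-Linux hosts."""
    p = Path(path)
    return p.read_text() if p.exists() else ""


found = amx_flags_present(read_cpuinfo())
if found == AMX_FLAGS:
    print("AMX is available:", ", ".join(sorted(found)))
else:
    print("AMX not fully reported; flags found:", sorted(found))
```

If the flags are missing on a CPU that should support AMX, the operating system kernel or the hypervisor configuration is worth checking before suspecting the hardware.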

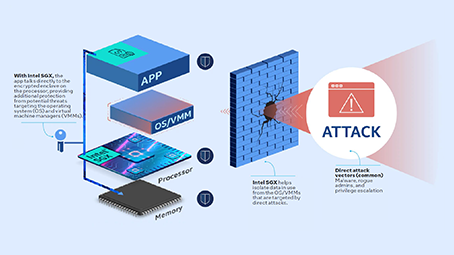

Class Leading Security

Intel Trust Domain Extensions (TDX) offers isolation and confidentiality at the virtual machine (VM) level. Within an Intel TDX confidential VM, the guest OS and VM applications are isolated from access by the cloud host, hypervisor, and other VMs on the platform. Intel TDX offers a simpler migration path for existing applications to move to a trusted execution environment (TEE).

Unrivalled Storage Performance

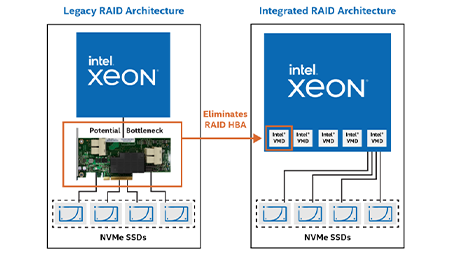

Intel Virtual RAID on CPU (VROC) is an enterprise RAID solution that unleashes the performance of NVMe SSDs, enabled by a feature in Intel Xeon Scalable processors called Intel Volume Management Device (Intel VMD), an integrated controller inside the CPU PCIe root complex. Intel VROC enables these benefits without the complexity, cost, and power consumption of traditional hardware RAID host bus adapter (HBA) cards placed between the drives and the CPU.

3XS Systems Server Solutions

As an Intel Platinum partner, Scan has developed a range of server solutions powered by the Intel Xeon Scalable platform. Built by our award-winning in-house 3XS Systems division, these solutions are optimised for a wide variety of workflows.