NVIDIA DGX Station A100

Workgroup appliance for the age of AI

High Performance Computing for the Workgroup

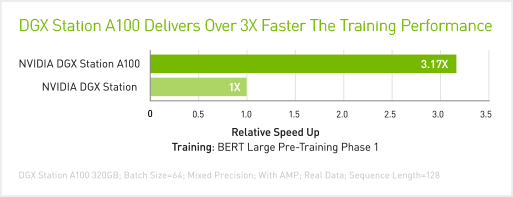

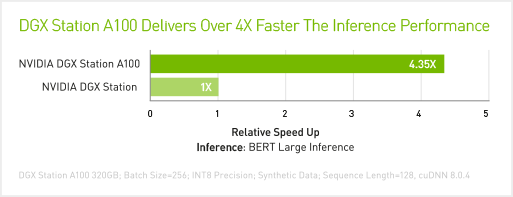

Data science teams are at the leading edge of AI innovation, developing projects that can transform their organisations and our world. As such, these teams need a dedicated AI platform that can plug in anywhere and is fully optimised across hardware and software to deliver groundbreaking performance for multiple simultaneous users, wherever they are located. The DGX Station A100 introduces double-precision Tensor Cores, the biggest milestone since the introduction of double-precision computing in GPUs. Designed for multiple simultaneous users, DGX Station A100 uses server-grade components in an office-friendly form factor. It is the only system with four fully interconnected, Multi-Instance GPU (MIG)-capable NVIDIA A100 Tensor Core GPUs and up to 320GB of total GPU memory that can plug into a standard power outlet, resulting in a powerful AI appliance that you can place anywhere.

The world's first free-standing AI system built on NVIDIA A100

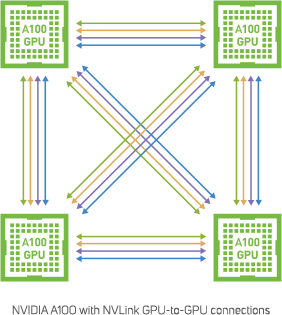

The NVIDIA DGX Station A100 provides datacentre-class AI server capabilities in a workstation form factor, suitable for use in a standard office environment without specialised power and cooling. Its design includes four NVIDIA A100 Tensor Core GPUs with either 40GB or 80GB of memory each, a 64-core server-grade CPU, NVMe storage and PCIe Gen4 buses. The DGX Station A100 also includes a Baseboard Management Controller (BMC), allowing system administrators to perform any required tasks over a remote connection. The four NVLink-interconnected GPUs deliver 2.5 petaFLOPS of performance and support Multi-Instance GPU (MIG), offering up to 28 separate GPU devices for parallel jobs and multiple users without impacting system performance.
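
The headline totals quoted above follow from simple per-GPU arithmetic. The sketch below is only an illustration of that arithmetic: the function names are hypothetical, and the figure of seven MIG instances per GPU is the A100's maximum partition count, which is not stated in the text but is consistent with its total of 28 devices.

```python
# Figures taken from the text: four A100 GPUs, 40GB or 80GB of memory each.
GPU_COUNT = 4

# Assumption: an A100 can be split into at most seven MIG instances.
MAX_MIG_INSTANCES_PER_GPU = 7


def total_gpu_memory_gb(memory_per_gpu_gb: int, gpu_count: int = GPU_COUNT) -> int:
    """Return the combined GPU memory of the system in GB."""
    return memory_per_gpu_gb * gpu_count


def total_mig_devices(
    gpu_count: int = GPU_COUNT,
    instances_per_gpu: int = MAX_MIG_INSTANCES_PER_GPU,
) -> int:
    """Return the maximum number of MIG devices the system can expose."""
    return gpu_count * instances_per_gpu


if __name__ == "__main__":
    for per_gpu in (40, 80):
        print(f"{per_gpu}GB GPUs: {total_gpu_memory_gb(per_gpu)}GB total GPU memory")
    print(f"Maximum MIG devices: {total_mig_devices()}")
```

With 80GB GPUs this gives the 320GB total quoted in the specification, and four GPUs with seven instances each give the 28 MIG devices mentioned above.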

| Specification | DGX Station A100 |
|---|---|
| GPUs | 4x A100 80GB (320GB total) |
| Memory | 512GB DDR4 |
| GPU Interconnects | NVLink |
| Storage | OS: 1x 1.92TB NVMe drive; internal storage: 7.68TB U.2 NVMe drive |
| CPU | Single AMD EPYC 7742, 64 cores, 2.25GHz - 3.4GHz |
| Networking | Dual-port 10GbE LAN, single-port 1GbE BMC management port |
| Displays | 4GB GPU memory, 4x Mini DisplayPort |
| Cooling | Water cooled |
| Power | 1.5kW |
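
To put the 1.5kW power figure in the context of a standard outlet, the current draw can be estimated as power divided by voltage. The sketch below is a rough illustration only. The 230V nominal mains voltage, the 13A outlet rating and the unity power factor are assumptions chosen for a UK office and do not come from the specification, so check the system's electrical documentation before relying on them.

```python
def current_draw_amps(
    power_watts: float, mains_volts: float, power_factor: float = 1.0
) -> float:
    """Estimate the current drawn from the mains for a given power."""
    if mains_volts <= 0:
        raise ValueError("mains_volts must be positive")
    if not 0 < power_factor <= 1:
        raise ValueError("power_factor must be in the range (0, 1]")
    return power_watts / (mains_volts * power_factor)


def fits_outlet(
    power_watts: float,
    mains_volts: float,
    outlet_rating_amps: float,
    power_factor: float = 1.0,
) -> bool:
    """Return True if the estimated current is within the outlet rating."""
    return current_draw_amps(power_watts, mains_volts, power_factor) <= outlet_rating_amps


if __name__ == "__main__":
    SYSTEM_POWER_WATTS = 1500   # 1.5kW, from the specification
    MAINS_VOLTS = 230           # assumption: UK nominal mains voltage
    OUTLET_RATING_AMPS = 13     # assumption: UK 13A socket outlet

    amps = current_draw_amps(SYSTEM_POWER_WATTS, MAINS_VOLTS)
    print(f"Estimated draw: {amps:.1f}A")
    print(f"Within outlet rating: {fits_outlet(SYSTEM_POWER_WATTS, MAINS_VOLTS, OUTLET_RATING_AMPS)}")
```

Under these assumptions the system draws roughly 6.5A, which is within the assumed 13A rating.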

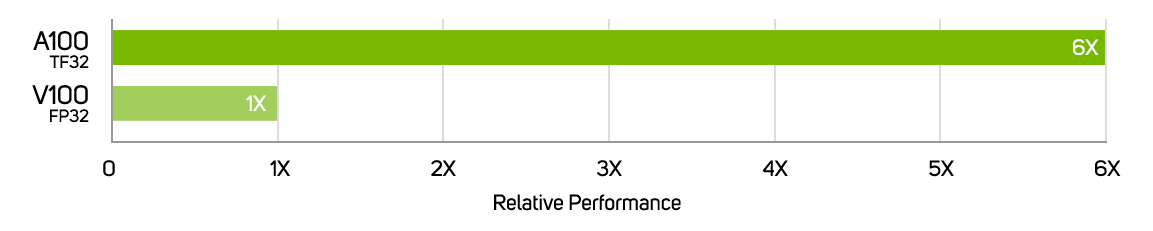

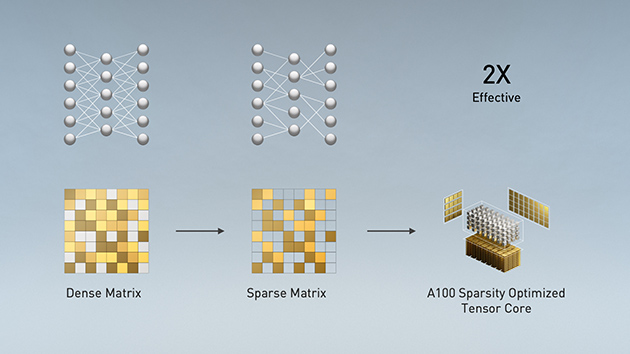

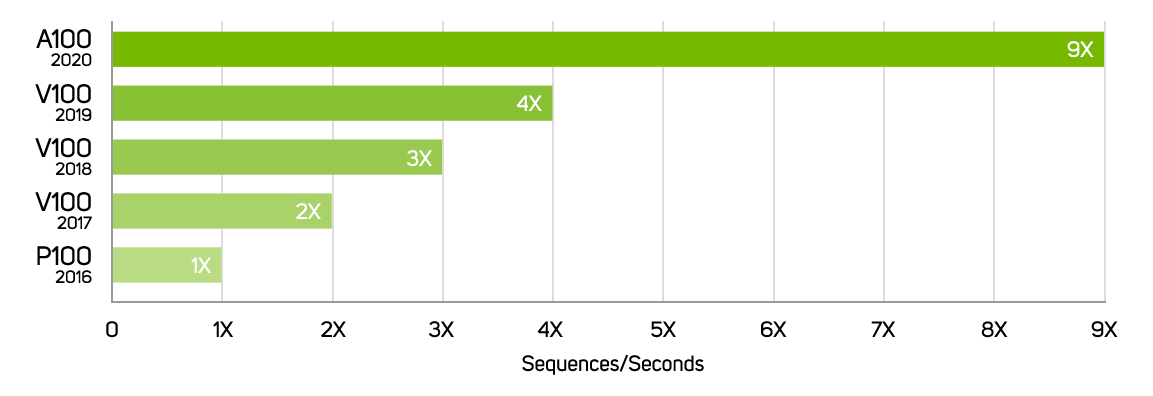

The NVIDIA Ampere architecture, designed for the age of elastic computing, delivers the next giant leap by providing unmatched acceleration at every scale. The A100 GPU brings massive amounts of compute to datacentres. To keep those compute engines fully utilised, it has a class-leading 1.6TB/sec of memory bandwidth, a 67 per cent increase over the previous generation. In addition, the A100 has significantly more on-chip memory, including a 40MB Level 2 cache (7x larger than the previous generation), to maximise compute performance.

The NVIDIA GPU Cloud

NVIDIA GPU Cloud (NGC) provides researchers and data scientists with simple access to a comprehensive catalogue of GPU-optimised software tools for deep learning and high-performance computing (HPC) that take full advantage of NVIDIA GPUs. The NGC container registry features containers for the top deep learning frameworks that are tuned, tested, certified and maintained for NVIDIA A100. It also offers third-party managed HPC application containers, NVIDIA HPC visualisation containers and partner applications.

Protect your Deep Learning Investment

NVIDIA DGX systems are cutting-edge hardware solutions designed to accelerate your deep learning and AI workloads and projects. Ensuring that your system or systems remain in optimum condition is key to consistently achieving the rapid results you need. Each DGX appliance has a range of comprehensive support contracts covering both software updates and hardware components, coupled with a choice of media retention packages to further protect any sensitive data within your DGX memory or SSDs.

| Specification | DGX Station A100 |
|---|---|
| GPUs | 4x NVIDIA A100 Tensor Core GPUs |
| GPU Specifications | 6912 CUDA cores / 432 TF32 Tensor Cores per GPU |
| GPU Memory | 80GB per GPU - 320GB total |
| GPU Interconnects | NVLink |
| CPU | AMD EPYC 7742 - 64 cores / 128 threads |
| System Memory | 512GB ECC Reg DDR4 |
| System Drives | 1x 1.92TB NVMe SSD |
| Storage Drives | 7.68TB U.2 NVMe SSD |
| Networking | 2x 10GbE LAN ports / 1x 1GbE BMC management port |
| Operating System | Ubuntu Linux |
| Power Requirement | 1.5kW |
| Dimensions | 256 x 639 x 518mm (W x H x D) |
| Weight | 43.1kg |
| Operating Temperature | 5°C - 35°C (41°F - 95°F) |